Summary: Just as with spoken language, sign language supports cognitive development in hearing infants.

Source: Northwestern University

Sign language, like spoken language, supports cognitive development in hearing infants, according to a groundbreaking new study by Northwestern University researchers.

Cognitive developmental scientists from Northwestern University discovered that observing American Sign Language (ASL) promotes cognition in hearing infants who had never been exposed to a signed language.

For 3- and 4-month-old infants, sign language offers a cognitive advantage in forming object categories. This advantage goes above and beyond the effects of pointing to and gazing at objects. This finding expands our understanding of the powerful and inherent link between language and cognition in humans.

The lead researcher is Sandra Waxman, the Louis W. Menk Chair in psychology at Weinberg College of Arts and Sciences, director of the Infant and Child Development Center at Northwestern and a faculty fellow in the University’s Institute for Policy Research.

Co-authors of the study include Miriam A. Novack, research scientist in Northwestern’s department of medical social sciences, as well as Diane Brentari and Susan Goldin-Meadow, professors of linguistics and psychology, respectively, at the University of Chicago.

“Sign language, like spoken language, promotes object categorization in young hearing infants” will publish in the October 2021 issue of the journal Cognition.

The new study builds on prior research from Waxman’s lab showing that very young infants initially link a broad range of acoustic signals to cognition. These signals include spoken human languages as well as vocalizations of non-human primates.

“We had already established a precocious link between acoustic signals and infant cognition. But we had not yet established whether this initial link is sufficiently broad to include sign language, even in infants never exposed to a sign language,” Waxman said. “This study is important because it shows the power of language, writ large.”

Because object categorization is fundamental to cognition, the researchers built upon an object categorization task that they had used in previous investigations. This was the first time, however, that the researchers presented language in the visual modality.

The experiment was conducted with 113 hearing infants, ranging from 4 to 6 months. None had previously been exposed to ASL or any other sign language.

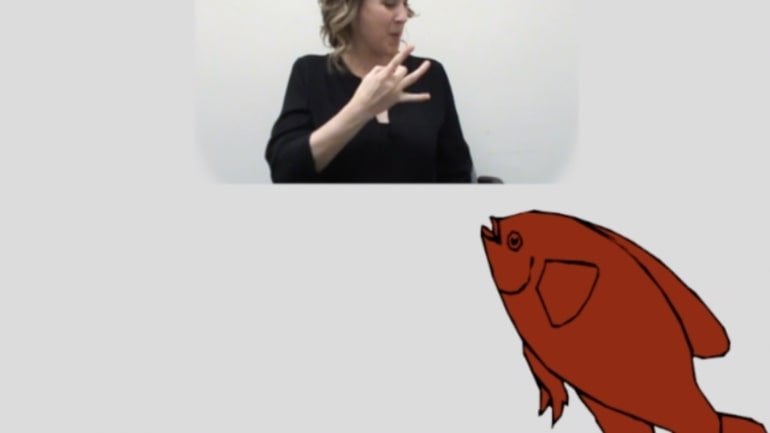

During a familiarization phase, infants viewed eight distinct images from a single category, for example, eight fish. For those in the ASL condition, these images were introduced by a woman signing about the objects. For infants in the non-linguistic control condition, the same woman merely pointed to and looked at the objects but did not produce any language.

Next, in the test phase, all infants viewed two static images: a new member of the same category (e.g., a new fish) and a new object from an entirely different category (e.g., a dinosaur).

The researchers found that at 3 and 4 months, infants in the ASL condition, but not the control condition, successfully formed the object category (e.g., fish). This result mirrored the effects observed at this age when infants listen to their native spoken language. But by 6 months, infants had narrowed this link and ASL no longer provided this cognitive advantage.

The study demonstrates that human infants, whether hearing or deaf, are equipped to link language — whether spoken or signed — to core cognitive processes like object categorization.

“What surprised us the most was that it was specifically the linguistic elements of the ASL that did the trick — not merely pointing and gesturing. Pointing and gesturing are communicative signals for sure, but they are not linguistic signals,” said Waxman.

“The message is that even before they can speak, babies learn a lot from language — even if it is not their own native language. Envelope them in your language. They will tune to the signal of their language, whether it is spoken or signed.”

About this neurodevelopment research news

Author: Stephanie Kulke

Contact: Stephanie Kulke – Northwestern University

Image: The image is credited to Northwestern University

Original Research: Closed access.

“Sign language, like spoken language, promotes object categorization in young hearing infants” by Sandra Waxman et al. Cognition.

Abstract

Sign language, like spoken language, promotes object categorization in young hearing infants

The link between language and cognition is unique to our species and emerges early in infancy. Here, we provide the first evidence that this precocious language-cognition link is not limited to spoken language, but is instead sufficiently broad to include sign language, a language presented in the visual modality.

Four- to six-month-old hearing infants, never before exposed to sign language, were familiarized to a series of category exemplars, each presented by a woman who either signed in American Sign Language (ASL) while pointing and gazing toward the objects, or pointed and gazed without language (control). At test, infants viewed two images: one, a new member of the now-familiar category; and the other, a member of an entirely new category.

Four-month-old infants who observed ASL distinguished between the two test objects, indicating that they had successfully formed the object category; they were as successful as age-mates who listened to their native (spoken) language. Moreover, it was specifically the linguistic elements of sign language that drove this facilitative effect: infants in the control condition, who observed the woman only pointing and gazing, failed to form object categories.

Finally, the cognitive advantages of observing ASL quickly narrow in hearing infants: by 5 to 6 months, watching ASL no longer supports categorization, although listening to their native spoken language continues to do so. Together, these findings illuminate the breadth of infants’ early link between language and cognition and offer insight into how it unfolds.