Summary: The brain’s ventral tegmental area (VTA) encodes not only the value of anticipated rewards but also the precise timing of when those rewards are expected. Long known for its role in producing dopamine and guiding reward prediction, the VTA is now shown to process reward expectations on multiple time scales.

Researchers used a machine learning algorithm to decode activity in VTA neurons, finding that different neurons specialize in predicting rewards seconds, minutes, or even longer into the future. This finer-grained temporal encoding gives the brain greater flexibility in decision-making and learning—mirroring the mechanisms used in cutting-edge AI systems.

Key Facts:

- Timing Precision: VTA neurons predict the moment a reward is expected, not just its presence.

- Multi-Timescale Encoding: Different VTA neurons operate on short-, medium-, and long-term reward horizons.

- AI-Neuro Synergy: A machine learning algorithm helped reveal this fine-tuned dopamine signal, showing how AI can illuminate brain function.

Source: University of Geneva

A small region of the brain, known as the ventral tegmental area (VTA), plays a key role in how we process rewards. It produces dopamine, a neuromodulator that helps predict future rewards based on contextual cues.

A team from the universities of Geneva (UNIGE), Harvard, and McGill has shown that the VTA goes even further: it encodes not only the anticipated reward but also the precise moment it is expected.

This discovery, made possible by a machine learning algorithm, highlights the value of combining artificial intelligence with neuroscience.

The study is published in the journal Nature.

The ventral tegmental area (VTA) plays a key role in motivation and the brain’s reward circuit. The main source of dopamine, this small cluster of neurons sends this neuromodulator to other brain regions to trigger an action in response to a positive stimulus.

“Initially, the VTA was thought to be merely the brain’s reward centre. But in the 1990s, scientists discovered that it doesn’t encode reward itself, but rather the prediction of reward,” explains Alexandre Pouget, full professor in the Department of Basic Neurosciences in the UNIGE Faculty of Medicine.

Experiments on animals have shown that when a reward consistently follows a light signal, for example, the VTA eventually releases dopamine not at the moment of the reward, but as soon as the signal appears. This response therefore encodes the prediction of the reward—linked to the signal—rather than the reward itself.
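The shift of the response from the reward to the signal can be illustrated with a small temporal-difference (TD) learning simulation. This is a textbook-style sketch, not the analysis used in the study: the function name `simulate_cue_reward_learning`, the number of time steps, the discount factor `gamma`, and the learning rate `alpha` are all illustrative assumptions.

```python
def simulate_cue_reward_learning(n_steps=5, gamma=0.9, alpha=0.2, n_trials=200):
    """Return per-trial (cue_error, reward_error) prediction errors.

    Assumptions (illustrative only): the cue arrives unpredictably, so the
    pre-cue baseline has a fixed value of 0; a reward of 1.0 is delivered
    on the last of n_steps time steps after the cue.
    """
    values = [0.0] * n_steps  # learned value of each time step after the cue
    history = []
    for _ in range(n_trials):
        # Prediction error at cue onset: baseline (value 0) -> first cue state.
        cue_error = gamma * values[0] - 0.0
        reward_error = 0.0
        for step in range(n_steps):
            is_last = step == n_steps - 1
            reward = 1.0 if is_last else 0.0
            next_value = 0.0 if is_last else values[step + 1]
            # TD error: what happened minus what was predicted.
            delta = reward + gamma * next_value - values[step]
            values[step] += alpha * delta
            if is_last:
                reward_error = delta
        history.append((cue_error, reward_error))
    return history


history = simulate_cue_reward_learning()
first_cue, first_reward = history[0]
last_cue, last_reward = history[-1]
print(f"first trial: cue error {first_cue:.2f}, reward error {first_reward:.2f}")
print(f"last trial:  cue error {last_cue:.2f}, reward error {last_reward:.2f}")
```

In this toy model, the prediction error on the first trial occurs entirely at the reward. After training, the error at the reward vanishes and a positive error appears at the cue instead.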

A much more sophisticated function

This “reinforcement learning”, which requires minimal supervision, is central to human learning. It’s also the principle behind many artificial intelligence algorithms that improve performance through training, such as AlphaGo, the first algorithm to defeat a world champion in the game of Go.

In a recent study, Alexandre Pouget’s team, in collaboration with Naoshige Uchida of Harvard University and Paul Masset of McGill University, shows that the VTA’s coding is even more sophisticated than previously thought.

“Rather than predicting a weighted sum of future rewards, the VTA predicts their temporal evolution. In other words, each gain is represented separately, with the precise moment at which it is expected,” explains the UNIGE researcher, who led this work.

“While we knew that VTA neurons prioritised rewards close in time over those further in the future (on the principle that a bird in the hand is worth two in the bush), we discovered that different neurons do so on different time scales, with some focusing on the reward possible in a few seconds’ time, others on the reward expected in a minute’s time, and others on more distant horizons.

“This diversity is what allows the encoding of reward timing. This much finer representation gives the learning system great flexibility, allowing it to adapt to maximise immediate or delayed rewards, depending on the individual’s goals and priorities.”
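A toy calculation shows why a diversity of time scales can carry timing information. The sketch below assumes a single future reward and idealised neurons whose cue response equals the exponentially discounted reward. The function names and the two discount factors are hypothetical, and this is not the decoding method used in the study.

```python
import math


def cue_response(reward, delay, gamma):
    """Discounted value of a single reward arriving `delay` steps after the cue."""
    return reward * gamma ** delay


def decode_delay_and_size(value_fast, value_slow, gamma_fast, gamma_slow):
    """Recover (delay, reward) from two responses with different discount factors."""
    # The ratio of the two responses cancels the reward size, leaving only the delay.
    delay = math.log(value_fast / value_slow) / math.log(gamma_fast / gamma_slow)
    reward = value_slow / gamma_slow ** delay
    return delay, reward


# Hypothetical discount factors for a "short-horizon" and a "long-horizon" neuron.
gamma_fast, gamma_slow = 0.5, 0.9

# One timescale alone cannot tell "small and soon" from "large and late".
small_soon = cue_response(reward=1.0, delay=1, gamma=gamma_fast)
large_late = cue_response(reward=4.0, delay=3, gamma=gamma_fast)
print("single-timescale responses:", small_soon, large_late)

# Two timescales together pin down both the delay and the size.
delay, reward = decode_delay_and_size(
    cue_response(4.0, 3, gamma_fast),
    cue_response(4.0, 3, gamma_slow),
    gamma_fast,
    gamma_slow,
)
print(f"decoded delay: {delay:.2f}, decoded reward: {reward:.2f}")
```

With only the short-horizon neuron, the two scenarios produce the same response. Adding a second neuron with a different discount factor separates them.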

AI and neuroscience: a two-way street

These findings stem from a fruitful dialogue between neuroscience and artificial intelligence. Alexandre Pouget developed a purely mathematical algorithm that incorporates the timing of reward processing.

Meanwhile, the Harvard researchers gathered extensive neurophysiological data on VTA activity in animals experiencing rewards.

“They then applied our algorithm to their data and found that the results matched perfectly with their empirical findings,” explains Alexandre Pouget.

While the brain inspires AI and machine learning techniques, these results demonstrate that algorithms can also serve as powerful tools to reveal our neurophysiological mechanisms.

About this neuroscience research news

Author: Antoine Guenot

Contact: Antoine Guenot – University of Geneva

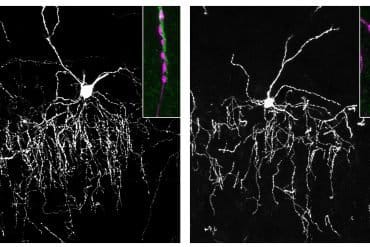

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Multi-timescale reinforcement learning in the brain” by Alexandre Pouget et al. Nature

Abstract

To thrive in complex environments, animals and artificial agents must learn to act adaptively to maximize fitness and rewards.

Such adaptive behaviour can be learned through reinforcement learning, a class of algorithms that has been successful at training artificial agents and at characterizing the firing of dopaminergic neurons in the midbrain.

In classical reinforcement learning, agents discount future rewards exponentially according to a single timescale, known as the discount factor.

Here we explore the presence of multiple timescales in biological reinforcement learning.

We first show that reinforcement agents learning at a multitude of timescales possess distinct computational benefits.

Next, we report that dopaminergic neurons in mice performing two behavioural tasks encode reward prediction error with a diversity of discount time constants.

Our model explains the heterogeneity of temporal discounting in both cue-evoked transient responses and slower timescale fluctuations known as dopamine ramps.

Crucially, the measured discount factor of individual neurons is correlated across the two tasks, suggesting that it is a cell-specific property.

Together, our results provide a new paradigm for understanding functional heterogeneity in dopaminergic neurons and a mechanistic basis for the empirical observation that humans and animals use non-exponential discounts in many situations, and open new avenues for the design of more-efficient reinforcement learning algorithms.
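The link between multiple timescales and non-exponential discounting can be checked numerically. The sketch below averages exponential discount curves over a population of discount rates and compares the result with a hyperbolic curve, 1 / (1 + delay). The exponential weighting of rates is an assumption chosen because it gives an exact hyperbolic result; it is not a distribution reported in the study, and the function names are illustrative.

```python
import math


def population_discount(delay, max_rate=50.0, step=0.001):
    """Average exp(-rate * delay) over rates weighted by exp(-rate).

    Uses midpoint-rule integration. The exponential weighting of discount
    rates is an illustrative assumption, not a result from the study.
    """
    n_bins = int(max_rate / step)
    total = 0.0
    for i in range(n_bins):
        rate = (i + 0.5) * step
        total += math.exp(-rate) * math.exp(-rate * delay) * step
    return total


def hyperbolic_discount(delay):
    """Hyperbolic discount curve with unit scale."""
    return 1.0 / (1.0 + delay)


for delay in (0, 1, 5, 10):
    averaged = population_discount(delay)
    hyperbolic = hyperbolic_discount(delay)
    print(f"delay {delay:2d}: averaged {averaged:.4f}, hyperbolic {hyperbolic:.4f}")
```

Each individual unit here discounts exponentially, yet the population average follows a hyperbolic curve, which falls off more slowly at long delays than any single exponential with the same initial slope.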