Summary: Researchers have created a deep learning algorithm that can be tricked by optical illusions, just like humans. The findings shed new light on the human visual system and may help improve artificial vision.

Source: Brown University.

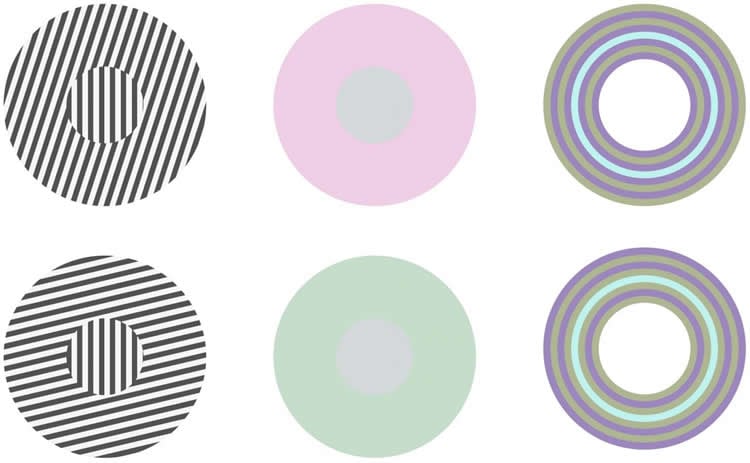

Is that circle green or gray? Are the center lines straight or tilted?

Optical illusions can be fun to experience and debate, but understanding how human brains perceive these different phenomena remains an active area of scientific research. For one class of optical illusions, called contextual phenomena, those perceptions are known to depend on context. For example, the color you think a central circle is depends on the color of the surrounding ring. Sometimes the outer color makes the inner color appear more similar, such as a neighboring green ring making a blue ring appear turquoise — but sometimes the outer color makes the inner color appear less similar, such as a pink ring making a grey circle appear greenish.

A team of Brown University computer vision experts went back to square one to understand the neural mechanisms of these contextual phenomena. Their study was published on Sept. 20 in Psychological Review.

“There’s growing consensus that optical illusions are not a bug but a feature,” said Thomas Serre, an associate professor of cognitive, linguistic and psychological sciences at Brown and the paper’s senior author. “I think they’re a feature. They may represent edge cases for our visual system, but our vision is so powerful in day-to-day life and in recognizing objects.”

For the study, the team lead by Serre, who is affiliated with Brown’s Carney Institute for Brain Science, started with a computational model constrained by anatomical and neurophysiological data of the visual cortex. The model aimed to capture how neighboring cortical neurons send messages to each other and adjust one another’s responses when presented with complex stimuli such as contextual optical illusions.

One innovation the team included in their model was a specific pattern of hypothesized feedback connections between neurons, said Serre. These feedback connections are able to increase or decrease — excite or inhibit — the response of a central neuron, depending on the visual context.

These feedback connections are not present in most deep learning algorithms. Deep learning is a powerful kind of artificial intelligence that is able to learn complex patterns in data, such as recognizing images and parsing normal speech, and depends on multiple layers of artificial neural networks working together. However, most deep learning algorithms only include feedforward connections between layers, not Serre’s innovative feedback connections between neurons within a layer.

Once the model was constructed, the team presented it a variety of context-dependent illusions. The researchers “tuned” the strength of the feedback excitatory or inhibitory connections so that model neurons responded in a way consistent with neurophysiology data from the primate visual cortex.

Then they tested the model on a variety of contextual illusions and again found the model perceived the illusions like humans.

In order to test if they made the model needlessly complex, they lesioned the model — selectively removing some of the connections. When the model was missing some of the connections, the data didn’t match the human perception data as accurately.

“Our model is the simplest model that is both necessary and sufficient to explain the behavior of the visual cortex in regard to contextual illusions,” Serre said. “This was really textbook computational neuroscience work — we started with a model to explain neurophysiology data and ended with predictions for human psychophysics data.”

In addition to providing a unifying explanation for how humans see a class of optical illusions, Serre is building on this model with the goal of improving artificial vision.

State-of-the-art artificial vision algorithms, such as those used to tag faces or recognize stop signs, have trouble seeing context, he noted. By including horizontal connections tuned by context-dependent optical illusions, he hopes to address this weakness.

Perhaps visual deep learning programs that take context into account will be harder to fool. A certain sticker, when stuck on a stop sign can trick an artificial vision system into thinking it is a 65-mile-per-hour speed limit sign, which is dangerous, Serre said.

Funding: The research was supported by the National Science Foundation (IIS-1252951) and DARPA (YFA N66001-14-1-4037).

Source: Mollie Rappe – Brown University

Publisher: Organized by NeuroscienceNews.com.

Image Source: NeuroscienceNews.com image is credited to Serre Lab/Brown University.

Original Research: Abstract for “Complementary surrounds explain diverse contextual phenomena across visual modalities” by Mély, David A.; Linsley, Drew; and Serre, Thomas in Psychological Review. Published September 20 2018.

doi:10.1037/rev0000109

[cbtabs][cbtab title=”MLA”]Brown University”Teaching Computers to See Optical Illusions.” NeuroscienceNews. NeuroscienceNews, 21 September 2018.

<https://neurosciencenews.com/optical-illusions-neural-network-ai-9901/>.[/cbtab][cbtab title=”APA”]Brown University(2018, September 21). Teaching Computers to See Optical Illusions. NeuroscienceNews. Retrieved September 21, 2018 from https://neurosciencenews.com/optical-illusions-neural-network-ai-9901/[/cbtab][cbtab title=”Chicago”]Brown University”Teaching Computers to See Optical Illusions.” https://neurosciencenews.com/optical-illusions-neural-network-ai-9901/ (accessed September 21, 2018).[/cbtab][/cbtabs]

Abstract

Complementary surrounds explain diverse contextual phenomena across visual modalities

Context is known to affect how a stimulus is perceived. A variety of illusions have been attributed to contextual processing—from orientation tilt effects to chromatic induction phenomena, but their neural underpinnings remain poorly understood. Here, we present a recurrent network model of classical and extraclassical receptive fields that is constrained by the anatomy and physiology of the visual cortex. A key feature of the model is the postulated existence of near- versus far- extraclassical regions with complementary facilitatory and suppressive contributions to the classical receptive field. The model accounts for a variety of contextual illusions, reveals commonalities between seemingly disparate phenomena, and helps organize them into a novel taxonomy. It explains how center-surround interactions may shift from attraction to repulsion in tilt effects, and from contrast to assimilation in induction phenomena. The model further explains enhanced perceptual shifts generated by a class of patterned background stimuli that activate the two opponent extraclassical regions cooperatively. Overall, the ability of the model to account for the variety and complexity of contextual illusions provides computational evidence for a novel canonical circuit that is shared across visual modalities.