Summary: Machine learning can predict, with significant accuracy, whether a person is a musician or not, based on fMRI data collected while subjects listened to music.

Source: University of Jyväskylä.

How your brain responds to music listening can reveal whether you have received musical training, according to new Nordic research conducted in Finland (University of Jyväskylä and AMI Center) and Denmark (Aarhus University). By applying methods of computational music analysis and machine learning to brain imaging data collected during music listening, the researchers were able to predict with significant accuracy whether the listeners were musicians or not.

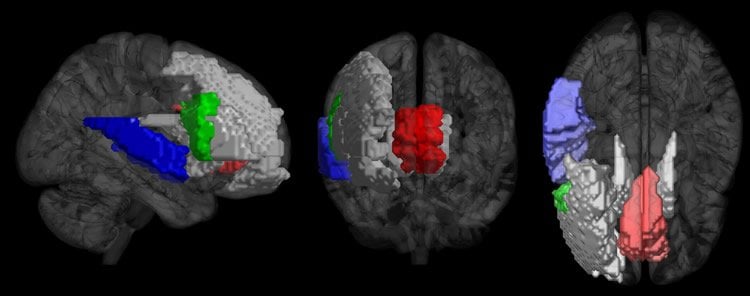

These results underscore the striking impact of musical training on our neural responses to music: musicians’ brains can be discriminated from nonmusicians’ brains despite other independent factors such as musical preference and familiarity. The research also revealed that the brain areas that best predict musicianship lie predominantly in the frontal and temporal areas of the brain’s right hemisphere. These findings are consistent with previous work on how the brain processes certain acoustic characteristics of music as well as intonation in speech. The paper was published on January 15 in the journal Scientific Reports.

The study used functional magnetic resonance imaging (fMRI) brain data collected by Prof. Elvira Brattico’s team (previously at the University of Helsinki and currently at Aarhus University) from 18 musicians and 18 non-musicians while they attentively listened to music of different genres. Computational algorithms were applied to extract musical features from the presented music.

“A novel feature of our approach was that, instead of relying on static representations of brain activity, we modelled how music is processed in the brain over time,” explains Pasi Saari, post-doctoral researcher at the University of Jyväskylä and the main author of the study. “Taking the temporal dynamics into account was found to improve the results remarkably.” As the last step of modelling, the researchers used machine learning to form a model that predicts musicianship from a combination of brain regions.

The machine learning model was able to predict the listeners’ musicianship with 77% accuracy, a result that is on a par with similar studies on participant classification with, for example, clinical populations of brain-damaged patients. The areas where music processing best predicted musicianship resided mostly in the right hemisphere, and included areas previously found to be associated with engagement and attention, processing of musical conventions, and processing of music-related sound features (e.g. pitch and tonality).

“These areas can be regarded as core structures in music processing which are most affected by intensive, lifelong musical training,” states Iballa Burunat, a co-author of the study. In these areas, the processing of higher-level features such as tonality and pulse was the best predictor of musicianship, suggesting that musical training affects particularly the processing of these aspects of music.

“The novelty of our approach is the integration of computational acoustic feature extraction with functional neuroimaging measures, obtained in a realistic music-listening environment, and taking into account the dynamics of neural processing. It represents a significant contribution that complements recent brain-reading methods which decode participant information from brain activity in realistic conditions,” concludes Petri Toiviainen, the senior author of the study. The research was funded by the Academy of Finland and the Danish National Research Foundation.

Source: Petri Toiviainen – University of Jyväskylä

Publisher: Organized by NeuroscienceNews.com.

Image Source: NeuroscienceNews.com image is credited to the researchers.

Original Research: Open access research in Scientific Reports.

doi:10.1038/s41598-018-19177-5

MLA: University of Jyväskylä. “Your Brain Responses to Music Reveal if You Are a Musician or Not.” NeuroscienceNews. NeuroscienceNews, 23 January 2018. <https://neurosciencenews.com/musician-brain-responses-8347/>.

APA: University of Jyväskylä (2018, January 23). Your Brain Responses to Music Reveal if You Are a Musician or Not. NeuroscienceNews. Retrieved January 23, 2018 from https://neurosciencenews.com/musician-brain-responses-8347/

Chicago: University of Jyväskylä. “Your Brain Responses to Music Reveal if You Are a Musician or Not.” https://neurosciencenews.com/musician-brain-responses-8347/ (accessed January 23, 2018).

Abstract

Decoding Musical Training from Dynamic Processing of Musical Features in the Brain

Pattern recognition on neural activations from naturalistic music listening has been successful at predicting neural responses of listeners from musical features, and vice versa. Inter-subject differences in the decoding accuracies have arisen partly from musical training that has widely recognized structural and functional effects on the brain. We propose and evaluate a decoding approach aimed at predicting the musicianship class of an individual listener from dynamic neural processing of musical features. Whole brain functional magnetic resonance imaging (fMRI) data was acquired from musicians and nonmusicians during listening of three musical pieces from different genres. Six musical features, representing low-level (timbre) and high-level (rhythm and tonality) aspects of music perception, were computed from the acoustic signals, and classification into musicians and nonmusicians was performed on the musical feature and parcellated fMRI time series. Cross-validated classification accuracy reached 77% with nine regions, comprising frontal and temporal cortical regions, caudate nucleus, and cingulate gyrus. The processing of high-level musical features at right superior temporal gyrus was most influenced by listeners’ musical training. The study demonstrates the feasibility to decode musicianship from how individual brains listen to music, attaining accuracy comparable to current results from automated clinical diagnosis of neurological and psychological disorders.
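
For readers curious what "cross-validated classification" on musical-feature and brain-region time series might look like in practice, the following is a minimal sketch on synthetic data. It is not the authors' pipeline. The lagged-correlation features, the nearest-centroid classifier, the leave-one-out scheme, the coupling strengths, and all function names are assumptions chosen for illustration. Only the group sizes (18 musicians, 18 nonmusicians) come from the article.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def lagged_features(stimulus, region, max_lag=2):
    """Correlate the musical feature with the region signal at several lags.

    Lag k pairs stimulus[t] with region[t + k], a crude stand-in for
    modelling how processing unfolds over time.
    """
    n = len(stimulus)
    return [pearson(stimulus[: n - lag], region[lag:]) for lag in range(max_lag + 1)]


def simulate_region(rng, stimulus, coupling):
    """Synthetic region signal that follows the stimulus with a one-sample delay.

    `coupling` is a made-up parameter, not a value from the study.
    """
    region = [rng.gauss(0, 1)]
    for t in range(1, len(stimulus)):
        region.append(coupling * stimulus[t - 1] + rng.gauss(0, 1))
    return region


def centroid(rows):
    """Column-wise mean of a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def leave_one_out(X, y):
    """Leave-one-subject-out cross-validation with a nearest-centroid classifier."""
    predictions = []
    for i in range(len(X)):
        train = [(x, label) for j, (x, label) in enumerate(zip(X, y)) if j != i]
        centroids = {
            label: centroid([x for x, l in train if l == label])
            for label in set(y)
        }
        # Assign the held-out subject to the closest class centroid.
        predictions.append(min(centroids, key=lambda l: math.dist(X[i], centroids[l])))
    accuracy = sum(p == t for p, t in zip(predictions, y)) / len(y)
    return accuracy, predictions


rng = random.Random(0)
stimulus = [rng.gauss(0, 1) for _ in range(200)]  # one hypothetical musical feature

X, y = [], []
for label, coupling in (("musician", 0.8), ("nonmusician", 0.2)):  # assumed effect sizes
    for _ in range(18):
        region = simulate_region(rng, stimulus, coupling)
        X.append(lagged_features(stimulus, region))
        y.append(label)

accuracy, predictions = leave_one_out(X, y)
print(f"Leave-one-out accuracy on synthetic data: {accuracy:.2f}")
```

Because each subject is held out in turn and classified by a model fitted on the remaining 35, the reported accuracy estimates how well the classifier generalizes to an unseen listener. The synthetic groups here are separated far more cleanly than real fMRI data would be, so the printed accuracy says nothing about the study's 77% figure.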