Summary: A recurrent neural network algorithm demonstrates that short-term synaptic plasticity can support the short-term maintenance of information, provided that the memory delay period is sufficiently short.

Source: University of Chicago

Research by neuroscientists at the University of Chicago shows how short-term, working memory uses networks of neurons differently depending on the complexity of the task at hand.

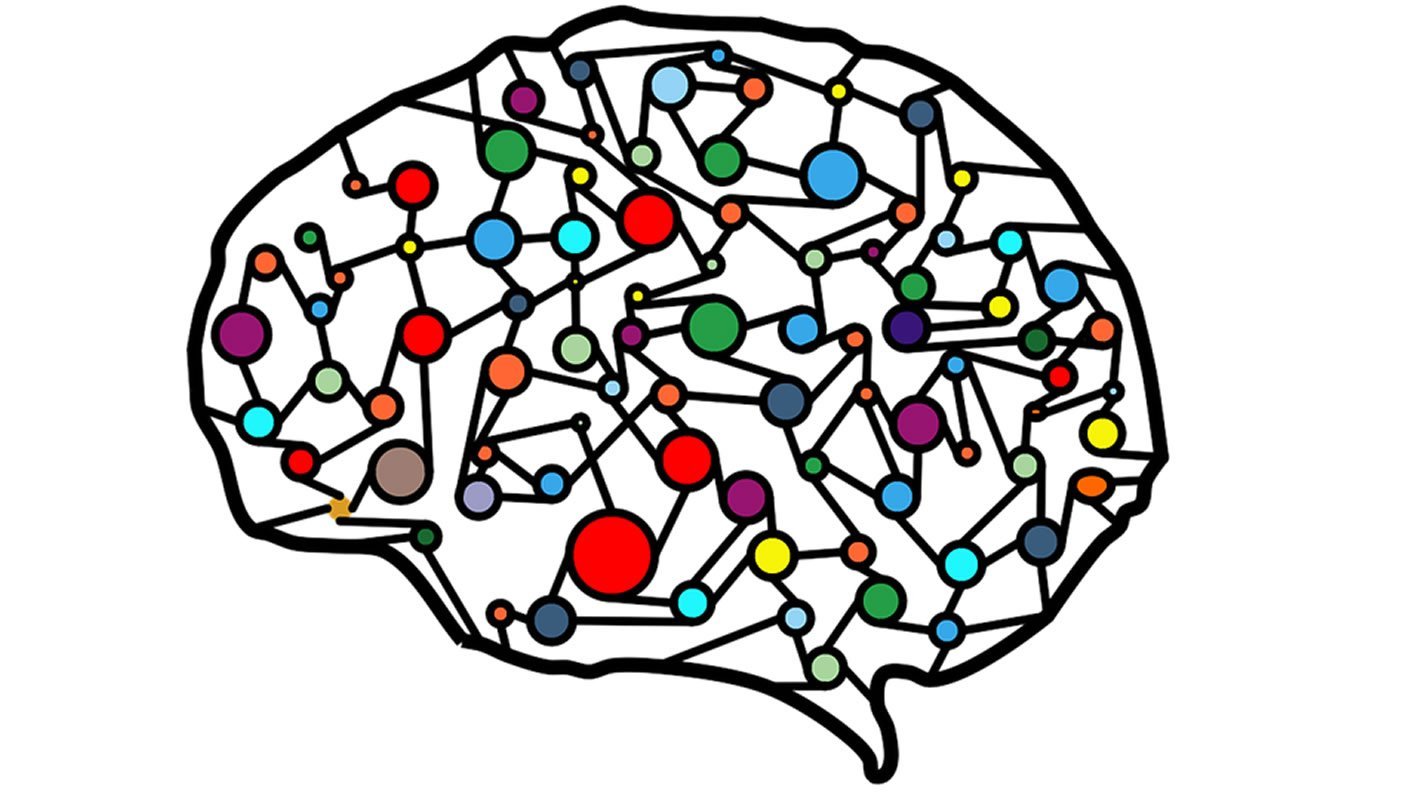

The researchers used modern artificial intelligence (AI) techniques to train computational neural networks to solve a range of complex behavioral tasks that required storing information in short-term memory. The AI networks were based on the biological structure of the brain and revealed two distinct processes involved in short-term memory. The first is a “silent” process in which the brain stores short-term memories without ongoing neural activity; the second is a more active process in which circuits of neurons fire continuously.

The study, led by Nicolas Masse, PhD, a senior scientist at UChicago, and senior author David Freedman, PhD, professor of neurobiology, was published this week in Nature Neuroscience.

“Short-term memory is likely composed of many different processes, from very simple ones where you need to recall something you saw a few seconds ago, to more complex processes where you have to manipulate the information you are holding in memory,” Freedman said. “We’ve identified how two different neural mechanisms work together to solve different kinds of memory tasks.”

Active vs silent memory

Many daily tasks require working memory: information you need to do something in the moment but are likely to forget later. Sometimes you actively remember something on purpose, like when you’re doing a math problem in your head or trying to remember a phone number before you have a chance to write it down. You also passively absorb information that you can recall later even if you didn’t make a point of remembering it, as when someone asks whether you saw a particular person in the hallway.

Neuroscientists have learned a lot about how the brain represents information held in memory by monitoring the patterns of electrical activity coursing through the brains of animals as they perform tasks that require short-term memory. Recording from individual brain cells lets researchers measure how the cells’ activity changes as the animals perform the tasks.

But Freedman said he and his team were surprised that during certain tasks that required information to be held in memory, their experiments found neural circuits to be unusually quiet. This led them to speculate that these “silent” memories might reside in temporary changes in the strength of connections, or synapses, between neurons.

The problem is that current technology cannot measure what happens in synapses during these “silent” periods in a living animal’s brain. So Masse, Freedman and their team have been developing AI approaches that use data from the animal experiments to design networks that simulate how the neurons in a real brain connect with each other. They can then train the networks to solve the same kinds of tasks studied in the animal experiments.

During experiments with these biologically inspired neural networks, the researchers observed two distinct processes at play in short-term memory. One, called persistent neuronal activity, was especially evident during more complex, but still short-term, tasks. When a neuron receives an input, it generates a brief electrical spike. Neurons form synapses with other neurons, so when one neuron fires, it can trigger a chain reaction that makes other neurons fire. Usually, this pattern of activity stops once the input is gone, but the AI model showed that during certain tasks, some circuits of neurons continued firing even after the input was removed, like a reverberation or echo. This persistent activity appeared to be especially important for more complex problems that required the information held in memory to be manipulated in some way.
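The reverberation described above can be illustrated with a toy model far simpler than the trained networks in the study: a single firing-rate unit that feeds back onto itself. This is an illustrative sketch, not the authors' model; the sigmoid nonlinearity, the recurrent weight `w_rec`, the 10 ms time constant and the stimulus timing are all hypothetical choices. With weak self-excitation the rate falls back to baseline once the input ends; with strong self-excitation the unit settles into a high-activity state that outlasts the input.

```python
import math


def rate_nonlinearity(x: float, gain: float = 10.0, threshold: float = 0.5) -> float:
    """Saturating sigmoid mapping total input to a firing rate in [0, 1] (illustrative)."""
    return 1.0 / (1.0 + math.exp(-gain * (x - threshold)))


def simulate_rate_unit(w_rec: float, t_total_ms: int = 500, stim_on_ms: int = 100,
                       stim_off_ms: int = 200, tau_ms: float = 10.0) -> list[float]:
    """Euler-integrate tau * dr/dt = -r + f(w_rec * r + I(t)) with a 1 ms step.

    I(t) = 1 while the stimulus is on and 0 otherwise; w_rec is the hypothetical
    strength of the unit's recurrent (self-excitatory) connection.
    Returns the firing rate at each millisecond.
    """
    dt_ms = 1.0
    r = 0.0
    trace = []
    for t in range(t_total_ms):
        stim = 1.0 if stim_on_ms <= t < stim_off_ms else 0.0
        r += (dt_ms / tau_ms) * (-r + rate_nonlinearity(w_rec * r + stim))
        trace.append(r)
    return trace


weak = simulate_rate_unit(w_rec=0.4)    # too weak to sustain itself
strong = simulate_rate_unit(w_rec=1.0)  # strong enough to keep reverberating
print(f"end of stimulus: weak={weak[199]:.3f}, strong={strong[199]:.3f}")
print(f"end of 300 ms delay: weak={weak[-1]:.3f}, strong={strong[-1]:.3f}")
```

Both units respond to the stimulus, but only the strongly recurrent one is still active at the end of the delay. In this toy, the memory lives entirely in ongoing firing, so it can be read out at any moment but requires continuous activity to persist.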

The researchers also saw a second process that explained how the brain could keep information in memory without persistent activity, matching the quiet periods they had observed in their brain recording experiments. It resembles the way the brain stores long-term memories in complex networks of connections among many neurons. As the brain learns new information, these connections are strengthened, rerouted, or removed, a concept known as plasticity. The AI models showed that during the silent periods of memory, the brain can use a short-term form of plasticity in the synaptic connections between neurons to remember information temporarily.
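The “silent” alternative can be sketched with the Tsodyks-Markram model of short-term synaptic plasticity, in which a synapse’s efficacy is the product of a release probability `u` (which facilitates with each spike) and a fraction of available resources `x` (which depletes). For illustration only, we assume a facilitating synapse with `U = 0.15`, `tau_u = 1500` ms and `tau_x = 200` ms, driven at a hypothetical 40 Hz; these are not presented as the study’s exact equations or parameters. A presynaptic neuron fires during the stimulus and then falls completely silent, yet its outgoing synapse stays stronger than that of a neuron that never fired.

```python
def simulate_synapse(rate_hz: float, stim_ms: int = 250, delay_ms: int = 6000,
                     U: float = 0.15, tau_u: float = 1500.0,
                     tau_x: float = 200.0) -> list[float]:
    """Euler-integrate rate-based short-term plasticity with a 1 ms step.

    du/dt = (U - u) / tau_u + U * (1 - u) * r   (facilitation)
    dx/dt = (1 - x) / tau_x - u * x * r         (depression)
    The presynaptic rate r is `rate_hz` during the stimulus and 0 during the delay.
    All parameter values are illustrative assumptions.
    Returns the synaptic efficacy u * x at each millisecond.
    """
    dt_ms = 1.0
    u, x = U, 1.0  # resting state: baseline release probability, full resources
    efficacy = []
    for t in range(stim_ms + delay_ms):
        r = rate_hz / 1000.0 if t < stim_ms else 0.0  # spikes per ms; silent delay
        du = (U - u) / tau_u + U * (1.0 - u) * r
        dx = (1.0 - x) / tau_x - u * x * r
        u += dt_ms * du
        x += dt_ms * dx
        efficacy.append(u * x)
    return efficacy


cued = simulate_synapse(rate_hz=40.0)   # neuron driven by the remembered item
uncued = simulate_synapse(rate_hz=0.0)  # neuron that stayed silent throughout
for delay in (500, 1500, 3000, 6000):
    t = 250 + delay - 1  # last millisecond of a delay of this length
    print(f"delay {delay:>4} ms: efficacy difference = {cued[t] - uncued[t]:.3f}")
```

No neuron is active during the delay, but the cued synapse remains more effective than the uncued one, so a downstream readout could in principle still tell which item was shown. The difference decays with `tau_u`, so in this toy the hidden trace is only usable when the delay is short relative to that time constant, echoing the abstract’s condition that the memory delay period be sufficiently short.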

Both of these forms of short-term memory last from a few seconds up to a few minutes. Some of the information used in working memory may end up in long-term storage, but most of it fades away with time.

“It’s like writing something with your finger on a fogged-up mirror instead of writing it with a permanent marker,” Masse said.

Complementary fields of research

The study demonstrates how valuable AI has become to neuroscience, and how the two fields inform each other. Freedman said that artificial neural networks are often more intelligent and easier to train on complex tasks when they are modeled after the real brain. This also makes biologically inspired AI networks better platforms for testing ideas about how the real brain functions.

“These two fields are really benefitting one another,” he said. “Insights from neuroscience experiments are helping create smarter AI and studying circuits in artificial networks is helping answer fundamental questions about the brain.”

Media Contacts:

Matt Wood – University of Chicago

Image Source:

The image is in the public domain.

Original Research: Closed access

“Circuit mechanisms for the maintenance and manipulation of information in working memory”. Nicolas Y. Masse, Guangyu R. Yang, H. Francis Song, Xiao-Jing Wang & David J. Freedman.

Nature Neuroscience. doi:s41593-019-0414-3

Abstract

Circuit mechanisms for the maintenance and manipulation of information in working memory

Recently it has been proposed that information in working memory (WM) may not always be stored in persistent neuronal activity but can be maintained in ‘activity-silent’ hidden states, such as synaptic efficacies endowed with short-term synaptic plasticity. To test this idea computationally, we investigated recurrent neural network models trained to perform several WM-dependent tasks, in which WM representation emerges from learning and is not a priori assumed to depend on self-sustained persistent activity. We found that short-term synaptic plasticity can support the short-term maintenance of information, provided that the memory delay period is sufficiently short. However, in tasks that require actively manipulating information, persistent activity naturally emerges from learning, and the amount of persistent activity scales with the degree of manipulation required. These results shed insight into the current debate on WM encoding and suggest that persistent activity can vary markedly between short-term memory tasks with different cognitive demands.