Summary: Using only direct brain stimulation, game players navigate through simple computerized mazes, a new study reports.

Source: University of Washington.

In the Matrix film series, Keanu Reeves plugs his brain directly into a virtual world that sentient machines have designed to enslave mankind.

The Matrix plot may be dystopian fantasy, but University of Washington researchers have taken a first step in showing how humans can interact with virtual realities via direct brain stimulation.

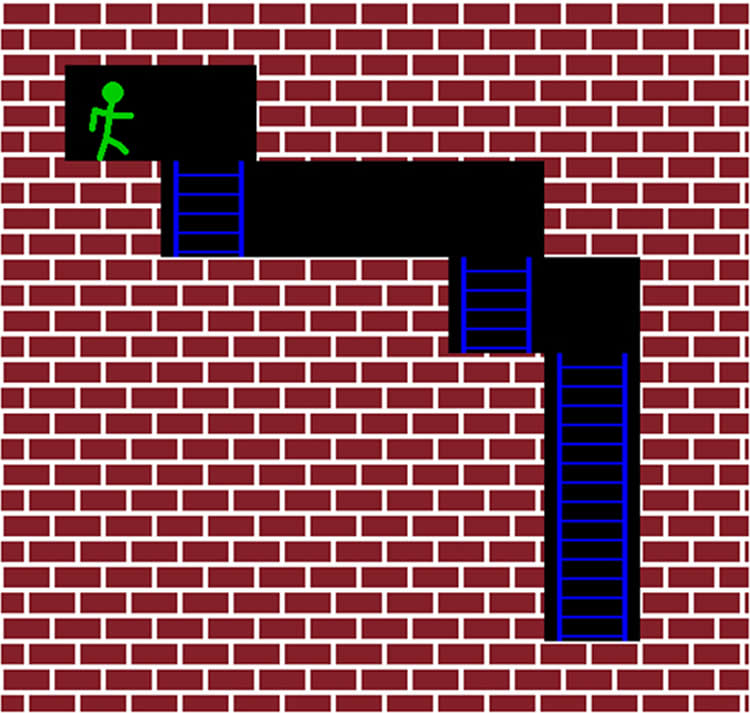

In a paper published online Nov. 16 in Frontiers in Robotics and AI, they describe the first demonstration of humans playing a simple, two-dimensional computer game using only input from direct brain stimulation — without relying on any usual sensory cues from sight, hearing or touch.

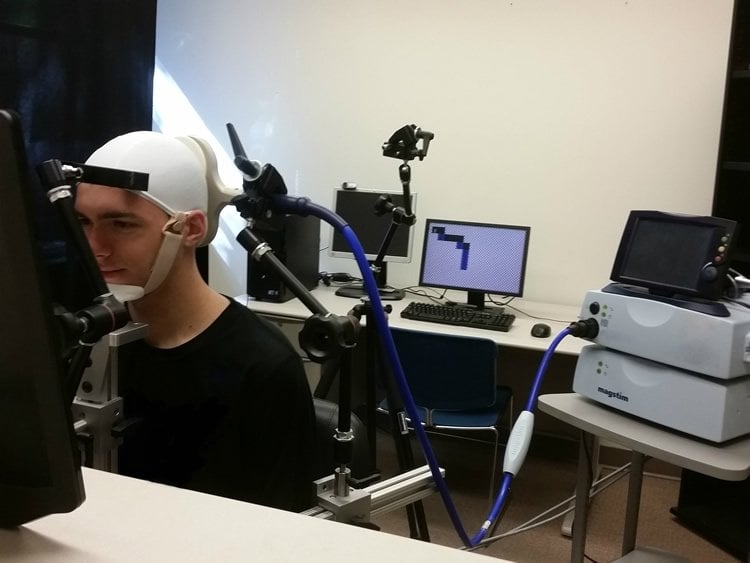

The subjects had to navigate 21 different mazes, choosing at each step to move forward or down based on whether they sensed a visual stimulation artifact called a phosphene, which is perceived as a blob or bar of light. To signal which direction to move, the researchers generated a phosphene through transcranial magnetic stimulation, a well-known technique that uses a magnetic coil placed near the skull to directly and noninvasively stimulate a specific area of the brain.

“The way virtual reality is done these days is through displays, headsets and goggles, but ultimately your brain is what creates your reality,” said senior author Rajesh Rao, UW professor of Computer Science & Engineering and director of the Center for Sensorimotor Neural Engineering.

“The fundamental question we wanted to answer was: Can the brain make use of artificial information that it’s never seen before that is delivered directly to the brain to navigate a virtual world or do useful tasks without other sensory input? And the answer is yes.”

The five test subjects made the right moves in the mazes 92 percent of the time when they received the input via direct brain stimulation, compared to 15 percent of the time when they lacked that guidance.

The simple game demonstrates one way that novel information from artificial sensors or computer-generated virtual worlds can be successfully encoded and delivered noninvasively to the human brain to solve useful tasks. It employs a technology commonly used in neuroscience to study how the brain works — transcranial magnetic stimulation — to instead convey actionable information to the brain.

The test subjects also got better at the navigation task over time, suggesting that they were able to learn to better detect the artificial stimuli.

“We’re essentially trying to give humans a sixth sense,” said lead author Darby Losey, a 2016 UW graduate in computer science and neurobiology who now works as a staff researcher for the Institute for Learning & Brain Sciences (I-LABS). “So much effort in this field of neural engineering has focused on decoding information from the brain. We’re interested in how you can encode information into the brain.”

The initial experiment used binary information — whether a phosphene was present or not — to let the game players know whether there was an obstacle in front of them in the maze. In the real world, even that type of simple input could help blind or visually impaired individuals navigate.
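The one-bit scheme can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' software: the grid layout, the function names, and the rule that a perceived phosphene means "move down" while no phosphene means "move forward" are all hypothetical choices made for the example.

```python
from typing import List, Tuple

# Hypothetical maze encoding: 0 = open cell, 1 = wall.
Grid = List[List[int]]


def obstacle_ahead(grid: Grid, row: int, col: int) -> bool:
    """Return True if the cell in front (one column to the right) is blocked.

    Running off the right edge of the grid counts as blocked.
    """
    nxt = col + 1
    return nxt >= len(grid[row]) or grid[row][nxt] == 1


def choose_move(phosphene_perceived: bool) -> str:
    """Assumed decision rule: a phosphene signals an obstacle, so go down."""
    return "down" if phosphene_perceived else "forward"


def navigate(grid: Grid, start: Tuple[int, int], steps: int) -> List[Tuple[int, int]]:
    """Walk the maze using only the one-bit obstacle signal at each step."""
    row, col = start
    path = [(row, col)]
    for _ in range(steps):
        # In the experiment this bit would decide whether to stimulate;
        # here it is passed straight to the decision rule.
        signal = obstacle_ahead(grid, row, col)
        if choose_move(signal) == "forward":
            col += 1
        elif row + 1 < len(grid):
            row += 1
        else:
            break  # nowhere left to go in this toy maze
        path.append((row, col))
    return path
```

The point of the sketch is that a player who never sees the maze can still trace a correct path, provided the single bit delivered at each step is reliable.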

Theoretically, any of a variety of sensors on a person’s body — from cameras to infrared, ultrasound, or laser rangefinders — could convey information about what is surrounding or approaching the person in the real world to a direct brain stimulator that gives that person useful input to guide their actions.

“The technology is not there yet — the tool we use to stimulate the brain is a bulky piece of equipment that you wouldn’t carry around with you,” said co-author Andrea Stocco, a UW assistant professor of psychology and I-LABS research scientist. “But eventually we might be able to replace the hardware with something that’s amenable to real world applications.”

Together with other partners from outside UW, members of the research team have co-founded Neubay, a startup company aimed at commercializing their ideas and introducing neuroscience and artificial intelligence (AI) techniques that could make virtual-reality, gaming and other applications better and more engaging.

The team is currently investigating how altering the intensity and location of direct brain stimulation can create more complex visual and other sensory perceptions which are currently difficult to replicate in augmented or virtual reality.

“We look at this as a very small step toward the grander vision of providing rich sensory input to the brain directly and noninvasively,” said Rao. “Over the long term, this could have profound implications for assisting people with sensory deficits while also paving the way for more realistic virtual reality experiences.”

Co-authors include I-LABS research coordinator Justin Abernethy.

Funding: The research was funded by the W.M. Keck Foundation and the Washington Research Foundation.

Source: Jennifer Langston – University of Washington

Image Source: NeuroscienceNews.com images are credited to University of Washington.

Video Source: The video is credited to NeuralSystemsLab.

Original Research: Full open access research for “Navigating a 2D Virtual World Using Direct Brain Stimulation” by Darby M. Losey, Andrea Stocco, Justin A. Abernethy and Rajesh P. N. Rao in Frontiers in Robotics and AI. Published online November 16, 2016. doi:10.3389/frobt.2016.00072

Cite this article: University of Washington. “No Peeking: Humans Play Computer Games Using Only Direct Brain Stimulation.” NeuroscienceNews, 4 December 2016. <https://neurosciencenews.com/dbs-computer-gaming-5690/>.

Abstract

Navigating a 2D Virtual World Using Direct Brain Stimulation

Can the human brain learn to interpret inputs from a virtual world delivered directly through brain stimulation? We answer this question by describing the first demonstration of humans playing a computer game utilizing only direct brain stimulation and no other sensory inputs. The demonstration also provides the first instance of artificial sensory information, in this case depth, being delivered directly to the human brain through non-invasive methods. Our approach utilizes transcranial magnetic stimulation (TMS) of the human visual cortex to convey binary information about obstacles in a virtual maze. At certain intensities, TMS elicits visual percepts known as phosphenes, which transmit information to the subject about their current location within the maze. Using this computer–brain interface, five subjects successfully navigated an average of 92% of all the steps in a variety of virtual maze worlds. They also became more accurate in solving the task over time. These results suggest that humans can learn to utilize information delivered directly and non-invasively to their brains to solve tasks that cannot be solved using their natural senses, opening the door to human sensory augmentation and novel modes of human–computer interaction.