Providing simple neural signals through brain implants could stand in for the body’s own feedback system.

In new research that brings natural movement by artificial limbs closer to reality, UC San Francisco scientists have shown that monkeys can learn to interpret simple brain-stimulation patterns that represent the position of their hand relative to a reach target, and can then use this information to execute reaching movements precisely.

Goal-directed arm movements involving multiple joints, such as those we employ to extend and flex the arm and hand to pick up a coffee cup, are guided both by vision and by proprioception—the sensory feedback system that provides information on the body’s overall position in three-dimensional space. Previous research has shown that movement is impaired when either of these sources of information is compromised.

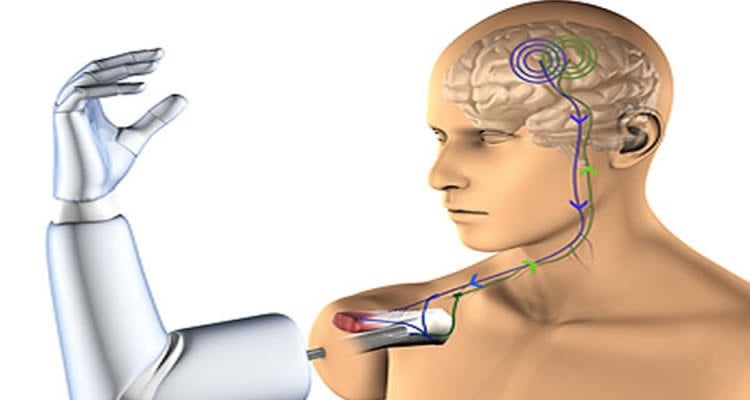

The most sophisticated artificial limbs, which are controlled via brain-machine interfaces (BMIs) that transmit neural commands to robotic mechanisms, rely on users’ visual guidance and do not yet incorporate proprioceptive feedback. These devices, though impressive, lack the fluidity and accuracy of skilled natural reaching movements, said Philip Sabes, PhD, senior author of the new study, published November 24, 2014, in the Advance Online Edition of Nature Neuroscience.

“State-of-the-art BMIs generate movements that are slow and labored: they veer around a lot, with many corrections,” said Sabes, whose research to improve prosthetics has been funded by the REPAIR (Reorganization and Plasticity to Accelerate Injury Recovery) initiative of the Defense Advanced Research Projects Agency (DARPA). “Achieving smooth, purposeful movements will require proprioceptive feedback.”

Many scientists have believed that solving this problem requires a “biomimetic” approach—understanding the neural signals normally employed by the body’s proprioceptive systems, and replicating them through electrical stimulation. But theoretical work by Sabes’ group over the past several years has suggested that the brain’s robust learning capacity might allow for a simpler strategy.

“The brain is remarkably good at looking for ‘temporal coincidence’—things that change together—and using that as a clue that those things belong together,” said Sabes. “So we’ve predicted that you could deliver information to the brain that’s entirely novel, and the brain would learn to figure it out if it changes moment-by-moment in tandem with something it knows a lot about, such as visual cues.”

In the new research, conducted in the Sabes laboratory by former graduate student Maria C. Dadarlat, PhD, and postdoctoral fellow Joseph E. O’Doherty, PhD, monkeys were taught to reach toward a target while both their reaching arm and the target were hidden by a tabletop. A sensor mounted on each monkey’s reaching hand detected the distance from, and direction toward, the target, and this information was used to create a “random-dot” display on a computer monitor that the monkeys could use for visual guidance.

Random-dot displays allow researchers to precisely control the usefulness of visual cues. In a “high coherence” condition, all of the dots move across the screen in one direction and at the same velocity, faithfully reflecting the direction and distance from the monkey’s hand to the target; in “low coherence” conditions, only a fraction of the dots move in this informative way, so the display is less useful for guiding movement.

Besides driving the random-dot display, information from the sensor was converted in real time into patterns of electrical stimulation, delivered through eight electrodes implanted in the monkeys’ brains.

The monkeys first learned to perform the task using just the visual display. The brain stimulation was then introduced, and the coherence of the random-dot display was gradually reduced, requiring the monkeys to rely increasingly on the brain-stimulation patterns to guide them to the target.

Because the monkeys were moving naturally, proprioception continued to tell them the absolute position of their reaching hand. The electrical signals, however, supplied information that proprioception could not: the position of the hand relative to the target. This gave Sabes and colleagues a way to test their prediction that the brain can learn to make use of “entirely novel” sources of information if that information proves useful.

That prediction proved correct. The monkeys eventually could perform the task in a dark room, guided by electrical stimulation alone, but their reaches were most efficient when they combined the information from the brain stimulation with that from the visual display. Sabes takes this as evidence that “the brain’s natural mechanisms of sensory integration ‘figured out’ the relevance of the patterns of stimulation to the visual information.”

“To use this approach to provide proprioceptive feedback from a prosthetic device,” the authors write, the brain stimulation, which in the experiment conveyed the hand’s position relative to a target, “would instead encode the state of the device with respect to the body, for example joint or endpoint position or velocity. Because these variables are also available via visual feedback, the same learning mechanisms should apply.”

In addition to funding from DARPA’s REPAIR initiative, the new research was supported by a grant from the National Institutes of Health.

Contact: Pete Farley – UCSF

Source: UCSF press release

Image Source: The image is credited to Integrum and is adapted from a previous NeuroscienceNews.com article

Original Research: Abstract for “A learning-based approach to artificial sensory feedback leads to optimal integration” by Maria C. Dadarlat, Joseph E. O’Doherty and Philip N. Sabes in Nature Neuroscience. Published online November 24, 2014. doi:10.1038/nn.3883