Summary: Our brains need only perform a few fast statistical calculations to detect the key properties of an unknown object. The findings challenge existing views of how our brains extract information from, and learn about, our environment.

Source: Zuckerman Institute

From a child snapping Legos together to a pickpocket plucking a wallet from your bag, our brains have a remarkable ability to spot new objects and figure out how to manipulate them. Scientists have long believed that the brain accomplishes this by methodically interpreting visual and textural cues, such as an object’s edges or boundaries. But a new study suggests that the human brain requires only a tiny bit of information, as well as its previous experience, to calculate a complete mental representation of a new object. These results help to explain the mental mathematics that enables us to easily know what a novel object looks like simply by touching it, or the way an object feels from sight alone.

This study, led by researchers at Columbia University, the University of Cambridge and the Central European University and reported in the journal eLife, illustrates the brain’s natural power to learn quickly and generalize.

“Our brains’ ability to single out one object from many by touch — the way pickpockets use their fingers to home in on a wallet deep inside a purse — is a broadly used skill, and key to our ability to interact with the world,” said Daniel Wolpert, PhD, a principal investigator at Columbia’s Mortimer B. Zuckerman Mind Brain Behavior Institute and the study’s co-senior author. “Our latest study exemplifies the brain’s knack for performing mathematics to infer an object’s identity.”

Nearly 40 years ago, scientists trying to understand how we identify individual objects proposed that the edges or boundaries of each item allow us to distinguish one object from the next. But Dr. Wolpert and the research team hypothesized that this explanation did not tell the whole story and was perhaps a smaller part of a much larger, more generalized principle of how the brain infers properties about its surroundings.

“We wondered whether our brains could do more, with less,” said Máté Lengyel, PhD, professor of computational neuroscience at the University of Cambridge, a research fellow at the Central European University and one of the study’s senior authors. “Perhaps they don’t need to acquire and analyze boundary information systematically in order to identify an object, but can instead work out an object’s identity by performing clever statistical analyses that also incorporate memories and experiences.”

These “clever statistical analyses,” Dr. Lengyel and his colleagues thought, could allow our brains to not only immediately identify objects we’ve encountered before, but also predict key properties of new objects we come across.

To test their object-identification hypothesis, the researchers developed a simple computer game where players watched jigsaw-like puzzle pieces stuck together in various combinations on a screen. Their task, which they generally did with ease, was to recognize which combinations appeared together more frequently.

“The players’ brains were able to gather visual information about the pairs of puzzle pieces, such as which pieces were most often found together, or which looked easiest to pull apart,” said Dr. Wolpert, who is also a professor of neuroscience at Columbia’s Vagelos College of Physicians and Surgeons.

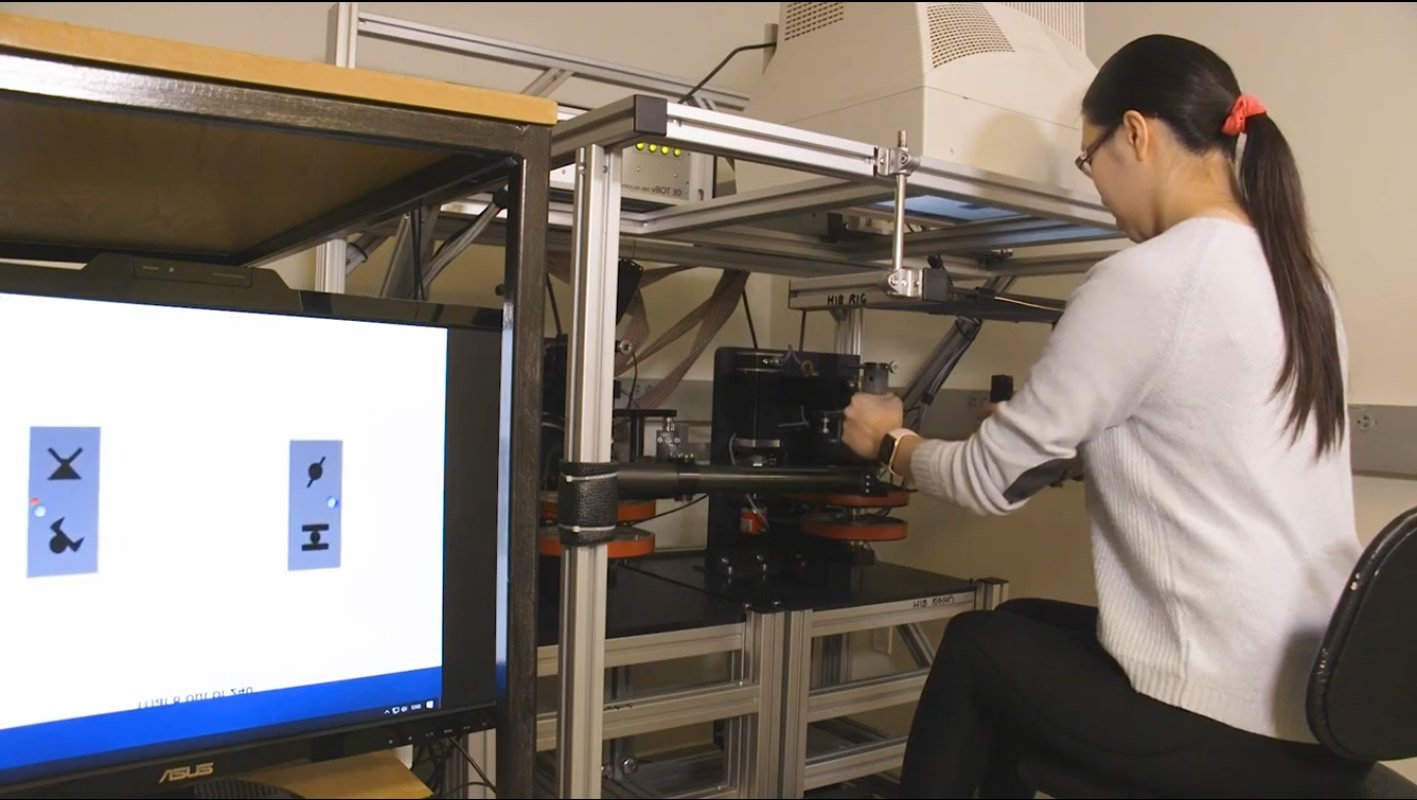

A second set of participants was given similar scenes of puzzle pieces stuck together. But instead of using their eyes to work out which pieces went together as a pair, they used their sense of touch. Scientists call this form of identification haptic perception.

The players grasped two handles mounted on a robotic device that controlled the forces they experienced when pulling apart different sets of puzzle pieces. Some combinations were easy to pull apart; others were more difficult.

After providing visual or haptic information to each of the two groups of players, the research team swapped the groups; the visual group now evaluated puzzle pieces using their sense of touch, and the haptic group now used their sense of vision.

“Our results of this swap revealed players’ adeptness to take the knowledge they had learned from one of the two modalities — either visual or haptic — and extrapolate to the other modality,” said Dr. Lengyel.

These findings challenge the conventional view of how our brains extract information from, and learn about, our environment. Even when given only minimal source material, such as a few statistics about how often two pieces appeared together or how much force it took to pull them apart, the human brain could make powerful inferences and connections.

Credit: Zuckerman Institute.

“Our study shows that our brains are wondrously adept at generalizing from one modality, such as vision, to another, such as touch,” said Dr. Wolpert. “This may be because our brains have calculated a statistical understanding of how objects behave based on our previous experiences. This study further reveals that the computations our brains perform are sufficiently powerful for achieving a multitude of cognitive feats — whether it be picking someone’s pocket or imagining the feel of a leather purse in a window display.”

Funding: This research was supported by the European Research Council (Consolidator Grant ERC-2016-COG/726090), the Royal Society (Noreen Murray Professorship in Neurobiology RP120142), the Seventh Framework Programme (Marie Curie CIG 618918), the Wellcome Trust (New Investigator Award 095621/Z/11/Z; Senior Investigator Award 097803/Z/11/Z) and the National Institutes of Health (R21 HD088731).

Media Contacts:

Anne Holden – Zuckerman Institute

Image Source:

The image is adapted from the Zuckerman Institute video.

Original Research: Open access

“Unimodal statistical learning produces multimodal object-like representations”. Gábor Lengyel, Goda Žalalytė, Alexandros Pantelides, James N Ingram, József Fiser, Máté Lengyel, Daniel M Wolpert (corresponding authors: Gábor Lengyel, József Fiser, Máté Lengyel, Daniel M Wolpert).

eLife. doi:10.7554/eLife.43942

Abstract

Unimodal statistical learning produces multimodal object-like representations

The concept of objects is fundamental to cognition and is defined by a consistent set of sensory properties and physical affordances. Although it is unknown how the abstract concept of an object emerges, most accounts assume that visual or haptic boundaries are crucial in this process. Here, we tested an alternative hypothesis that boundaries are not essential but simply reflect a more fundamental principle: consistent visual or haptic statistical properties. Using a novel visuo-haptic statistical learning paradigm, we familiarised participants with objects defined solely by across-scene statistics provided either visually or through physical interactions. We then tested them on both a visual familiarity and a haptic pulling task, thus measuring both within-modality learning and across-modality generalisation. Participants showed strong within-modality learning and ‘zero-shot’ across-modality generalisation which were highly correlated. Our results demonstrate that humans can segment scenes into objects, without any explicit boundary cues, using purely statistical information.