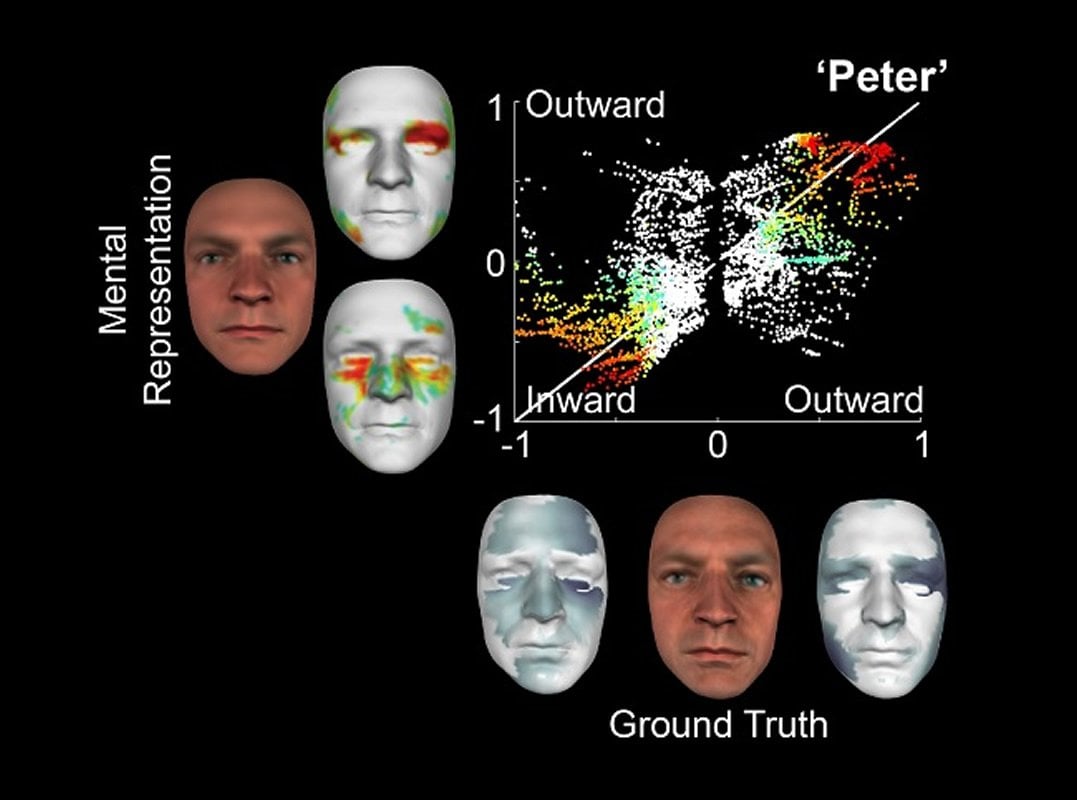

Summary: The ‘Generative Model of 3D Face Identity’ is able to reconstruct facial models using information stored in a person’s brain when recalling the familiar face of another person.

Source: University of Glasgow

In a world first, neuroscientists from the University of Glasgow have been able to construct 3D facial models using the unique information stored in an individual’s brain when recalling the face of a familiar person.

The study, which is published today in Nature Human Behaviour, will be the cornerstone for greater understanding of the brain mechanisms of face identification and could have applications for AI, gaming technology and eyewitness testimony.

A team of Glasgow scientists studied how 14 of their colleagues recognised the faces of four other colleagues, by determining which specific facial information they used to identify them from memory.

To test their theories, the researchers had volunteers compare faces that were identical in every respect (same age, gender and ethnicity) except for the information that defines the essence of their identity. By doing so, the scientists designed a methodology that was able to ‘crack the code’ of what defines visual identity and generate it with a computer program.

The scientists then devised a method which, across many trials, allowed them to reconstruct which information is specific to an individual’s identity in someone else’s memory.

Philippe Schyns, Professor of Visual Cognition at the Institute of Neuroscience and Psychology, said: “It’s difficult to understand what information people store in their memory when they recognise familiar faces. But we have developed a tool which has essentially given us a method to do just that.

“By reverse engineering the information that characterises someone’s identity, and then mathematically representing it, we were then able to render it graphically.”

The researchers designed a Generative Model of 3D Face Identity, using a database of 355 3D faces that described each face by its shape and texture. They then applied linear models to the faces to extract the shape and texture explained by the non-identity factors of sex, age and ethnicity, thereby isolating each face’s unique identity information.
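The decomposition described above can be illustrated with a small sketch. It is not the authors' implementation: the synthetic data, the 30-dimensional feature vectors, the numeric coding of the factors and the function name `split_identity` are all assumptions made for illustration. Only the count of 355 faces comes from the article. The idea is to fit a linear model that predicts face features from sex, age and ethnicity, and to treat whatever the model cannot explain as identity information.

```python
import numpy as np

def split_identity(features, factors):
    """Fit features ~ factors by least squares; return (coefficients, residuals).

    features: (n_faces, n_dims) array, e.g. flattened shape or texture values.
    factors:  (n_faces, n_factors) array coding sex, age and ethnicity.
    """
    # An intercept column lets the model capture the average face as well.
    design = np.column_stack([np.ones(len(factors)), factors])
    # Ordinary least squares: design @ coef approximates features.
    coef, *_ = np.linalg.lstsq(design, features, rcond=None)
    # The part the factors cannot explain is treated as identity information.
    identity = features - design @ coef
    return coef, identity

# Synthetic stand-in for a face database (hypothetical values throughout).
rng = np.random.default_rng(0)
n_faces, n_dims = 355, 30
factors = np.column_stack([
    rng.integers(0, 2, n_faces),    # sex, coded 0/1 for this toy example
    rng.uniform(18, 70, n_faces),   # age in years
    rng.integers(0, 2, n_faces),    # ethnicity, coded 0/1 for this toy example
]).astype(float)
features = factors @ rng.normal(size=(3, n_dims)) + rng.normal(size=(n_faces, n_dims))

coef, identity = split_identity(features, factors)
```

By construction, the residuals in `identity` are uncorrelated with the coded factors, which is what "isolating" identity means in this simplified setting.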

In the experiment, the researchers asked observers to rate the resemblance between a remembered familiar face, and randomly generated faces that shared factors of sex, age and ethnicity, but with random identity information. To model the mental representations of these familiar faces, the researchers estimated the identity components of shape and texture from the memory of each observer.
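A toy simulation can make the logic of this experiment concrete. The sketch below is an assumption-laden illustration, not the published analysis: the observer is simulated, the trial count, the noise level and the 20 identity components are invented, and linear regression is used here as one simple way to relate random stimuli to ratings. It shows how a hidden "remembered" identity can be estimated from resemblance ratings given to randomly generated faces.

```python
import numpy as np

rng = np.random.default_rng(1)
n_trials, n_components = 2000, 20

# Hypothetical remembered identity, unknown to the experimenter.
target = rng.normal(size=n_components)

# Random identity components presented on each trial.
stimuli = rng.normal(size=(n_trials, n_components))

# Simulated observer: ratings rise with similarity to the memory, plus noise.
ratings = stimuli @ target + rng.normal(scale=2.0, size=n_trials)

# Regress the ratings on the stimulus components (with an intercept).
design = np.column_stack([np.ones(n_trials), stimuli])
beta, *_ = np.linalg.lstsq(design, ratings, rcond=None)
estimate = beta[1:]  # drop the intercept to keep the identity components

# Correlation between the estimated and the true remembered identity.
similarity = np.corrcoef(estimate, target)[0, 1]
```

In this simulation the estimate approaches the hidden target as the number of trials grows, which is why the method relies on many trials per observer.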

As well as identification, the scientists were then able to use the mathematical model to generate new faces by taking the identity information unique to the familiar faces and combining it with a change to their age, sex, ethnicity or a combination of those factors.
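Under a linear model of the kind described earlier, this recombination amounts to keeping the identity component fixed while changing the factor values. The following is a minimal sketch under that assumption; the coefficient matrix, the six-dimensional features, the factor coding and the function name `render_face` are hypothetical and stand in for a fitted model.

```python
import numpy as np

def render_face(coef, factors, identity):
    """Combine factor-driven features with a fixed identity component.

    coef:     (1 + n_factors, n_dims) array; the first row is the intercept.
    factors:  (n_factors,) array coding sex, age and ethnicity.
    identity: (n_dims,) array of identity-specific features.
    """
    design = np.concatenate([[1.0], factors])
    return design @ coef + identity

# Hypothetical fitted coefficients and identity component.
rng = np.random.default_rng(2)
n_dims = 6
coef = rng.normal(size=(4, n_dims))  # rows: intercept, sex, age, ethnicity
identity = rng.normal(size=n_dims)

# The same identity rendered at age 30 and at age 60.
original = render_face(coef, np.array([0.0, 30.0, 1.0]), identity)
older = render_face(coef, np.array([0.0, 60.0, 1.0]), identity)
```

Because the model is linear, the two renderings differ only by the age coefficients scaled by the 30-year change, while the identity component is untouched.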

Funding: The paper, ‘Modelling Face Memory Reveals Task-Generalizable Representations,’ is published in Nature Human Behaviour and was funded by the Wellcome (Senior Investigator Award, UK; 107802) and the Multidisciplinary University Research Initiative/Engineering and Physical Sciences Research Council (USA, UK; 172046-01).

Media Contacts:

Ali Howard – University of Glasgow

Image Source:

The image is credited to Philippe Schyns et al.

Original Research: Closed access

“Modelling face memory reveals task-generalizable representations”. Jiayu Zhan, Oliver G. B. Garrod, Nicola van Rijsbergen & Philippe G. Schyns.

Nature Human Behaviour. doi:10.1038/s41562-019-0625-3

Abstract

Modelling face memory reveals task-generalizable representations

Current cognitive theories are cast in terms of information-processing mechanisms that use mental representations. For example, people use their mental representations to identify familiar faces under various conditions of pose, illumination and ageing, or to draw resemblance between family members. Yet, the actual information contents of these representations are rarely characterized, which hinders knowledge of the mechanisms that use them. Here, we modelled the three-dimensional representational contents of 4 faces that were familiar to 14 participants as work colleagues. The representational contents were created by reverse-correlating identity information generated on each trial with judgements of the face’s similarity to the individual participant’s memory of this face. In a second study, testing new participants, we demonstrated the validity of the modelled contents using everyday face tasks that generalize identity judgements to new viewpoints, age and sex. Our work highlights that such models of mental representations are critical to understanding generalization behaviour and its underlying information-processing mechanisms.