Summary: Researchers report that we recognize what we are looking at by combining current stimuli with comparisons to images stored in memory.

Source: NYU Langone.

A rope coiled on a dusty trail may trigger a frightened jump by a hiker who recently stepped on a snake. Now a new study better explains how a one-time visual experience can shape perceptions afterward.

Led by neuroscientists from NYU School of Medicine and published online July 31 in eLife, the study argues that humans recognize what they are looking at by combining current sensory stimuli with comparisons to images stored in memory.

“Our findings provide important new details about how experience alters the content-specific activity in brain regions not previously linked to the representation of images by nerve cell networks,” says senior study author Biyu He, PhD, assistant professor in the departments of Neurology, Radiology, and Neuroscience and Physiology.

“The work also supports the theory that what we recognize is influenced more by past experiences than by newly arriving sensory input from the eyes,” says He, part of the Neuroscience Institute at NYU Langone Health.

She says this idea becomes more important as evidence mounts that hallucinations suffered by patients with post-traumatic stress disorder or schizophrenia occur when stored representations of past images overwhelm what they are looking at presently.

Glimpse of a Tiger

A key question in neurology is how the brain perceives, for instance, that a tiger is nearby based on a glimpse of orange amid the jungle leaves. If the brains of our ancestors matched this incomplete picture with previous danger, they would be more likely to hide, survive and have descendants. Thus, the modern brain finishes perception puzzles without all the pieces.

Most past vision research, however, has been based on experiments in which clear images were shown to subjects in perfect lighting, says He. The current study instead analyzed visual perception as subjects looked at black-and-white images degraded until they were difficult to recognize. Nineteen subjects were shown 33 such obscured “Mooney images” – 17 of animals and 16 of manmade objects – in a particular order. They viewed each obscured image six times, then the corresponding clear version once to achieve recognition, and then the obscured image six more times. After each obscured image was presented, subjects were asked whether they could name the object shown.

As the subjects sought to recognize images, the researchers “took pictures” of their brains every two seconds using functional magnetic resonance imaging (fMRI). The technology detects increases in blood flow, which are known to occur as brain cells become active during a specific task. The team’s 7 Tesla scanner offered a more than three-fold improvement in resolution over past studies using standard 3 Tesla scanners, allowing extremely precise fMRI-based measurement of vision-related nerve circuit activity patterns.

After seeing the clear version of each image, the study subjects were more than twice as likely to recognize the obscured version as they had been before seeing the clear version. They had been “forced” to use stored representations of clear images, called priors, to better recognize related, blurred versions, says He.

The authors then used mathematical tricks to create a 2D map that measured not nerve cell activity in each tiny section of the brain as it perceived images, but how similar nerve network activity patterns were across different brain regions. Nerve cell networks in the brain that represented images more similarly landed closer to each other on the map.
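The map-making idea can be illustrated with a small sketch. The article does not name the method, so this assumes one common approach: summarize each brain region by how dissimilar its responses to different images are, compare those summaries between regions, and place the regions in 2D with classical multidimensional scaling. The data are random numbers, not study data, and every function name here is hypothetical.

```python
import numpy as np

def image_dissimilarity(patterns):
    """1 - Pearson r between every pair of image patterns.
    patterns has shape (n_images, n_voxels)."""
    return 1.0 - np.corrcoef(patterns)

def upper_triangle(matrix):
    """Flatten the entries above the diagonal into a vector."""
    i, j = np.triu_indices_from(matrix, k=1)
    return matrix[i, j]

def region_distance_matrix(region_patterns):
    """Distance between regions: 1 - Pearson r between their
    image-dissimilarity vectors (an assumed choice of measure)."""
    vectors = np.array([upper_triangle(image_dissimilarity(p))
                        for p in region_patterns])
    return 1.0 - np.corrcoef(vectors)

def classical_mds(dist, n_components=2):
    """Classical MDS: double-center squared distances, keep top eigenvectors."""
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)          # ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale

# Synthetic example: regions 0 and 1 share one image code, regions 2 and 3 another.
rng = np.random.default_rng(0)
n_images, n_voxels = 33, 50
shared_a = rng.normal(size=(n_images, n_voxels))
shared_b = rng.normal(size=(n_images, n_voxels))
regions = [
    shared_a + 0.3 * rng.normal(size=(n_images, n_voxels)),
    shared_a + 0.3 * rng.normal(size=(n_images, n_voxels)),
    shared_b + 0.3 * rng.normal(size=(n_images, n_voxels)),
    shared_b + 0.3 * rng.normal(size=(n_images, n_voxels)),
]
coords = classical_mds(region_distance_matrix(regions))  # one 2D point per region
```

In this toy setup, regions built from the same underlying image code end up near each other in `coords`, which is the property the map in the study relies on.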

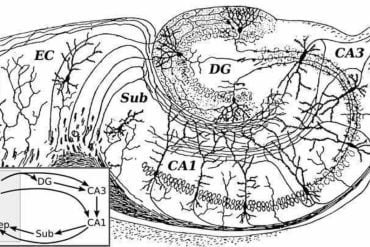

This approach revealed a stable system of brain organization that processed each image in the same steps, regardless of whether it was clear or blurry, the authors say. Early, simpler brain circuits in the visual cortex that determine edge, shape, and color clustered at one end of the map, and more complex, “higher-order” circuits known to mix past and present information to plan actions clustered at the opposite end.

These higher-order circuits included two brain networks, the default-mode network (DMN) and frontoparietal network (FPN), both linked by past studies to executing complex tasks such as planning actions, but not to visual, perceptual processing. Rather than remaining stable in the face of all images, the similarity patterns in these two networks shifted as brains went from processing unrecognized blurry images to effortlessly recognizing the same images after seeing a clear version. After previously seeing a clear version (disambiguation), neural activity patterns corresponding to each blurred image in the two networks became more distinct from the others, and more like the clear version in each case.
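The two changes described here, patterns becoming more distinct from one another and more like the clear version, can each be expressed as a simple number. The sketch below is an illustration under stated assumptions, not the authors' analysis: it uses Pearson correlation as the similarity measure, and the “before” and “after” patterns are synthetic, built so that the “after” patterns carry more of the clear-image signal. The function names are hypothetical.

```python
import numpy as np

def mean_between_image_dissimilarity(patterns):
    """Average of 1 - Pearson r over all pairs of different images."""
    corr = np.corrcoef(patterns)
    i, j = np.triu_indices_from(corr, k=1)
    return float(np.mean(1.0 - corr[i, j]))

def mean_similarity_to_clear(patterns, clear_patterns):
    """Average Pearson r between each pattern and its own clear-image pattern."""
    rs = [np.corrcoef(p, c)[0, 1] for p, c in zip(patterns, clear_patterns)]
    return float(np.mean(rs))

# Synthetic data only: 33 images, 200 voxels in one hypothetical region.
rng = np.random.default_rng(1)
n_images, n_voxels = 33, 200
clear = rng.normal(size=(n_images, n_voxels))   # image-specific clear patterns
common = rng.normal(size=n_voxels)              # response shared by all blurry images

# Before the clear image: mostly the shared response, little image-specific signal.
before = common + 0.2 * clear + 0.5 * rng.normal(size=(n_images, n_voxels))
# After the clear image: assumed to carry much more of the image-specific signal.
after = 0.5 * common + 0.8 * clear + 0.5 * rng.normal(size=(n_images, n_voxels))

distinct_before = mean_between_image_dissimilarity(before)
distinct_after = mean_between_image_dissimilarity(after)
like_clear_before = mean_similarity_to_clear(before, clear)
like_clear_after = mean_similarity_to_clear(after, clear)
```

Because the “after” patterns were constructed to contain more image-specific signal, both measures rise in this toy example; the numbers say nothing about the size of the effect in the real data.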

Strikingly, the shift in neural representation toward the perceptual prior, induced by seeing the clear image, was much more pronounced in brain regions with higher, more complex functions than in the early, simple visual processing networks. This further suggests that more of the information shaping current perceptions comes from what people have experienced before.

Along with He, the study authors were Carlos González-García and Alexis Baria of the National Institute of Neurological Disorders and Stroke, National Institutes of Health, Bethesda, Maryland, as well as Matthew Flounders and Raymond Chang of the Neuroscience Institute at NYU School of Medicine. González-García is currently a post-doctoral fellow in the Department of Experimental Psychology at Ghent University in Belgium.

Funding: This research was supported by the Intramural Research Program of the National Institutes of Health/National Institute of Neurological Disorders and Stroke, New York University Langone Medical Center, Leon Levy Foundation, Klingenstein-Simons Fellowship, and Department of State Fulbright Program. The experiment was approved by the Institutional Review Board of the National Institute of Neurological Disorders and Stroke. All subjects provided written, informed consent.

Source: Greg Williams – NYU Langone

Publisher: Organized by NeuroscienceNews.com.

Image Source: NeuroscienceNews.com image is credited to NYU School of Medicine.

Original Research: Open access research for “Content-specific activity in frontoparietal and default-mode networks during prior-guided visual perception” by Carlos González-García, Matthew W Flounders, Raymond Chang, Alexis T Baria, and Biyu J He in eLife. Published July 31 2018.

doi:10.7554/eLife.36068

MLA: NYU Langone. “Past Experience Shapes What We See More Than What We Are Looking At Now.” NeuroscienceNews. NeuroscienceNews, 31 July 2018. <https://neurosciencenews.com/experience-visual-perception-9636/>.

APA: NYU Langone. (2018, July 31). Past Experience Shapes What We See More Than What We Are Looking At Now. NeuroscienceNews. Retrieved July 31, 2018 from https://neurosciencenews.com/experience-visual-perception-9636/

Chicago: NYU Langone. “Past Experience Shapes What We See More Than What We Are Looking At Now.” https://neurosciencenews.com/experience-visual-perception-9636/ (accessed July 31, 2018).

Abstract

Content-specific activity in frontoparietal and default-mode networks during prior-guided visual perception

How prior knowledge shapes perceptual processing across the human brain, particularly in the frontoparietal (FPN) and default-mode (DMN) networks, remains unknown. Using ultra-high-field (7T) functional magnetic resonance imaging (fMRI), we elucidated the effects that the acquisition of prior knowledge has on perceptual processing across the brain. We observed that prior knowledge significantly impacted neural representations in the FPN and DMN, rendering responses to individual visual images more distinct from each other, and more similar to the image-specific prior. In addition, neural representations were structured in a hierarchy that remained stable across perceptual conditions, with early visual areas and DMN anchored at the two extremes. Two large-scale cortical gradients occur along this hierarchy: first, dimensionality of the neural representational space increased along the hierarchy; second, prior’s impact on neural representations was greater in higher-order areas. These results reveal extensive and graded influences of prior knowledge on perceptual processing across the brain.