Summary: Combining machine learning with neuroimaging data, researchers identified a brain region that appears to govern contextual associations.

Source: Johns Hopkins University

When people see a toothbrush, a car, a tree or any other individual object, their brains automatically associate it with the other things it typically appears alongside. These associations let people build context for their surroundings and form expectations about the world.

Using machine learning and brain imaging, researchers measured the extent of this “co-occurrence” phenomenon and identified the brain region involved.

The findings appear in Nature Communications.

“When we see a refrigerator, we think we’re just looking at a refrigerator, but in our mind, we’re also calling up all the other things in a kitchen that we associate with a refrigerator,” said corresponding author Mick Bonner, a Johns Hopkins University cognitive scientist. “This is the first time anyone has quantified this and identified the brain region where it happens.”

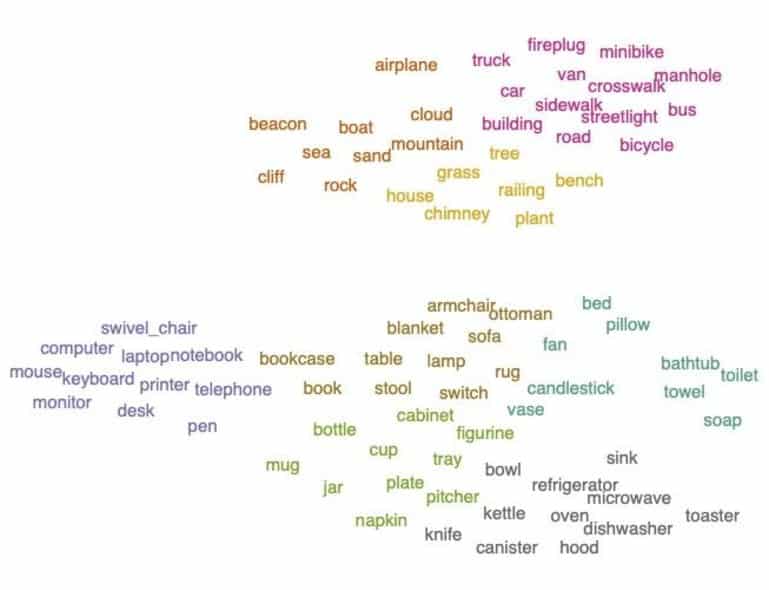

In a two-part study, Bonner and co-author Russell Epstein, a psychology professor at the University of Pennsylvania, used a database of thousands of photographs of real-world scenes in which every object was labeled. The pictures showed household scenes, city life and nature, with labels for every mug, car, tree and other object.

To quantify object co-occurrences, or how often certain objects appear with others, they created a statistical model and algorithm that estimated the likelihood of seeing a pen if you saw a keyboard, or of seeing a boat if you saw a dishwasher.
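The article does not spell out the researchers' statistical model, so the following is only a minimal sketch of the simplest estimator for "the likelihood of seeing a pen if you saw a keyboard": the conditional probability P(b | a), computed as the fraction of scenes containing object a that also contain object b. The function name `conditional_cooccurrence` and the toy scenes are hypothetical illustrations, not the study's data or code.

```python
from collections import Counter
from itertools import permutations


def conditional_cooccurrence(scenes):
    """Estimate P(b in scene | a in scene) from labeled scenes.

    Hypothetical helper: each scene is a collection of object labels, and
    repeated instances of a label within one scene are counted once.
    """
    appears = Counter()   # number of scenes containing each label
    together = Counter()  # number of scenes containing each ordered pair (a, b)
    for scene in scenes:
        labels = set(scene)
        appears.update(labels)
        together.update(permutations(labels, 2))  # ordered pairs with a != b
    return {(a, b): n / appears[a] for (a, b), n in together.items()}


# Toy labeled scenes (invented for illustration only).
scenes = [
    {"keyboard", "pen", "mug"},
    {"keyboard", "monitor"},
    {"keyboard", "pen"},
    {"boat", "water"},
    {"dishwasher", "sink"},
]
probs = conditional_cooccurrence(scenes)
print(round(probs[("keyboard", "pen")], 3))    # 0.667: pen is in 2 of 3 keyboard scenes
print(probs[("pen", "keyboard")])              # 1.0: every pen scene has a keyboard
print(probs.get(("dishwasher", "boat"), 0.0))  # 0.0: never seen together
```

Note that the measure is asymmetric: a pen strongly predicts a keyboard in this toy set, while a keyboard predicts a pen less reliably.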

With these contextual associations quantified, the researchers next attempted to map the brain region that handles the links.

While subjects' brain activity was recorded with functional magnetic resonance imaging (fMRI), the team showed them pictures of individual objects and searched for a region whose responses tracked this co-occurrence information. The region they identified lies in the visual cortex and is commonly associated with processing spatial scenes.

“When you look at a plane, this region signals sky and clouds and all the other things,” Bonner said. “This region of the brain long thought to process the spatial environment is also coding information about what things go together in the world.”

Researchers have long known that people are slower to recognize objects that appear out of context. The team believes this is the first large-scale experiment to quantify the associations between objects in the visual environment, as well as the first insight into how this visual context is represented in the brain.

“We show in a fine-grained way that the brain actually seems to represent this rich statistical information,” Bonner said.

About this neuroscience research news


Contact: Jill Rosen – Johns Hopkins University

Image: The image is credited to Johns Hopkins University

Original Research: Open access.

“Object representations in the human brain reflect the co-occurrence statistics of vision and language” by Michael F. Bonner & Russell A. Epstein. Nature Communications

Abstract

Object representations in the human brain reflect the co-occurrence statistics of vision and language

A central regularity of visual perception is the co-occurrence of objects in the natural environment. Here we use machine learning and fMRI to test the hypothesis that object co-occurrence statistics are encoded in the human visual system and elicited by the perception of individual objects.

We identified low-dimensional representations that capture the latent statistical structure of object co-occurrence in real-world scenes, and we mapped these statistical representations onto voxel-wise fMRI responses during object viewing.

We found that cortical responses to single objects were predicted by the statistical ensembles in which they typically occur, and that this link between objects and their visual contexts was made most strongly in parahippocampal cortex, overlapping with the anterior portion of the scene-selective parahippocampal place area. In contrast, a language-based statistical model of the co-occurrence of object names in written text predicted responses in neighboring regions of object-selective visual cortex.

Together, these findings show that the sensory coding of objects in the human brain reflects the latent statistics of object context in visual and linguistic experience.
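The abstract describes two steps: learning low-dimensional representations of object co-occurrence, then mapping them onto voxel-wise fMRI responses. The sketch below illustrates that general pipeline under stated assumptions; it is not the authors' method. It swaps in a common textbook approach: positive pointwise mutual information (PPMI) between labels, a truncated SVD for the low-dimensional embeddings, and ridge regression as a per-voxel encoding model evaluated with leave-one-object-out predictions. The helper names (`ppmi_matrix`, `embed`, `fit_ridge`, `loo_predictions`), the toy scenes and the simulated "voxel" responses are all hypothetical.

```python
from itertools import combinations

import numpy as np


def ppmi_matrix(scenes):
    """PPMI between labels, with probabilities estimated per scene (hypothetical helper)."""
    objects = sorted(set().union(*scenes))
    index = {name: i for i, name in enumerate(objects)}
    single = np.zeros(len(objects))
    pair = np.zeros((len(objects), len(objects)))
    for scene in scenes:
        for name in scene:
            single[index[name]] += 1
        for a, b in combinations(sorted(scene), 2):
            i, j = index[a], index[b]
            pair[i, j] += 1
            pair[j, i] += 1
    p_single = single / len(scenes)
    p_pair = pair / len(scenes)
    with np.errstate(divide="ignore"):  # log(0) -> -inf for pairs never seen together
        pmi = np.log(p_pair / np.outer(p_single, p_single))
    return objects, np.maximum(pmi, 0.0)  # clip negative and -inf values to 0


def embed(ppmi, k):
    """Low-dimensional object embeddings from a truncated SVD of the PPMI matrix."""
    u, s, _ = np.linalg.svd(ppmi)
    return u[:, :k] * np.sqrt(s[:k])


def fit_ridge(x, y, alpha):
    """Closed-form ridge regression: one weight vector per voxel (column of y)."""
    k = x.shape[1]
    return np.linalg.solve(x.T @ x + alpha * np.eye(k), x.T @ y)


def loo_predictions(x, y, alpha):
    """Predict each object's responses from a model fit on all other objects."""
    pred = np.zeros_like(y)
    for i in range(x.shape[0]):
        train = np.arange(x.shape[0]) != i
        pred[i] = x[i] @ fit_ridge(x[train], y[train], alpha)
    return pred


# Toy labeled scenes (invented for illustration only).
scenes = [
    {"refrigerator", "oven", "sink"},
    {"refrigerator", "sink", "dishwasher"},
    {"oven", "sink", "dishwasher"},
    {"plane", "sky", "cloud"},
    {"sky", "cloud", "tree"},
    {"plane", "sky"},
]
objects, ppmi = ppmi_matrix(scenes)
x = embed(ppmi, k=2)  # one row per object

# Simulated responses of 5 "voxels" to each object (synthetic, not fMRI data).
rng = np.random.default_rng(0)
w_true = rng.normal(size=(2, 5))
y = x @ w_true + 0.1 * rng.normal(size=(len(objects), 5))

pred = loo_predictions(x, y, alpha=1.0)
r = [np.corrcoef(pred[:, v], y[:, v])[0, 1] for v in range(y.shape[1])]
print(np.round(r, 2))  # held-out prediction accuracy per simulated voxel
```

Scoring predictions on held-out objects, rather than on the objects used for fitting, is what makes "responses were predicted by the statistical ensembles" a meaningful test; a real analysis would also use far more scenes, objects and voxels.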