Summary: Using artificial intelligence and neuroimaging, researchers have identified a link between mental imagery and vision. The brain uses similar visual areas for mental imagery and for vision, but it uses low-level visual areas less precisely during mental imagery than during vision.

Source: Medical University of South Carolina

Medical University of South Carolina researchers report in Current Biology that the brain uses similar visual areas for mental imagery and vision, but it uses low-level visual areas less precisely with mental imagery than with vision.

These findings add to the field by refining the methods used to study mental imagery and vision. In the long term, they could have applications for mental health disorders that affect mental imagery, such as post-traumatic stress disorder (PTSD). One symptom of PTSD is intrusive visual reminders of a traumatic event. If the neural function behind these intrusive thoughts can be better understood, better treatments for PTSD could perhaps be developed.

The study was conducted by an MUSC research team led by Thomas P. Naselaris, Ph.D., associate professor in the Department of Neuroscience. The findings by the Naselaris team help answer an age-old question about the relationship between mental imagery and vision.

“We know mental imagery is in some ways very similar to vision, but it can’t be exactly identical,” explained Naselaris. “We wanted to know specifically in which ways it was different.”

To explore this question, the researchers used machine learning, a form of artificial intelligence, together with insights from machine vision, the field in which computers view and process images.

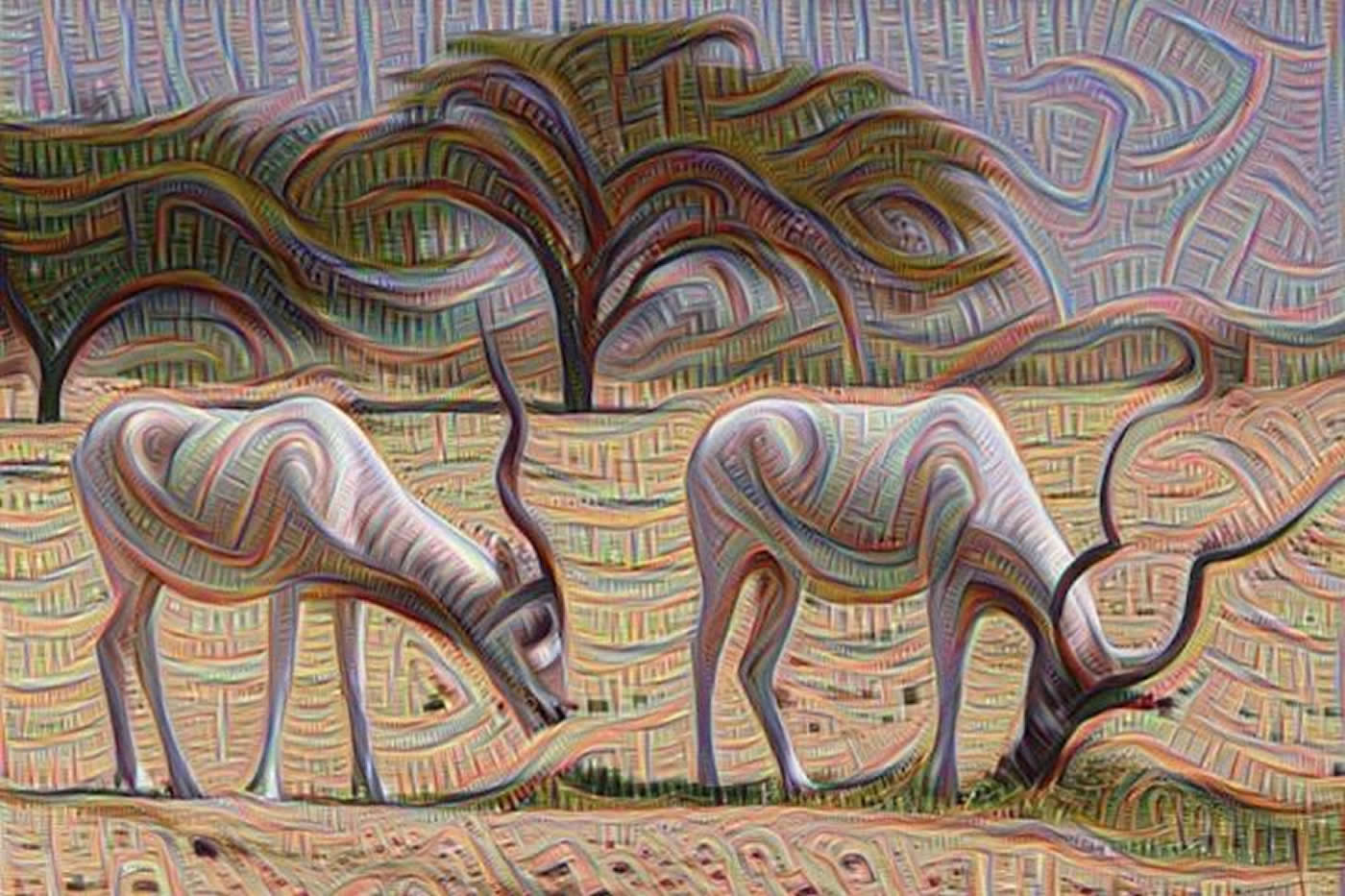

“There’s this brain-like artificial system, a neural network, that synthesizes images,” Naselaris explained. “It’s like a biological network that synthesizes images.”

The Naselaris team trained this network to see images and then took the next step of having it imagine images. Each part of the network is like a group of neurons in the brain, and each level of the network, like each group of neurons, has a different function in vision and in mental imagery.

To test whether these networks resemble the way the brain functions, the researchers performed a functional MRI (fMRI) study to see which brain areas are activated during mental imagery and during vision.

While inside the scanner, participants viewed images on a screen and were also asked to imagine images at different positions on the screen. The imaging enabled the researchers to determine which parts of the brain were active or quiet while participants viewed a combination of animate and inanimate objects.

Once these brain areas were mapped, the researchers compared the results from the computer model to human brain function.

They discovered that the computer model and the human brain behaved similarly. Areas spanning from the retina of the eye to the primary visual cortex and beyond are activated during both vision and mental imagery. However, during mental imagery, activation from the eye to the visual cortex is less precise and, in a sense, diffuse. The neural network shows the same pattern: during vision, its low-level areas, which correspond to the retina and visual cortex, have precise activation, and during mental imagery, this precise activation becomes diffuse. In areas beyond the visual cortex, activation in both the brain and the neural network is similar for vision and mental imagery. The difference lies in what happens between the retina and the visual cortex.

“When you’re imagining, brain activity is less precise,” said Naselaris. “It’s less tuned to the details, which means that the kind of fuzziness and blurriness that you experience in your mental imagery has some basis in brain activity.”
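To make that mechanism concrete, here is a toy sketch in Python. It is not the study's model: the 1-D "visual field," the Gaussian tuning widths, and every function name are hypothetical choices for illustration. It assumes, as the study's summary describes, that a higher level pools over a wider region than the low level, making it less sensitive to exact position, and that imagery regenerates low-level activity by feeding that higher-level representation back down. Under those assumptions, the imagined low-level activity profile comes out wider than the seen one.

```python
import math

N = 61  # hypothetical number of positions on a 1-D "visual field"

def gaussian(x, mu, sigma):
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))

def low_level_vision(stim_pos, sigma_low=1.5):
    # Seen image: each low-level unit responds directly, with narrow tuning
    return [gaussian(x, stim_pos, sigma_low) for x in range(N)]

def high_level(low_activity, sigma_high=6.0):
    # Higher level pools low-level activity over a wide neighborhood,
    # so it is less sensitive to the exact stimulus position
    return [sum(gaussian(x, h, sigma_high) * a for x, a in enumerate(low_activity))
            for h in range(N)]

def low_level_imagery(high_activity, sigma_high=6.0):
    # Imagined image: low-level activity is generated by feedback
    # through the same (transposed) broad pooling weights
    return [sum(gaussian(x, h, sigma_high) * a for h, a in enumerate(high_activity))
            for x in range(N)]

def spread(profile):
    # Weighted standard deviation of an activity profile (wider = less precise)
    total = sum(profile)
    mean = sum(x * a for x, a in enumerate(profile)) / total
    var = sum(a * (x - mean) ** 2 for x, a in enumerate(profile)) / total
    return math.sqrt(var)

seen = low_level_vision(30)
imagined = low_level_imagery(high_level(seen))
print("seen spread:", round(spread(seen), 2))
print("imagined spread:", round(spread(imagined), 2))
```

Because the imagined profile passes through the broad, position-insensitive pooling on the way up and again on the way back down, it is roughly the seen profile blurred by the high-level tuning, which is one simple way to picture the "fuzziness" Naselaris describes.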

Naselaris hopes these findings and developments in computational neuroscience will lead to a better understanding of mental health issues.

The fuzzy, dream-like quality of imagery helps us distinguish between our waking and dreaming moments. In people with PTSD, intrusive images of traumatic events can become debilitating and feel real in the moment. By understanding how mental imagery works, scientists may better understand mental illnesses characterized by disruptions in mental imagery.

“When people have really invasive images of traumatic events, such as with PTSD, one way to think of it is mental imagery dysregulation,” explained Naselaris. “There’s some system in your brain that keeps you from generating really vivid images of traumatic things.”

A better understanding of how this works in PTSD could provide insight into other mental health problems characterized by mental imagery disruptions, such as schizophrenia.

“That’s very long term,” Naselaris clarified.

For now, Naselaris is focusing on how mental imagery works; more research is needed to establish its connection to mental health.

A limitation of the study is that the mental images participants conjured during the experiment could not be fully recreated. Methods for translating brain activity into viewable pictures of mental images are still being developed.

This study not only explored the neurological basis of seen and imagined images but also set the stage for research into improving artificial intelligence.

“The extent to which the brain differs from what the machine is doing gives you some important clues about how brains and machines differ,” said Naselaris. “Ideally, they can point in a direction that could help make machine learning more brainlike.”

About this visual neuroscience research article

Source:

Medical University of South Carolina

Media Contacts:

Heather Woolwine – Medical University of South Carolina

Image Source:

The image is credited to Dr. Guenther Noack.

Original Research: Closed access

“Generative Feedback Explains Distinct Brain Activity Codes for Seen and Mental Images” by Jesse L. Breedlove, Ghislain St-Yves, Cheryl A. Olman, Thomas Naselaris. Current Biology

Abstract

Generative Feedback Explains Distinct Brain Activity Codes for Seen and Mental Images

Highlights

• An analysis of generative networks yields distinct codes for seen and mental images

• An encoding model for mental images predicts human brain activity during imagery

• Low-level visual areas encode mental images with less precision than seen images

• The overlap of imagery and vision is limited by high-level representations

Summary

The relationship between mental imagery and vision is a long-standing problem in neuroscience. Currently, it is not known whether differences between the activity evoked during vision and reinstated during imagery reflect different codes for seen and mental images. To address this problem, we modeled mental imagery in the human brain as feedback in a hierarchical generative network. Such networks synthesize images by feeding abstract representations from higher to lower levels of the network hierarchy. When higher processing levels are less sensitive to stimulus variation than lower processing levels, as in the human brain, activity in low-level visual areas should encode variation in mental images with less precision than seen images. To test this prediction, we conducted an fMRI experiment in which subjects imagined and then viewed hundreds of spatially varying naturalistic stimuli. To analyze these data, we developed imagery-encoding models. These models accurately predicted brain responses to imagined stimuli and enabled accurate decoding of their position and content. They also allowed us to compare, for every voxel, tuning to seen and imagined spatial frequencies, as well as the location and size of receptive fields in visual and imagined space. We confirmed our prediction, showing that, in low-level visual areas, imagined spatial frequencies in individual voxels are reduced relative to seen spatial frequencies and that receptive fields in imagined space are larger than in visual space. These findings reveal distinct codes for seen and mental images and link mental imagery to the computational abilities of generative networks.
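The receptive-field comparison described in the summary can be pictured with a deliberately simplified sketch. It is not the authors' imagery-encoding model: it assumes a hypothetical 1-D stimulus position, a single Gaussian receptive field per voxel, noise-free simulated responses, and a brute-force grid search over receptive-field center and size, with all names and numbers invented for illustration. Fitting the same hypothetical voxel once to "seen" responses and once to "imagined" responses simulated with a broader field recovers the larger imagined receptive field.

```python
import math

def gaussian(x, mu, sigma):
    # Gaussian receptive-field profile: response to a stimulus at position x
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))

def fit_rf(positions, responses, centers, sizes):
    """Grid-search the 1-D Gaussian receptive field (center, size) whose
    predictions, scaled by a least-squares gain, best match the responses."""
    best = None
    for mu in centers:
        for sigma in sizes:
            pred = [gaussian(p, mu, sigma) for p in positions]
            denom = sum(v * v for v in pred)
            if denom == 0:
                continue
            gain = sum(v * r for v, r in zip(pred, responses)) / denom
            err = sum((r - gain * v) ** 2 for v, r in zip(pred, responses))
            if best is None or err < best[0]:
                best = (err, mu, sigma)
    return best[1], best[2]

# Hypothetical stimulus positions and candidate parameter grids
positions = list(range(-10, 11))
centers = [0.5 * i for i in range(-20, 21)]
sizes = [0.5 * i for i in range(1, 17)]

# Simulated, noise-free responses of one hypothetical voxel:
# same center for seen and imagined stimuli, broader field for imagery
seen = [gaussian(p, 2.0, 1.5) for p in positions]
imagined = [gaussian(p, 2.0, 4.0) for p in positions]

print("seen RF (center, size):", fit_rf(positions, seen, centers, sizes))
print("imagined RF (center, size):", fit_rf(positions, imagined, centers, sizes))
```

Real fMRI responses are noisy and the published models are considerably richer; the sketch only shows the logic of fitting receptive fields separately to seen and imagined stimuli and comparing their sizes.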