Robots do many things formerly done only by humans, from bartending and farming to driving cars. Now a Dartmouth researcher and his colleagues have invented a “smart” paint spray can that robotically reproduces photographs as large-scale murals.

The computerized technique, which basically spray paints a photo, isn’t likely to spawn a wave of giant graffiti, but it can be used in digital fabrication, digital and visual arts, artistic stylization and other applications.

The findings appear in the journal Computers & Graphics. The project was a collaboration between ETH Zurich, Disney Research Zurich, Dartmouth College and Columbia University.

The “smart” spray can system is a novel twist on computer-aided painting, which originated in the early 1960s and is a well-studied subject among scientists and artists. Spray paint is affordable and easy to use, making large-scale spray painted murals common in modern urban culture. But covering walls of buildings and other large surfaces can be logistically and technically difficult even for skilled artists.

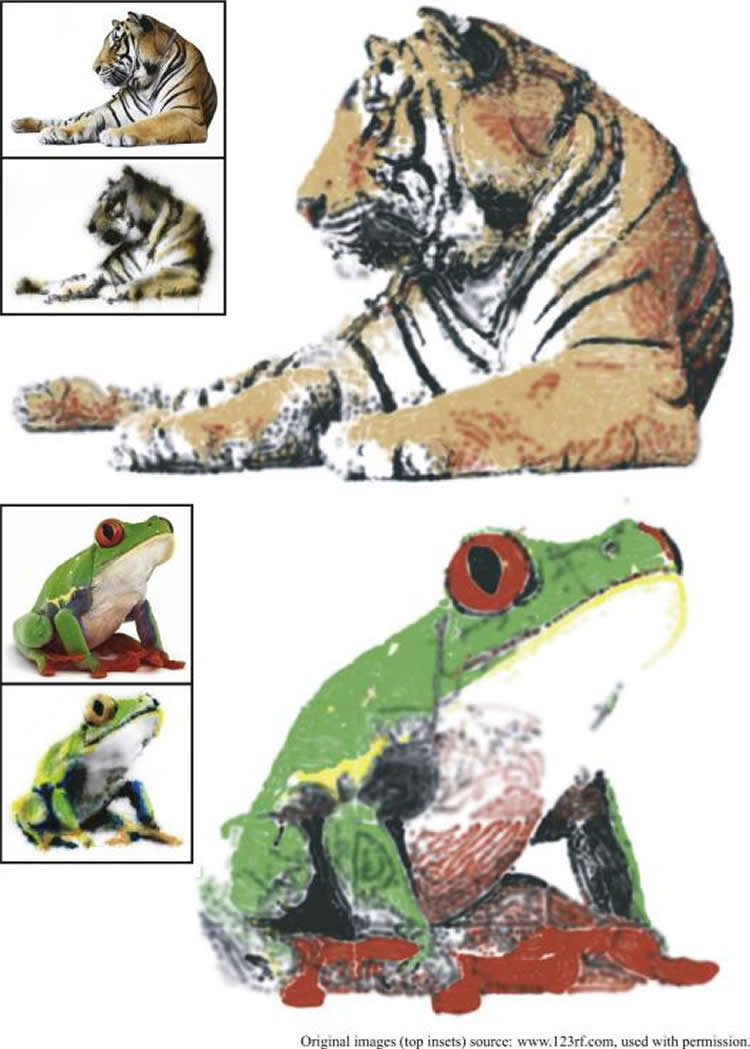

The researchers wanted a way to help non-artists create accurate reproductions of photographs as large-scale murals using spray painting. So they developed a computer-aided system that uses an ordinary paint spray can, tracks the can’s position relative to the wall or canvas and recognizes what image it “wants” to paint. As the person waves the pre-programmed spray can around the canvas, the system automatically operates the spray on/off button to reproduce the specific image as a spray painting.

The prototype is fast and lightweight: it includes two webcams and QR-coded cubes for tracking, and a small actuation device for the spray can, attached via a 3D-printed mount. Paint commands are transmitted by radio to a receiver connected directly to the servo motor that operates the spray nozzle. Running on a nearby computer, the real-time algorithm determines the optimal amount of paint of the current color to spray at the spray can’s tracked location. The end result is that the painting reveals itself as the user waves the can around, without the user necessarily needing to know the image beforehand.
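To make the idea of an “optimal amount of paint” at a tracked location more concrete, here is a minimal sketch in Python. It is not the researchers’ algorithm: it assumes a grayscale image stored as darkness values between 0 and 1, a simple Gaussian spray footprint, and a linear paint model that ignores saturation. The function names (`spray_footprint`, `optimal_paint_amount`, `apply_spray`) and all parameters are hypothetical and chosen only for illustration.

```python
import math


def spray_footprint(width, height, cx, cy, radius):
    """Assumed Gaussian falloff of paint density around the tracked can position (cx, cy)."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * radius ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def optimal_paint_amount(target, canvas, footprint, max_amount=1.0):
    """Least-squares amount a minimizing sum((target - canvas - a * footprint)^2).

    The unconstrained optimum is sum(r * k) / sum(k * k), with r = target - canvas.
    It is clamped to [0, max_amount] because paint cannot be removed.
    """
    num = 0.0
    den = 0.0
    for t_row, c_row, k_row in zip(target, canvas, footprint):
        for t, c, k in zip(t_row, c_row, k_row):
            num += (t - c) * k
            den += k * k
    if den == 0.0:
        return 0.0
    return min(max(num / den, 0.0), max_amount)


def apply_spray(canvas, footprint, amount):
    """Return a new canvas with the paint added; darkness is capped at 1.0."""
    return [
        [min(1.0, c + amount * k) for c, k in zip(c_row, k_row)]
        for c_row, k_row in zip(canvas, footprint)
    ]


if __name__ == "__main__":
    size = 5
    blank = [[0.0] * size for _ in range(size)]
    footprint = spray_footprint(size, size, cx=2, cy=2, radius=1.0)
    # Hypothetical target: a soft dark dot exactly where the can is pointing.
    target = [[0.5 * k for k in row] for row in footprint]
    amount = optimal_paint_amount(target, blank, footprint)
    canvas = apply_spray(blank, footprint, amount)
    print(f"paint amount: {amount:.2f}")
```

In a real system the computed amount would have to be translated into how long the servo holds the nozzle open, and the footprint would come from calibrating an actual spray can rather than from an assumed Gaussian. An amount of zero corresponds to keeping the nozzle closed, which is how the can avoids painting over regions that are already dark enough.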

Due to difficulty getting permission to spray paint university buildings, the researchers tested the automated painting system on large sheets of paper assembled into mural-size paintings. Though the method currently only supports painting on flat surfaces, one potential benefit of the new technique over standard printing is that it may be usable on more complicated, curved painting surfaces.

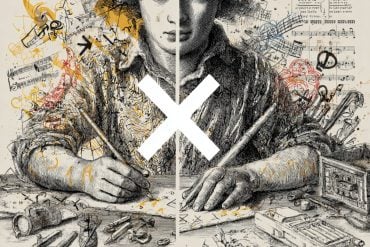

“Typically, computationally-assisted painting methods are restricted to the computer,” says co-author Wojciech Jarosz, an assistant professor of computer science at Dartmouth who previously was a senior research scientist at Disney Research Zurich. “In this research, we show that by combining computer graphics and computer vision techniques, we can bring such assistance technology to the physical world even for this very traditional painting medium, creating a somewhat unconventional form of digital fabrication. Our assistive approach is like a modern take on ‘paint by numbers’ for spray painting. Most importantly, we wanted to maintain the aesthetic aspects of physical spray painting and the tactile experience of holding and waving a physical spray can while enabling unskilled users to create a physical piece of art.”

In addition to Jarosz, the research team consisted of Romain Prévost, Alec Jacobson and Olga Sorkine-Hornung.

Source: John Cramer – Dartmouth College

Image Credit: The image is credited to Wojciech Jarosz.

Original Research: Abstract for “Large-scale painting of photographs by interactive optimization” by Romain Prévost, Alec Jacobson, Wojciech Jarosz, and Olga Sorkine-Hornung in Computers & Graphics. Published online November 22, 2015. doi:10.1016/j.cag.2015.11.001

Abstract

Large-scale painting of photographs by interactive optimization

We propose a system for painting large-scale murals of arbitrary input photographs. To that end, we choose spray paint, which is easy to use and affordable, yet requires skill to create interesting murals. An untrained user simply waves a programmatically actuated spray can in front of the canvas. Our system tracks the can’s position and determines the optimal amount of paint to disperse to best approximate the input image. We accurately calibrate our spray paint simulation model in a pre-process and devise optimization routines for run-time paint dispersal decisions. Our setup is light-weight: it includes two webcams and QR-coded cubes for tracking, and a small actuation device for the spray can, attached via a 3D-printed mount. The system performs at haptic rates, which allows the user – informed by a visualization of the image residual – to guide the system interactively to recover low frequency features. We validate our pipeline for a variety of grayscale and color input images and present results in simulation and physically realized murals.
