Summary: A new study reveals that the brain uses special neurons to track where we are in a sequence of actions, not just where we are in space. In mice, researchers found “goal-progress cells” that fire according to how far along the animal is in a behavioral task, enabling it to infer what comes next even in novel scenarios.

These cells create internal maps of behavior rather than physical environments, allowing for flexible, generalized thinking. The discovery sheds light on how the brain supports everyday problem-solving and may inform future advances in both neuroscience and artificial intelligence.

Key Facts:

- Goal-Progress Cells: Neurons in the cortex track behavioral sequences, not spatial position.

- Beyond Memory: Mice inferred the next step in task configurations they had never experienced, showing true generalization.

- Biological AI Bridge: Findings help connect brain function to adaptable AI systems.

Source: The Conversation

For decades, neuroscientists have developed mathematical frameworks to explain how brain activity drives behaviour in predictable, repetitive scenarios, such as playing a game.

These algorithms have not only described brain cell activity with remarkable precision but also helped develop artificial intelligence that achieves superhuman performance in specific tasks, such as playing Atari games or Go.

Yet these frameworks fall short of capturing the essence of human and animal behaviour: our extraordinary ability to generalise, infer and adapt.

Our study, published in Nature late last year, provides insights into how brain cells in mice enable this more complex, intelligent behaviour.

Unlike machines, humans and animals can flexibly navigate new challenges. Every day, we solve new problems by generalising from our knowledge or drawing from our experiences. We cook new recipes, meet new people, take a new path – and we can imagine the aftermath of entirely novel choices.

It was in the mid-20th century that psychologist Edward Tolman described the concept of “cognitive maps”. These are internal, mental representations of the world that organise our experiences and allow us to predict what we’ll see next.

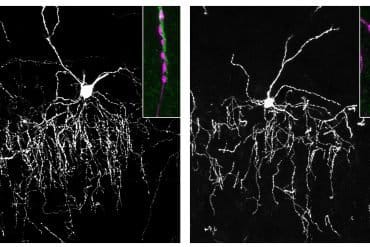

Starting in the 1970s, researchers identified a beautiful system of specialised cells in the rodent hippocampus (the brain’s memory centre) and entorhinal cortex (an area that deals with memory, navigation and time perception) that form a literal map of the animal’s environment.

These include “place cells”, which fire at specific locations, and “grid cells” that create a spatial framework. Together, these and a host of other neurons encode distances, goals and locations, forming a precise mental map of the physical world and where we are within it.

And now our attention has turned to other areas of cognition beyond finding our way around: generalisation, inference, imagination, social cognition and memory. The same areas of the brain that help us navigate in space are also involved in these functions.

Cells for generalising?

We wanted to know if there are cells that organise the knowledge of our behaviour, rather than the outside world, and how they work. Specifically, what are the algorithms that underlie the activity of brain cells as we generalise from past experience? How do we rustle up that new pasta dish?

And we did find such cells. There are neurons that tell us “where we are” in a sequence of behaviour (we haven’t named the cells).

To uncover the brain cells, networks and algorithms that perform these roles, we studied mice, training the animals to complete a task. The task had a sequence of actions with a repeating structure. Mice moved through four locations, or “goals”, containing a water reward (A, B, C and D) in loops.

When we moved the location of the goals, the mice were able to infer what came next in the sequence – even when they had never experienced that exact scenario before.

When mice reached goal D in a new location for the first time, they immediately knew to return to goal A. This wasn’t memory, because they had never encountered that configuration before. Instead, it shows that the mice understood the general structure of the task and tracked their position within it.
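To make the distinction between task structure and task-specific locations concrete, here is a minimal Python sketch. It is only an illustration of the idea, not the study's analysis code: the names `STATES`, `next_state` and `Task`, and the grid coordinates, are all hypothetical. The loop order (A, B, C, D, then back to A) is stored once, while each task only supplies its own binding from goals to locations.

```python
from dataclasses import dataclass

# Abstract task structure: four goals visited in a repeating loop.
STATES = ("A", "B", "C", "D")


def next_state(state: str) -> str:
    """Return the goal that follows `state` in the A-B-C-D loop."""
    i = STATES.index(state)
    return STATES[(i + 1) % len(STATES)]


@dataclass
class Task:
    """One task: the shared loop structure bound to task-specific locations."""
    locations: dict  # hypothetical mapping from goal name to maze coordinates

    def next_goal_location(self, state: str):
        # What follows what is shared across tasks;
        # only the goal-to-location binding changes.
        return self.locations[next_state(state)]


# Two hypothetical tasks with the same structure but different goal locations.
old_task = Task({"A": (0, 0), "B": (2, 0), "C": (2, 2), "D": (0, 2)})
new_task = Task({"A": (1, 1), "B": (0, 2), "C": (2, 1), "D": (0, 0)})

print(new_task.next_goal_location("D"))  # (1, 1), the new location of A
```

Because the rule "D is followed by A" lives in the structure rather than in any remembered route, the step from D back to A is available in the new task without ever having been experienced there.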

The mice had electrodes implanted into the brain, which allowed us to capture neural activity during the task. We found that specific cells in the cortex (the outermost layer of the brain) collectively mapped the animal’s goal progress.

For example, one cell could fire when the animal was 70% of the way to its goal, regardless of where the goal was or how far away.
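A toy model can illustrate what firing at 70% of the way means. The sketch below assumes progress is measured as distance travelled divided by the total distance to the goal, and it models the cell with a Gaussian tuning curve; both choices, along with the function names and every parameter value, are assumptions made for illustration and are not taken from the study.

```python
import math


def goal_progress(distance_travelled: float, total_distance: float) -> float:
    """Fraction of the way to the goal, clipped to the range [0, 1]."""
    if total_distance <= 0:
        raise ValueError("total_distance must be positive")
    return min(max(distance_travelled / total_distance, 0.0), 1.0)


def firing_rate(progress: float, preferred: float = 0.7,
                width: float = 0.1, peak_rate: float = 20.0) -> float:
    """Gaussian tuning over goal progress (all parameters hypothetical)."""
    return peak_rate * math.exp(-0.5 * ((progress - preferred) / width) ** 2)


# A short journey and a long one, both 70% complete.
short_trip = firing_rate(goal_progress(0.7, 1.0))
long_trip = firing_rate(goal_progress(2.8, 4.0))

# The same journeys at only 20% complete.
early = firing_rate(goal_progress(0.2, 1.0))

print(short_trip, long_trip, early)
```

In this sketch the modelled cell responds identically on the short and long journeys, because its input is the normalised fraction rather than the raw distance, and it is nearly silent early in either journey.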

Some cells tracked progress towards immediate subgoals – like chopping vegetables in our cooking analogy – while others mapped progress towards the overall goal, such as finishing the meal.

Together, these goal-progress cells created a system that gave the animal’s location in behavioural space rather than in physical space. Crucially, the system is flexible and can be updated if the task changes.

This encoding allows the brain to predict the upcoming sequence of actions without relying on simple associative memories.

Common experiences

Why should the brain bother to learn general structural representations of tasks? Why not create a new representation for each one? For generalisation to be worthwhile, the tasks we complete must contain regularities that can be exploited — and they do.

The behaviour we compose to reach our goals is replete with repetition. Generalisation allows knowledge to extend beyond individual instances. Throughout life, we encounter a highly structured distribution of tasks. And each day we solve new problems by generalising from past experiences.

A previous encounter with making bolognese can inform a new ragu recipe, because the same general steps apply to both (such as starting with frying onions and adding fresh herbs at the end).

We propose that the goal-progress cells in the cortex serve as the building blocks – internal frameworks that organise abstract relationships between events, actions and outcomes.

While we’ve only shown this in mice, it is plausible that the same thing happens in the human brain.

By documenting these cellular networks and the algorithms that underlie them, we are building new bridges between human and animal neuroscience, and between biological and artificial intelligence. And pasta.

About this cognition and neuroscience research news

Author: Mohamady El-Gaby

Source: The Conversation

Contact: Mohamady El-Gaby – The Conversation

Image: The image is credited to Neuroscience News

Original Research: Open access.

“A cellular basis for mapping behavioural structure” by Mohamady El-Gaby et al. Nature

Abstract

A cellular basis for mapping behavioural structure

To flexibly adapt to new situations, our brains must understand the regularities in the world, as well as those in our own patterns of behaviour. A wealth of findings is beginning to reveal the algorithms that we use to map the outside world.

However, the biological algorithms that map the complex structured behaviours that we compose to reach our goals remain unknown.

Here we reveal a neuronal implementation of an algorithm for mapping abstract behavioural structure and transferring it to new scenarios.

We trained mice on many tasks that shared a common structure (organizing a sequence of goals) but differed in the specific goal locations.

The mice discovered the underlying task structure, enabling zero-shot inferences on the first trial of new tasks. The activity of most neurons in the medial frontal cortex tiled progress to goal, akin to how place cells map physical space.

These ‘goal-progress cells’ generalized, stretching and compressing their tiling to accommodate different goal distances. By contrast, progress along the overall sequence of goals was not encoded explicitly.

Instead, a subset of goal-progress cells was further tuned such that individual neurons fired with a fixed task lag from a particular behavioural step.

Together, these cells acted as task-structured memory buffers, implementing an algorithm that instantaneously encoded the entire sequence of future behavioural steps, and whose dynamics automatically computed the appropriate action at each step.

These dynamics mirrored the abstract task structure both on-task and during offline sleep.

Our findings suggest that schemata of complex behavioural structures can be generated by sculpting progress-to-goal tuning into task-structured buffers of individual behavioural steps.
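The idea of a task-structured memory buffer, in which activity sits at a fixed task lag from a particular behavioural step, can be sketched as a simple ring of lag slots. This is a loose toy analogy under stated assumptions, not the neuronal algorithm reported in the paper: the class `TaskBuffer`, its methods, the location labels and the choice to count lag in whole goals are all hypothetical.

```python
class TaskBuffer:
    """Toy ring of lag slots for a loop of `period` behavioural steps."""

    def __init__(self, period: int = 4):
        self.period = period
        self.lag = {}  # hypothetical: location -> steps since it was a goal

    def step(self, visited_location):
        # Advance every stored location by one task step ...
        for loc in self.lag:
            self.lag[loc] = (self.lag[loc] + 1) % self.period
        # ... then anchor the location just visited at lag 0.
        self.lag[visited_location] = 0

    def predict_next(self):
        # The next goal is the location that will return to lag 0 on the
        # next step, i.e. the one currently at lag period - 1.
        for loc, lag in self.lag.items():
            if lag == self.period - 1:
                return loc
        return None  # not enough of the loop has been observed yet


buffer = TaskBuffer(period=4)
for location in ["north", "east", "south", "west"]:  # goals A, B, C, D
    buffer.step(location)

print(buffer.predict_next())  # north
```

After a single pass through four made-up goal locations, the buffer already points back to the first one, which is the flavour of first-trial inference the abstract describes. The prediction falls out of the buffer's own dynamics rather than from a stored association between the last goal and the first.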