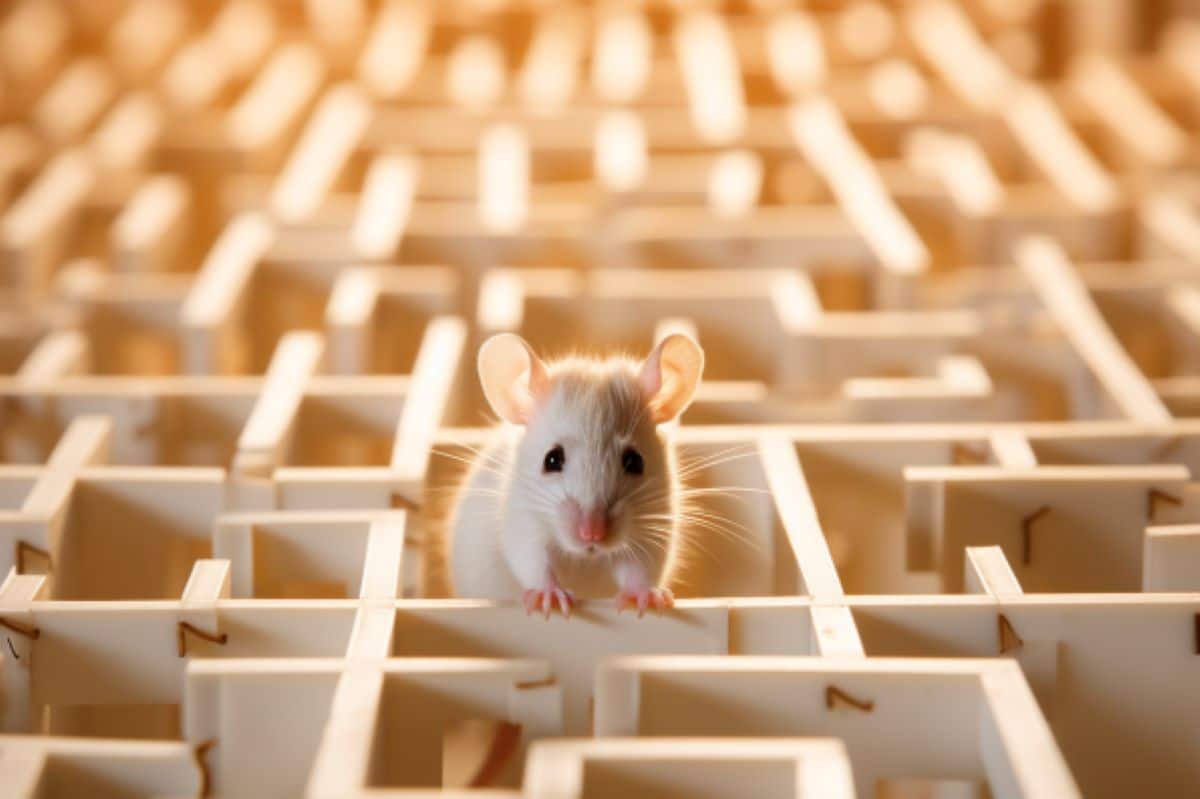

Summary: A new study of mouse behavior challenges previous assumptions about the cognitive strategies mice use in reward-based learning tasks.

While humans efficiently adopt the “win-stay, lose-shift” approach in “reversal learning” activities, mice were observed to blend this with an exploratory strategy. Instead of sticking to an optimal rule-based strategy, mice occasionally diverged, possibly indicating an innate skepticism about consistent rewards.

This insight into mouse cognition, revealed through the use of a modified Hidden Markov Model, could open new avenues for understanding human neurological disorders.

Key Facts:

- Mice displayed a blend of optimal “win-stay, lose-shift” strategy and a more exploratory approach during a reversal learning task.

- Researchers used a modified Hidden Markov Model, termed blockHMM, to accurately decipher the shifting strategies employed by mice.

- These findings could provide insights into neurological disorders like schizophrenia and autism, where reversal learning behavior is altered.

Source: MIT

Neuroscience discoveries ranging from the nature of memory to treatments for disease have depended on reading the minds of mice, so researchers need to truly understand what the rodents’ behavior is telling them during experiments.

In a new study that examines learning from reward, MIT researchers deciphered some initially mystifying mouse behavior, yielding new ideas about how mice think and a mathematical tool to aid future research.

The task the mice were supposed to master is simple: Turn a wheel left or right to get a reward and then recognize when the reward direction switches. When neurotypical people play such “reversal learning” games, they quickly infer the optimal approach: stick with the direction that works until it doesn’t and then switch right away.

Notably, people with schizophrenia struggle with the task. In the new study in PLOS Computational Biology, mice surprised scientists by showing that while they were capable of learning the “win-stay, lose-shift” strategy, they nonetheless refused to fully adopt it.

“It is not that mice cannot form an inference-based model of this environment—they can,” said corresponding author Mriganka Sur, Newton Professor in The Picower Institute for Learning and Memory and MIT’s Department of Brain and Cognitive Sciences (BCS). “The surprising thing is that they don’t persist with it. Even in a single block of the game where you know the reward is 100 percent on one side, every so often they will try the other side.”

While the mouse motif of departing from the optimal strategy could be due to a failure to hold it in memory, said lead author and Sur Lab graduate student Nhat Le, another possibility is that mice don’t commit to the “win-stay, lose-shift” approach because they don’t trust that their circumstances will remain stable or predictable.

Instead, they might deviate from the optimal regime to test whether the rules have changed. Natural settings, after all, are rarely stable or predictable.

“I’d like to think mice are smarter than we give them credit for,” Le said.

But regardless of which reason may cause the mice to mix strategies, added co-senior author Mehrdad Jazayeri, Associate Professor in BCS and the McGovern Institute for Brain Research, it is important for researchers to recognize that they do and to be able to tell when and how they are choosing one strategy or another.

“This study highlights the fact that, unlike the accepted wisdom, mice doing lab tasks do not necessarily adopt a stationary strategy and it offers a computationally rigorous approach to detect and quantify such non-stationarities,” he said.

“This ability is important because when researchers record the neural activity, their interpretation of the underlying algorithms and mechanisms may be invalid when they do not take the animals’ shifting strategies into account.”

Tracking thinking

The research team, which also includes co-author Murat Yildirim, a former Sur lab postdoc who is now an assistant professor at the Cleveland Clinic Lerner Research Institute, initially expected that the mice might adopt one strategy or the other.

They simulated the results they’d expect to see if the mice either adopted the optimal strategy of inferring a rule about the task or more randomly surveyed whether left or right turns were being rewarded. Mouse behavior on the task, even after days, varied widely, but it never resembled the results simulated by just one strategy.

Performance differed from mouse to mouse along three parameters: how quickly they switched directions after the rule switched, how long it took them to transition to the new direction, and how loyal they remained to the new direction.
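To make the three parameters concrete, here is a minimal sketch of one way such a choice transition could be described: a logistic curve giving the probability of choosing the newly rewarded side as a function of trials since the reversal. The function name `p_correct` and the parameter names `offset`, `slope` and `lapse` are illustrative assumptions, not notation taken from the study.

```python
import math

def p_correct(trial, offset, slope, lapse):
    """Probability of choosing the newly rewarded side on a given trial.

    trial  : trials elapsed since the rewarded side switched (0, 1, 2, ...)
    offset : trial at which the animal is halfway through its switch
             (how quickly it reacts to the reversal)
    slope  : steepness of the switch (how long the transition takes)
    lapse  : probability of still picking the unrewarded side once the
             switch is complete (how loyal it stays to the new side)

    All three names are hypothetical labels chosen for this illustration.
    """
    sigmoid = 1.0 / (1.0 + math.exp(-slope * (trial - offset)))
    # The curve rises from about `lapse` to about `1 - lapse`.
    return lapse + (1.0 - 2.0 * lapse) * sigmoid

# A fast, decisive switcher versus a slow, less committed one.
fast = [round(p_correct(t, offset=1, slope=3.0, lapse=0.02), 2) for t in range(6)]
slow = [round(p_correct(t, offset=8, slope=0.5, lapse=0.2), 2) for t in range(6)]
```

Under this sketch, an ideal "win-stay, lose-shift" player would correspond to a small offset, a steep slope and a lapse near zero.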

Across 21 mice, the raw data showed a surprising diversity of outcomes on a task that neurotypical humans uniformly optimize. But the mice clearly weren’t helpless. Their average performance significantly improved over time, even though it plateaued below the optimal level.

In the task, the rewarded side switched every 15-25 turns. The team realized the mice were using more than one strategy in each such “block” of the game, rather than just inferring the simple rule and optimizing based on that inference.
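The task and the two kinds of behavior can be illustrated with a toy simulation. The sketch below assumes a deterministic reward, blocks of 15 to 25 turns as described above, and an agent that follows "win-stay, lose-shift" except on a fraction of turns where it picks a side at random. The function name `simulate` and the `explore_prob` parameter are hypothetical; this is not the simulation code used in the study.

```python
import random

def simulate(n_blocks, explore_prob, seed=0):
    """Return the fraction of rewarded turns in a toy reversal task.

    explore_prob is an assumed parameter: the chance, on each turn, that
    the agent ignores the last outcome and picks a side at random.
    """
    rng = random.Random(seed)
    rewarded_side = 0   # 0 = left, 1 = right
    choice = 0          # the side win-stay, lose-shift currently favors
    rewards = 0
    trials = 0
    for _ in range(n_blocks):
        block_len = rng.randint(15, 25)       # rewarded side flips every 15-25 turns
        for _ in range(block_len):
            if rng.random() < explore_prob:
                choice = rng.randint(0, 1)    # exploratory turn
            rewarded = choice == rewarded_side
            rewards += rewarded
            trials += 1
            if not rewarded:
                choice = 1 - choice           # lose-shift (win-stay keeps choice)
        rewarded_side = 1 - rewarded_side     # reversal at the end of the block
    return rewards / trials

pure_wsls = simulate(n_blocks=200, explore_prob=0.0)
mixed = simulate(n_blocks=200, explore_prob=0.2)
```

With `explore_prob=0.0` the agent makes exactly one error per reversal, so its accuracy sits close to the ceiling. Raising `explore_prob` lowers accuracy even though the agent still "knows" the rule, which is the qualitative pattern the article describes.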

To disentangle when the mice were employing that strategy or another, the team harnessed an analytical framework called a Hidden Markov Model (HMM), which can computationally tease out when one unseen state is producing a result vs. another unseen state. Le likens it to what a judge on a cooking show might do: inferring which chef contestant made which version of a dish based on patterns in each plate of food before them.

Before the team could use an HMM to decipher their mouse performance results, however, they had to adapt it. A typical HMM might apply to individual mouse choices, but here the team modified it to explain choice transitions over the course of whole blocks.

They dubbed their modified model the blockHMM. Computational simulations of task performance using the blockHMM showed that the algorithm is able to infer the true hidden states of an artificial agent. The authors then used this technique to show the mice were persistently blending multiple strategies, achieving varied levels of performance.
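The idea of assigning a hidden behavioral mode to each whole block, rather than to each individual choice, can be sketched in a few lines. The example below is only an illustration under strong assumptions: each mode is a fixed, hand-picked transition curve (with hypothetical `offset`, `slope` and `lapse` parameters), modes persist from block to block with an assumed probability `stay_prob`, and the most likely mode sequence is found with standard Viterbi decoding. The published blockHMM also has to learn its parameters from data, which this sketch does not attempt.

```python
import math

def p_correct(t, offset, slope, lapse):
    # Assumed logistic transition curve; lapse must be > 0 to keep logs finite.
    return lapse + (1 - 2 * lapse) / (1 + math.exp(-slope * (t - offset)))

def block_loglik(block, mode):
    """Log-likelihood of one block (list of 1 = rewarded side, 0 = other side)."""
    total = 0.0
    for t, correct in enumerate(block):
        p = p_correct(t, *mode)
        total += math.log(p if correct else 1 - p)
    return total

def viterbi_blocks(blocks, modes, stay_prob=0.8):
    """Most likely sequence of modes, one hidden mode per block."""
    k = len(modes)  # needs at least two modes
    log_stay = math.log(stay_prob)
    log_move = math.log((1 - stay_prob) / (k - 1))

    def log_trans(i, j):
        return log_stay if i == j else log_move

    # Uniform prior over modes for the first block.
    score = [math.log(1 / k) + block_loglik(blocks[0], m) for m in modes]
    back = []
    for block in blocks[1:]:
        new_score, pointers = [], []
        for j, m in enumerate(modes):
            best_i = max(range(k), key=lambda i: score[i] + log_trans(i, j))
            new_score.append(score[best_i] + log_trans(best_i, j)
                             + block_loglik(block, m))
            pointers.append(best_i)
        score = new_score
        back.append(pointers)
    # Trace the best path backwards from the final block.
    state = max(range(k), key=lambda i: score[i])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]

# Two hypothetical modes: 0 = fast, committed switch; 1 = slow, exploratory.
modes = [(1.0, 3.0, 0.02), (8.0, 0.5, 0.2)]
fast_block = [0] + [1] * 19
slow_block = [0] * 8 + [1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1]
decoded = viterbi_blocks(
    [fast_block, fast_block, slow_block, slow_block, fast_block], modes)
```

Here `decoded` holds one mode index per block, which is the kind of block-by-block labeling the article describes.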

“We verified that each animal executes a mixture of behavior from multiple regimes instead of a behavior in a single domain,” Le and his co-authors wrote.

“Indeed 17/21 mice used a combination of low, medium and high-performance behavior modes.”

Further analysis revealed that the strategies in play were indeed the “correct” rule inference strategy and a more exploratory strategy consistent with randomly testing options to get turn-by-turn feedback.

Now that the researchers have decoded the peculiar approach mice take to reversal learning, they are planning to look more deeply into the brain to understand which brain regions and circuits are involved. By watching brain cell activity during the task, they hope to discern what underlies the decisions the mice make to switch strategies.

By examining reversal learning circuits in detail, Sur said, it’s possible the team will gain insights that could help explain why people with schizophrenia show diminished performance on reversal learning tasks.

Sur added that some people with autism spectrum disorders also persist with newly unrewarded behaviors longer than neurotypical people, so his lab will also have that phenomenon in mind as they investigate.

Yildirim, too, is interested in examining potential clinical connections.

“This reversal learning paradigm fascinates me since I want to use it in my lab with various preclinical models of neurological disorders,” he said. “The next step for us is to determine the brain mechanisms underlying these differences in behavioral strategies and whether we can manipulate these strategies.”

Funding: Funding for the study came from The National Institutes of Health, the Army Research Office, a Paul and Lilah Newton Brain Science Research Award, the Massachusetts Life Sciences Initiative, The Picower Institute for Learning and Memory and The JPB Foundation.

About this learning and cognition research news

Author: David Orenstein

Contact: David Orenstein – MIT

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Mixtures of strategies underlie rodent behavior during reversal learning” by Mriganka Sur et al. PLOS Computational Biology

Abstract

Mixtures of strategies underlie rodent behavior during reversal learning

In reversal learning tasks, the behavior of humans and animals is often assumed to be uniform within single experimental sessions to facilitate data analysis and model fitting.

However, behavior of agents can display substantial variability in single experimental sessions as they execute different blocks of trials with different transition dynamics.

Here, we observed that in a deterministic reversal learning task, mice display noisy and sub-optimal choice transitions even at the expert stages of learning. We investigated two sources of the sub-optimality in the behavior.

First, we found that mice exhibit a high lapse rate during task execution, as they reverted to unrewarded directions after choice transitions. Second, we unexpectedly found that a majority of mice did not execute a uniform strategy, but rather mixed between several behavioral modes with different transition dynamics.

We quantified the use of such mixtures with a state-space model, block Hidden Markov Model (block HMM), to dissociate the mixtures of dynamic choice transitions in individual blocks of trials.

Additionally, we found that blockHMM transition modes in rodent behavior can be accounted for by two different types of behavioral algorithms, model-free or inference-based learning, that might be used to solve the task.

Combining these approaches, we found that mice used a mixture of both exploratory, model-free strategies and deterministic, inference-based behavior in the task, explaining their overall noisy choice sequences.
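The contrast between the two families of algorithms named in the abstract can be sketched with two update rules. The model-free rule below is a generic value update of the kind used in Q-learning, and the inference-based rule is a simple Bayesian update of the belief that the left side is currently rewarded. Both functions, and the `learning_rate` and `switch_prob` parameters, are assumptions made for illustration rather than the models fitted in the paper.

```python
def q_update(q, choice, reward, learning_rate=0.3):
    """Model-free: nudge the value of the chosen side toward the outcome."""
    q = list(q)
    q[choice] += learning_rate * (reward - q[choice])
    return q

def belief_update(p_left, choice, reward, switch_prob=0.05):
    """Inference-based: update P(left is the rewarded side).

    Assumes deterministic rewards, so a single outcome identifies the
    rewarded side, and an assumed chance switch_prob that the side flips
    before the next turn. Keep switch_prob strictly between 0 and 1 so the
    belief never reaches exactly 0 or 1.
    """
    # Reward on left, or no reward on right, both imply left is rewarded.
    like_left = 1.0 if (choice == 0) == bool(reward) else 0.0
    like_right = 1.0 - like_left
    post = p_left * like_left / (p_left * like_left + (1 - p_left) * like_right)
    # Allow for a hidden reversal before the next turn.
    return post * (1 - switch_prob) + (1 - post) * switch_prob

# One unrewarded left turn right after a reversal (choice 0 = left):
q_after = q_update([1.0, 0.0], choice=0, reward=0)
belief_after = belief_update(0.95, choice=0, reward=0)
```

In this sketch the model-free learner still favors left after one unrewarded turn and shifts only gradually, while the inference-based learner flips its belief at once. That difference in how sharply choices change after a reversal is what lets the two kinds of strategy be told apart.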

Together, our combined computational approach highlights intrinsic sources of noise in rodent reversal learning behavior and provides a richer description of behavior than conventional techniques, while uncovering the hidden states that underlie the block-by-block transitions.