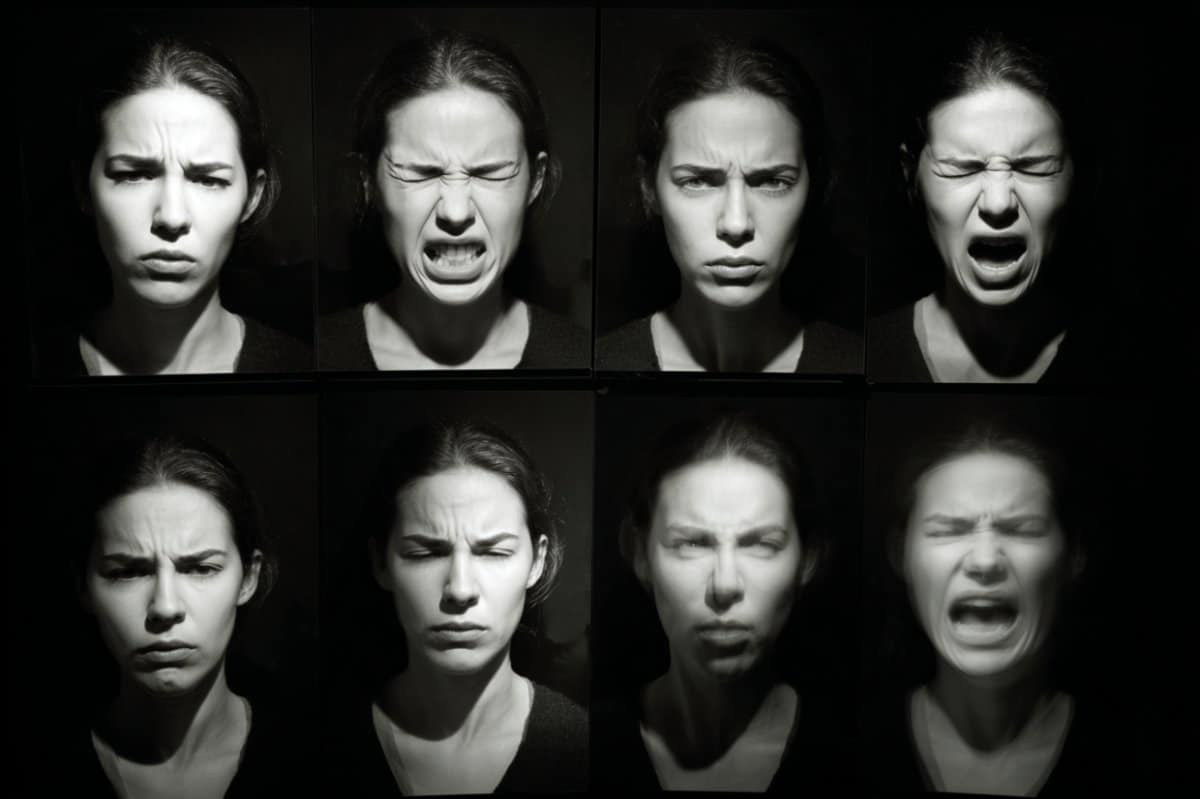

Summary: New research shows that facial expressions can reveal internal cognitive states, accurately predicting task performance across both macaques and mice. By analyzing facial features during a foraging task in a virtual reality setup, researchers identified patterns linked to motivation, focus, and responsiveness.

These patterns were consistent across species, suggesting a shared link between facial expression and cognition. If similar findings hold in humans, this method could revolutionize diagnostics in ADHD, autism, and dementia by objectively measuring attention and other mental states.

Key Facts:

- Cross-Species Insight: Facial expressions reflect similar internal cognitive states in both mice and macaques.

- Predictive Power: Specific facial patterns can forecast how quickly and accurately animals complete tasks.

- Clinical Potential: If confirmed in humans, the findings may lead to new diagnostic tools for conditions such as autism, ADHD, or dementia, including for non-verbal individuals.

Source: Max Planck Society

Whether you are solving a puzzle, navigating a shopping center, or writing an email, how well you do depends not only on the task at hand but also on your internal cognitive state.

Motivated, focused, restless, confident, or insecure?

In a new study published in Nature Communications, researchers at the Ernst Strüngmann Institute in Frankfurt have now shown that such cognitive states can be identified from facial expressions — and can even be used to accurately predict how quickly and successfully a task will be solved.

What’s more, this works across species, specifically in macaques and mice. In both, facial expressions measurably reflect not only emotional states but also latent cognitive processes.

Cognitive states are similar across species

Animal behaviour, for example when searching for food, is largely influenced by internal cognitive states such as attention or motivation.

The aim of the study was therefore to better describe functional aspects of cognitive processes and to analyze whether latent cognitive states are similar across species.

To this end, the researchers had macaques and mice perform a naturalistic foraging task in a specially developed virtual reality environment.

A wide range of facial features was recorded and then fed into a statistical model and further computer simulations to identify a series of latent states. These states accurately predict when the animals will respond to the stimuli shown and how well they will solve the search task.

The relationship between the identified states and task performance is comparable between species.

“Each cognitive state corresponds to a characteristic pattern of facial features that also overlaps between species,” said postdoctoral researcher Alejandro Tlaie Boria, first author of the study.

“This means that facial expressions can be considered a reliable manifestation of internal cognitive states even across species boundaries.”

Facial expressions provide insight into thought processes

The study also shows how facial expressions can serve as objective measures of internal states, allowing conclusions to be drawn about thought processes. This is directly relevant for research on animal models and could represent a breakthrough for comparative neuroscience and behavioral research.

Opportunities for ADHD, autism, and dementia research

Whether the results can be transferred to human facial expressions remains to be investigated. However, if they are confirmed for humans, the findings could become relevant for applications in psychiatry and in autism and dementia research.

The approaches could be used to recognize latent cognitive states in non-verbal individuals, such as in cases of autism or locked-in syndrome.

The objective measurement of attention states also offers significant potential for improvement in ADHD diagnostics, for example to quantify severity or to identify specific cognitive patterns that can be assigned to ADHD subtypes.

About this facial expression and cognitive state research news

Author: Andrea Knierriem

Source: Max Planck Society

Contact: Andrea Knierriem – Max Planck Society

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Inferring internal states across mice and monkeys using facial features” by Alejandro Tlaie Boria et al. Nature Communications

Abstract

Inferring internal states across mice and monkeys using facial features

Animal behaviour is shaped to a large degree by internal cognitive states, but it is unknown whether these states are similar across species.

To address this question, here we develop a virtual reality setup in which male mice and macaques engage in the same naturalistic visual foraging task.

We exploit the richness of a wide range of facial features extracted from video recordings during the task, to train a Markov-Switching Linear Regression (MSLR). By doing so, we identify, on a single-trial basis, a set of internal states that reliably predicts when the animals are going to react to the presented stimuli.

Even though the model is trained purely on reaction times, it can also predict task outcome, supporting the behavioural relevance of the inferred states.

The relationship of the identified states to task performance is comparable between mice and monkeys.

Furthermore, each state corresponds to a characteristic pattern of facial features that partially overlaps between species, highlighting the importance of facial expressions as manifestations of internal cognitive states across species.
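
To make the Markov-Switching Linear Regression named in the abstract more concrete, here is a minimal sketch of the general idea, not the authors' implementation. A hidden state follows a Markov chain, and each state has its own linear mapping from features to an outcome such as reaction time. All names (`simulate_mslr`, `fit_per_state`), the random stand-in features, and the parameter values are assumptions for illustration. For simplicity the fit assumes the hidden states are known, whereas a real MSLR has to infer them from the data, typically with an expectation-maximization procedure.

```python
import numpy as np


def simulate_mslr(n_trials, transition, weights, intercepts, noise_sd, rng):
    """Simulate hidden states and outcomes from a Markov-switching linear regression.

    transition: (K, K) row-stochastic matrix of state-to-state probabilities.
    weights:    (K, D) regression weights, one row per hidden state.
    intercepts: (K,) regression intercepts, one per hidden state.
    """
    n_states, n_features = weights.shape
    # Random numbers stand in for per-trial facial features (an assumption).
    features = rng.normal(size=(n_trials, n_features))
    states = np.zeros(n_trials, dtype=int)  # every simulation starts in state 0
    outcomes = np.zeros(n_trials)
    for t in range(n_trials):
        if t > 0:
            # The next state depends only on the previous state.
            states[t] = rng.choice(n_states, p=transition[states[t - 1]])
        k = states[t]
        # The active state selects which linear model generates the outcome.
        outcomes[t] = (
            features[t] @ weights[k] + intercepts[k] + rng.normal(scale=noise_sd)
        )
    return features, states, outcomes


def fit_per_state(features, states, outcomes, n_states):
    """Ordinary least squares per state, assuming the states are already known.

    Returns an array of shape (K, D + 1): weights followed by the intercept.
    """
    design = np.column_stack([features, np.ones(len(features))])
    coefs = []
    for k in range(n_states):
        mask = states == k
        beta, *_ = np.linalg.lstsq(design[mask], outcomes[mask], rcond=None)
        coefs.append(beta)
    return np.array(coefs)


# Usage: two "sticky" hidden states with different feature-to-outcome mappings.
rng = np.random.default_rng(0)
transition = np.array([[0.95, 0.05], [0.05, 0.95]])
weights = np.array([[1.0, -0.5], [-1.0, 2.0]])
intercepts = np.array([0.3, 0.8])
features, states, outcomes = simulate_mslr(
    2000, transition, weights, intercepts, 0.1, rng
)
print(fit_per_state(features, states, outcomes, n_states=2).round(2))
```

The point of the sketch is that the same facial features can map to the outcome differently depending on the hidden state, which is why a single regression across all trials would blur state-specific patterns.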