Summary: Researchers are retrofitting wireless earbuds to detect neural signals and relay the data to smartphones via Bluetooth. They say the new Ear EEG system could have multiple applications, including health monitoring.

Source: UC Berkeley

From keypads to touch screens to voice commands – step by step, the interface between users and their smartphones has become more personalized, more seamless. Now the ultimate personalized interface is approaching: issuing smartphone commands with your brain waves.

Communication between brain activity and computers, known as a brain-computer interface, or BCI, has been used in clinical trials to monitor epilepsy and other brain disorders. BCI has also shown promise as a technology that lets a user move a prosthesis simply by neural commands. Tapping into the basic BCI concept would make smartphones smarter than ever.

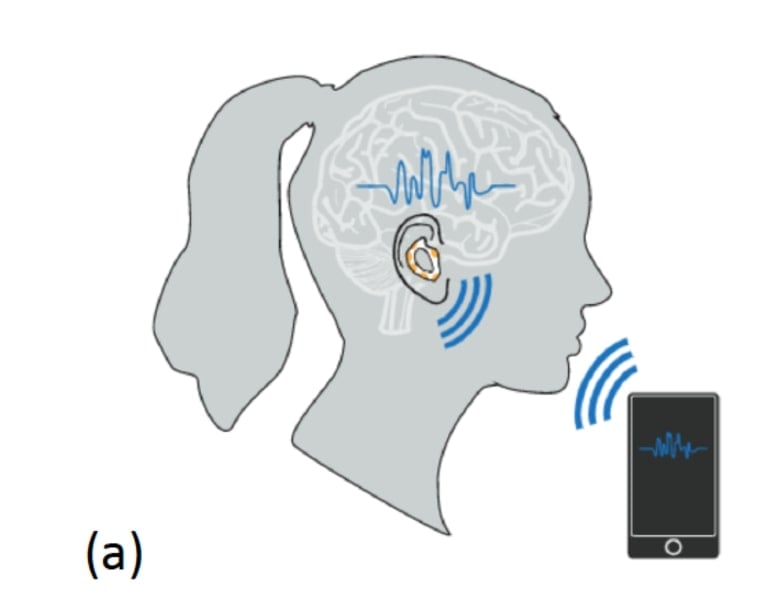

Research has zeroed in on retrofitting wireless earbuds to detect neural signals. The data would then be transmitted to a smartphone via Bluetooth. Software at the smartphone end would translate different brain wave patterns into commands. The emerging technology is called Ear EEG.

Rikky Muller, Assistant Professor of Electrical Engineering and Computer Science, has refined the physical comfort of EEG earbuds and has demonstrated their ability to detect and record brain activity. With support from the Bakar Fellowship Program, she is building out several applications to establish Ear EEG as a new platform technology to support consumer and health monitoring apps.

Q When did you first recognize the potential of Ear EEG for smartphone use?

A In the last five years, Ear EEG has emerged as a viable neural recording modality. Inspired by the popularity of wireless earbuds, my group aimed to figure out how to make Ear EEG a practical and comfortable user-generic interface that can be integrated with consumer earbuds. We’ve demonstrated the ability to fit a wide population of users, to detect high-quality neural signals and to transmit these signals wirelessly via Bluetooth.

Q The technology seems so sci-fi. How big a market do you think there is?

A The global hearables market is larger than the market for any other wearable, including smart watches.

We see Ear EEG as an enormously promising new consumer platform.

Q What types of applications do you imagine?

A This is the focus of my Bakar Fellowship. We know we can record a number of signals very robustly, such as eye blinks and brain signals associated with sleep and relaxation. As an example, eye blinks might be used to purposefully turn on the smartphone instead of relying on a voice command. On the other hand, they could also be used in conjunction with signatures of relaxation to monitor drowsiness.
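
Editor's illustration: the idea of using eye blinks as a deliberate command can be sketched in a few lines of Python. This is a minimal sketch and not the researchers' method. It assumes blinks show up as brief, large deflections in the recorded signal, and every name and number in it (`detect_blinks`, `blinks_to_command`, the 100 microvolt threshold, the 250 Hz sampling rate, the double-blink "wake" gesture) is hypothetical.

```python
def detect_blinks(samples, threshold_uv=100.0, refractory=50):
    """Return the sample indices where a blink-like deflection starts.

    samples: signal amplitudes in microvolts (assumed units and scale)
    threshold_uv: assumed amplitude at which a deflection counts as a blink
    refractory: samples skipped after a detection so one blink is counted once
    """
    blinks = []
    i = 0
    while i < len(samples):
        if abs(samples[i]) >= threshold_uv:
            blinks.append(i)
            i += refractory  # jump past the rest of this blink
        else:
            i += 1
    return blinks


def blinks_to_command(blink_indices, sample_rate_hz=250, window_s=1.0):
    """Map two blinks within window_s seconds to a hypothetical 'wake' command."""
    window = int(sample_rate_hz * window_s)
    for first, second in zip(blink_indices, blink_indices[1:]):
        if second - first <= window:
            return "wake"
    return None


# Synthetic signal: two short, large deflections separated by a quiet stretch.
signal = [0.0] * 100 + [150.0] * 10 + [0.0] * 100 + [160.0] * 10 + [0.0] * 100
command = blinks_to_command(detect_blinks(signal))
```

Requiring two blinks close together is one way to separate a purposeful gesture from ordinary blinking; a real system would need filtering and per-user tuning that this sketch leaves out.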

Q What other applications do you envision, whether a consumer app or for health?

A We are investigating ideas from individualized sound experience to biofeedback. I would love to tell you, “These are the two killer apps for this new technology,” but I view Ear EEG as a platform technology – once you put a new platform in the hands of app developers, they are going to come up with extremely useful things to do with it.

Q How have you improved the comfort of the Ear EEG earbuds?

A Everyone’s ears are a little different. That’s something that developers of hearing aids have been working on for years. Ear EEG has the added challenge of detecting what are really very small signals, so the electrodes must make very good contact with the ear canal.

We took a database of ear canal measurements published by the audiology community for hearing aids, and we created an earbud structure with flexible electrodes in an outward flare that creates a gentle pressure so the earbud fits in anyone’s ear. It’s user-generic.

Q Does the Ear EEG need to be customized any more than that?

A Neural signals are also person-specific, so we need to personalize Ear EEG to individuals. We’re developing a training sequence – a machine learning-based classifier – to collect data on individuals’ brain wave patterns. Users would go through a training sequence, just like when you get a new phone, you might be asked to train the fingerprint or the face ID sensor.

We also need to assess which types of inputs are acceptable to users. There are many pieces of amazing technology that haven’t been adopted widely because they are not very comfortable to use in your daily life. I don’t know, for example, if “Blink once for yes, and twice for no” is going to be readily adopted. So there’s a big question of usability.
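
Editor's illustration: the per-user training sequence mentioned above can be pictured as a short calibration step followed by classification. The sketch below uses a nearest-centroid classifier as a stand-in; the interview does not say which classifier the group uses. The feature vectors, the labels "relaxed" and "alert", and the function names are all hypothetical.

```python
from math import dist
from statistics import fmean


def train_centroids(labeled_features):
    """Average each label's calibration vectors into one centroid per label.

    labeled_features: dict mapping a label to a list of equal-length
    feature vectors recorded from one user during calibration.
    """
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in labeled_features.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean) to features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical two-number features collected while one user calibrates.
calibration = {
    "relaxed": [[0.8, 0.1], [0.9, 0.2]],
    "alert": [[0.2, 0.7], [0.1, 0.9]],
}
user_model = train_centroids(calibration)
state = classify(user_model, [0.7, 0.2])
```

Because the centroids come from one person's calibration data, each user gets their own model, which mirrors the fingerprint-enrollment analogy in the answer above.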

Q What are the next steps to get closer to marketability?

A Headphones are a very established industry. What we have is a new sensor platform. So the question is really how to integrate it with earbuds that are manufactured today. The Bakar Spark Fund is really helping us collaborate with industry to help establish this as a commercial technology. There are still many challenges, but I’m sure we’re going to get there.

About this neurotech research news

Author: Wallace Ravven

Contact: Wallace Ravven – UC Berkeley

Image: The image is credited to the researchers