Summary: Researchers have made a significant advance in understanding neuronal variability in the brain, with implications for both neuroscience and AI development. Their study reveals how dendrites, the antenna-like branches of neurons, control the variability of neuronal responses.

This research not only advances our understanding of how neurons process variable inputs but also offers a new perspective for AI developers in mimicking brain-like computation.

Key Facts:

- The study focuses on how dendrites control the variability of neuronal responses, a property that has been shown to control synaptic plasticity.

- Zachary Friedenberger’s mathematical expertise was pivotal in developing a model for simulating neuronal networks with active dendrites.

- The findings provide critical insights into biological computation, valuable for both neuroscientists and AI developers.

Source: University of Ottawa

The inner workings of the human brain remain a gradually unraveling mystery, and a compelling new study led by Dr. Richard Naud of the University of Ottawa's Faculty of Medicine brings us closer to answering some of its big questions.

The study's results have important implications for theories of learning and working memory, and they could help inform future developments in artificial intelligence (AI), since AI developers and programmers follow the work of Dr. Naud and other leading neuroscientists.

Published in Nature Computational Science, the study tackles the many-layered mystery of the "response variability" of neurons, the brain cells that use electrical signals and chemicals to process information and that underpin all the remarkable aspects of human consciousness.

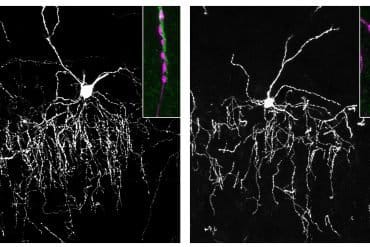

The findings reveal the nuts and bolts of how neuronal variability is controlled by dendrites, the antennas that reach out from each neuron to receive synaptic inputs in our own personal neural communication networks. The rigorous study establishes that properties of dendrites potently control output variability, which has been shown to control synaptic plasticity in the brain.

"The intensity of a neuron's response is controlled by inputs to its core, but the variability of a neuron's response is controlled by the inputs to its little antennas – the dendrites," says Dr. Naud, an Associate Professor in the Faculty of Medicine's Department of Cellular and Molecular Medicine and the uOttawa Department of Physics. "This study establishes more precisely how single neurons can have this crucial property of controlling response variability with their inputs."
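To make the distinction between intensity and variability concrete, here is a minimal Python (NumPy) sketch that builds two synthetic spike trains with the same mean firing rate but very different interspike-interval (ISI) variability, summarized by the coefficient of variation (CV, the standard deviation of the intervals divided by their mean). The gamma and exponential-mixture interval distributions, the function name `rate_and_cv`, and all parameter values are illustrative assumptions; this is not the model from the study and it does not simulate dendrites.

```python
import numpy as np


def rate_and_cv(spike_times):
    """Firing rate (Hz) and ISI coefficient of variation for sorted spike times in seconds."""
    isis = np.diff(spike_times)
    rate_hz = 1.0 / isis.mean()
    cv = isis.std() / isis.mean()
    return rate_hz, cv


rng = np.random.default_rng(seed=0)
n_intervals = 20_000
mean_isi = 0.1  # assumed 100 ms mean interval, i.e. about 10 Hz for both trains

# "Regular" train: gamma-distributed ISIs with shape 4 have CV = 1/sqrt(4) = 0.5.
regular = np.cumsum(rng.gamma(shape=4.0, scale=mean_isi / 4.0, size=n_intervals))

# "Bursty" train (hypothetical): half short within-burst gaps (mean 5 ms) and half
# long pauses (mean 195 ms), so the overall mean ISI is still 0.5*5 + 0.5*195 = 100 ms.
is_short = rng.random(n_intervals) < 0.5
bursty_isis = np.where(
    is_short,
    rng.exponential(scale=0.005, size=n_intervals),
    rng.exponential(scale=0.195, size=n_intervals),
)
bursty = np.cumsum(bursty_isis)

results = {name: rate_and_cv(train) for name, train in [("regular", regular), ("bursty", bursty)]}
for name, (rate_hz, cv) in results.items():
    print(f"{name:>7}: rate = {rate_hz:.1f} Hz, ISI CV = {cv:.2f}")
```

Both trains fire at roughly 10 Hz, so a readout of intensity alone cannot tell them apart; only the CV shows that the bursty train is far more variable, with a value above 1, the CV of a Poisson process.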

Dr. Naud suspected that if a mathematical framework he had used to describe the cell body of neurons were extended to account for their dendrites, his team might be able to simulate networks of neurons with active dendrites efficiently.

Cue the contribution of Zachary Friedenberger, a PhD student in the Department of Physics and a member of Dr. Naud's lab, who brought a background in theoretical physics to the problem. Fast forward to the completed study: the model's predictions held over a wide range of model parameters and were validated by analysis of in vivo recording data.

“He managed to solve the math in a record time and solved a number of theoretical challenges I had not foreseen,” Dr. Naud says.

Dr. Naud believed that such a technique could provide insight into how neurons respond to variable inputs, so the team set out to develop one that could compute response statistics from a neuronal model with an active dendrite.
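Renewal theory, which the study's abstract mentions alongside cable theory, treats interspike intervals as independent draws from a single interval distribution, so output statistics can follow directly from that distribution rather than from long simulations. As a toy illustration only, the Python sketch below assumes a gamma interval distribution with shape `k` (a common textbook choice, not the study's dendritic model) and compares its closed-form coefficient of variation (CV), 1/sqrt(k), with a Monte Carlo estimate. The function names and parameter values are hypothetical.

```python
import numpy as np


def gamma_isi_stats(k, mean_isi):
    """Closed-form rate and ISI CV for a gamma renewal process with shape k (toy model)."""
    scale = mean_isi / k
    variance = k * scale**2            # gamma variance = shape * scale**2 = mean_isi**2 / k
    cv = np.sqrt(variance) / mean_isi  # simplifies to 1 / sqrt(k)
    return 1.0 / mean_isi, cv


def simulated_isi_stats(k, mean_isi, n_intervals, seed=0):
    """Monte Carlo estimate of the same quantities from sampled i.i.d. intervals."""
    rng = np.random.default_rng(seed)
    isis = rng.gamma(shape=k, scale=mean_isi / k, size=n_intervals)
    return 1.0 / isis.mean(), isis.std() / isis.mean()


comparison = {}
for k in (0.5, 1.0, 4.0):  # closed-form CV = 1.41, 1.00, 0.50
    _, exact_cv = gamma_isi_stats(k, mean_isi=0.1)
    _, sim_cv = simulated_isi_stats(k, mean_isi=0.1, n_intervals=50_000)
    comparison[k] = (exact_cv, sim_cv)
    print(f"k = {k}: closed-form CV = {exact_cv:.3f}, simulated CV = {sim_cv:.3f}")
```

Shapes below 1 give a CV above 1, meaning intervals more dispersed than those of a Poisson process. The closed form is instant to evaluate, whereas the estimate needs tens of thousands of sampled intervals; avoiding that kind of long stochastic simulation is the motivation the abstract gives for an analytical approach.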

One of the work’s reviewers noted that the theoretical analysis “provides key insight into biological computation and will be of interest to a broad audience of computational and experimental neuroscientists.”

About this neuroscience and AI research news

Author: Paul Logothetis

Source: University of Ottawa

Contact: Paul Logothetis – University of Ottawa

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Dendritic excitability controls overdispersion” by Richard Naud et al. Nature Computational Science

Abstract

Dendritic excitability controls overdispersion

The brain is an intricate assembly of intercommunicating neurons whose input–output function is only partially understood. The role of active dendrites in shaping spiking responses, in particular, is unclear.

Although existing models account for active dendrites and spiking responses, they are too complex to analyze analytically and demand long stochastic simulations. Here we combine cable and renewal theory to describe how input fluctuations shape the response of neuronal ensembles with active dendrites.

We found that dendritic input readily and potently controls interspike interval dispersion. This phenomenon can be understood by considering that neurons display three fundamental operating regimes: one mean-driven regime and two fluctuation-driven regimes. We show that these results are expected to appear for a wide range of dendritic properties and verify predictions of the model in experimental data.

These findings have implications for the role of interspike interval dispersion in learning and for theories of attractor states.