Summary: A new study reveals that the human brain synchronizes more accurately with a rhythm when it is heard as music than when it is felt through touch. When people tap along to sound, slow rhythmic brain waves align with the perceived beat, helping maintain steady timing.

However, with rhythmic vibration, the brain responds to each pulse individually, failing to generate the same beat-like neural patterns. These findings highlight why music’s rhythm is such a powerful auditory experience — and why touch alone can’t quite make us dance in time.

Key Facts:

- Auditory Advantage: The brain’s slow rhythmic activity locks onto the beat when music is heard but not when it’s felt through vibration.

- Less Precision Through Touch: People tapped less steadily when following tactile rhythms than when following auditory ones.

- Music and the Mind: Beat synchronization may be central to the social and emotional power of music.

Source: SfN

How do people keep the beat to music?

When people listen to songs, slow waves of brain activity track the perceived beat, which lets listeners tap their feet, nod their heads, or dance along.

In a new Journal of Neuroscience paper, researchers led by Cédric Lenoir, from Université catholique de Louvain (UCLouvain), explored whether this ability is unique to hearing or whether it also happens when rhythm is delivered by touch.

The researchers recorded brain activity as study volunteers tapped a finger to the beat of a rhythm delivered either as sound or as rhythmic vibration. With sound, the brain generated slow rhythmic fluctuations that matched the perceived beat, and people tapped along to the rhythm more steadily.

However, with touch, the brain mainly tracked each burst of vibrations one by one, without creating the same beat-like fluctuations, and people were less precise in the way they synchronized with the rhythm.
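One simple way to put a number on "tapping more steadily" is the variability of the gaps between taps. The sketch below is illustrative only: the article does not say how the researchers scored tapping, and the function names and tap times are invented for the example.

```python
from statistics import mean, pstdev


def inter_tap_intervals(tap_times):
    """Return the gaps (in seconds) between consecutive taps."""
    return [later - earlier for earlier, later in zip(tap_times, tap_times[1:])]


def tapping_variability(tap_times):
    """Coefficient of variation of the inter-tap intervals (lower = steadier)."""
    intervals = inter_tap_intervals(tap_times)
    if len(intervals) < 2:
        raise ValueError("need at least three taps")
    return pstdev(intervals) / mean(intervals)


# Hypothetical tap times in seconds, invented for illustration (not study data).
steady_taps = [0.00, 0.80, 1.61, 2.40, 3.20, 4.01]
uneven_taps = [0.00, 0.70, 1.65, 2.30, 3.35, 4.00]

print(tapping_variability(steady_taps))
print(tapping_variability(uneven_taps))
```

A lower value means steadier tapping, so the first printed number is smaller than the second.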

Says Lenoir, “The ability to move in time with a beat is essential for human social interactions through music. Future research will help clarify whether long-term music practice can strengthen the brain’s ability to process rhythm through other senses, or whether sensory loss, such as hearing impairment, might allow the sense of touch to take over part of this function.”

Key Questions Answered:

A: When we listen to music, slow neural oscillations in the brain align with the beat, allowing us to move rhythmically and stay in time.

A: Not quite. The brain responds to each vibration separately instead of forming an overall sense of beat, making synchronization less precise.

A: Understanding how different senses process rhythm could inform music therapy, hearing research, and sensory rehabilitation.

About this auditory neuroscience and tactile perception research news

Author: SfN Media

Source: SfN

Contact: SfN Media – SfN

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Behavior-relevant periodized neural representation of acoustic but not tactile rhythm in humans” by Cédric Lenoir et al. Journal of Neuroscience

Abstract

Music makes people move. This human propensity to coordinate movement with musical rhythm requires multiscale temporal integration, allowing fast sensory events composing rhythmic input to be mapped onto slower, behavior-relevant, internal templates such as periodic beats.

Relatedly, beat perception has been shown to involve an enhanced representation of the beat periodicities in neural activity.

However, the extent to which this ability to move to the beat, and the related periodized neural representation, are shared across the senses beyond audition remains unknown.

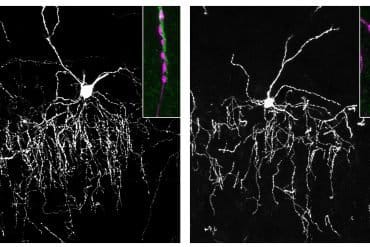

Here, we addressed this question by recording separately the electroencephalographic responses (EEG) and finger tapping to a rhythm conveyed either through acoustic or tactile inputs in healthy volunteers of either sex.

The EEG responses to the acoustic rhythm, spanning a low-frequency range (below 15 Hz), showed enhanced representation of the perceived periodic beat, compatible with behavior.

In contrast, the EEG responses to the tactile rhythm, spanning a broader frequency range (up to 25 Hz), did not show significant beat-related periodization, and yielded less stable tapping.

Together, these findings suggest a preferential role of low-frequency neural activity in supporting neural representation of the beat.

Most importantly, we show that this neural representation, as well as the ability to move to the beat, is not systematically shared across the senses.

More generally, these results, highlighting multimodal differences in beat processing, reveal a process of multiscale temporal integration that allows the auditory system to go beyond mere tracking of onset timing and to support higher-level internal representation and motor entrainment to rhythm.
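To make the abstract's contrast concrete, here is a toy sketch of what it means for a response to carry energy at the beat rate rather than only at the rate of individual events. It is not the authors' EEG pipeline: the sampling rate, the 1.25 Hz beat, the 5 Hz event rate, and the signal shapes are all assumptions chosen for illustration.

```python
import cmath
import math


def amplitude_at(signal, sample_rate, freq):
    """Amplitude of the sinusoidal component at `freq` (Hz), via a direct DFT."""
    n = len(signal)
    total = sum(
        x * cmath.exp(-2j * math.pi * freq * k / sample_rate)
        for k, x in enumerate(signal)
    )
    return 2 * abs(total) / n


SAMPLE_RATE = 100  # samples per second (assumed)
DURATION = 8       # seconds (assumed; holds a whole number of cycles of both rates)
BEAT_HZ = 1.25     # hypothetical beat rate
EVENT_HZ = 5.0     # hypothetical rate of individual sound or vibration events

times = [k / SAMPLE_RATE for k in range(SAMPLE_RATE * DURATION)]

# Toy response that only follows each individual event.
event_only = [math.sin(2 * math.pi * EVENT_HZ * t) for t in times]

# Toy response that also carries a slow fluctuation at the beat rate.
with_beat = [
    e + 0.8 * math.sin(2 * math.pi * BEAT_HZ * t)
    for e, t in zip(event_only, times)
]

for name, response in [("event_only", event_only), ("with_beat", with_beat)]:
    print(name, amplitude_at(response, SAMPLE_RATE, BEAT_HZ),
          amplitude_at(response, SAMPLE_RATE, EVENT_HZ))
```

Both toy responses show an amplitude of about 1.0 at the event rate, but only `with_beat` has a sizeable component (about 0.8) at the beat rate. That is the kind of difference the abstract describes between the acoustic and tactile conditions, here reduced to a cartoon.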