Summary: Researchers have developed a geometric deep learning approach to uncover shared brain activity patterns across individuals. The method, called MARBLE, learns dynamic motifs from neural recordings and identifies common strategies used by different brains to solve the same task.

Tested on macaques and rats, MARBLE accurately decoded neural activity linked to movement and navigation, outperforming other machine learning methods. The system works by mapping neural data into high-dimensional geometric spaces, enabling pattern recognition across individuals and conditions.

Key Facts:

- Geometric Deep Learning: MARBLE identifies shared brain activity patterns by mapping neural signals onto high-dimensional shapes.

- Cross-Subject Comparisons: The method successfully detected common neural motifs in different animals performing the same task.

- Applications in Brain-Machine Interfaces: By decoding brain activity into recognizable patterns, MARBLE could improve assistive robotics and neuroscience research.

Source: EPFL

In the parable of the blind men and the elephant, several blind men each describe a different part of an elephant they are touching – a sharp tusk, a flexible trunk, or a broad leg – and disagree about the animal’s true nature.

The story illustrates the problem of understanding an unseen, or latent, object based on incomplete individual perceptions.

Likewise, when researchers study brain dynamics based on recordings of a limited number of neurons, they must infer the latent patterns of brain dynamics that generate these recordings.

“Suppose you and I both engage in a mental task, such as navigating our way to work. Can signals from a small fraction of neurons tell us that we use the same or different mental strategies to solve the task?” says Pierre Vandergheynst, head of the Signal Processing Laboratory LTS2 in EPFL’s School of Engineering.

“This is a fundamental question for neuroscience, because experimentalists often record data from many animals, yet we have limited evidence as to whether they represent a given task using the same brain patterns.”

Vandergheynst and former postdoc Adam Gosztolai, now an assistant professor at the AI Institute of the Medical University of Vienna, have published a geometric deep learning approach in Nature Methods that can infer latent brain activity patterns across experimental subjects.

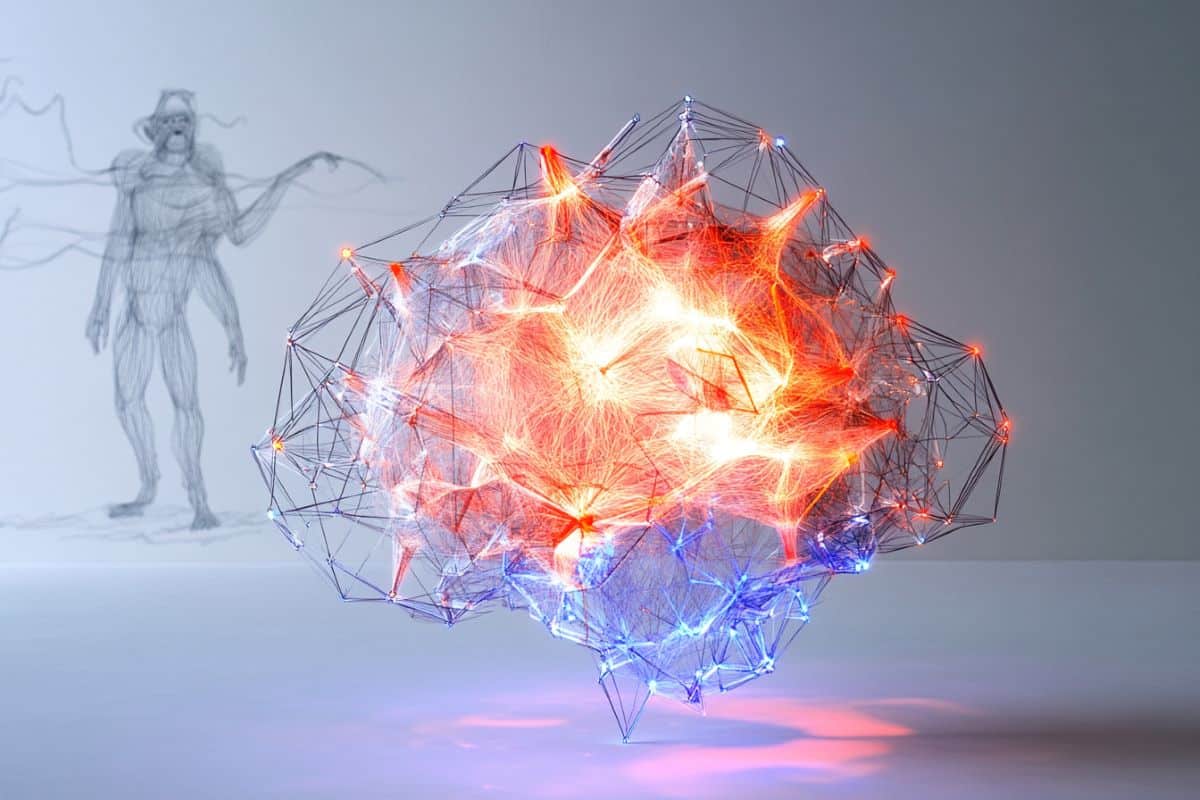

MARBLE (Manifold Representation Basis Learning) achieves this by breaking down electrical neural activity into dynamic patterns, or motifs, that are learnable by a geometric neural network.

In experiments on macaque and rat brain recordings, the scientists used MARBLE to show that when different animals used the same mental strategy to reach with an arm or navigate a maze, their brain dynamics were made up of the same motifs.

A geometric neural net for dynamic data

Traditional deep learning is poorly suited to understanding dynamical systems that change over time, such as firing neurons or flowing fluids.

These patterns of activity are so complex that they are best described as geometric objects in high-dimensional spaces. One example of such an object is a torus, which resembles a donut.
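To make the donut example concrete: a torus is a two-dimensional surface, because two angles are enough to locate any point on it, yet it sits inside three-dimensional space. The short sketch below is purely illustrative and not part of MARBLE; the function names `torus_point` and `on_torus` and the radii `R` and `r` are hypothetical choices. It maps pairs of angles to 3-D coordinates and checks that every result satisfies the torus equation. Neural population activity is pictured in a similar way: a few latent variables trace out a low-dimensional shape inside the much higher-dimensional space spanned by all recorded neurons.

```python
import math

def torus_point(theta: float, phi: float, R: float = 2.0, r: float = 1.0) -> tuple:
    """Map two angles (radians) to a point on a torus in 3-D space.

    R is the distance from the torus centre to the centre of the tube and
    r is the tube radius; both defaults are illustrative values.
    """
    ring = R + r * math.cos(phi)  # distance of the point from the z-axis
    return (ring * math.cos(theta), ring * math.sin(theta), r * math.sin(phi))

def on_torus(point: tuple, R: float = 2.0, r: float = 1.0, tol: float = 1e-9) -> bool:
    """Check the implicit torus equation (sqrt(x^2 + y^2) - R)^2 + z^2 = r^2."""
    x, y, z = point
    return abs((math.hypot(x, y) - R) ** 2 + z ** 2 - r ** 2) < tol

# Sample an 8 x 8 grid of angle pairs: two numbers in, three coordinates out.
samples = [
    torus_point(2 * math.pi * i / 8, 2 * math.pi * j / 8)
    for i in range(8)
    for j in range(8)
]
```

Every sample needs only two numbers to specify, even though it is stored as three coordinates; that gap between intrinsic and embedding dimension is what "low-dimensional manifold" refers to.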

As Gosztolai explains, MARBLE is unique because it learns from within curved spaces, which are natural mathematical spaces for complex patterns of neuronal activity.

“Inside the curved spaces, the geometric deep learning algorithm is unaware that these spaces are curved. Thus, the dynamic motifs it learns are independent of the shape of the space, meaning it can discover the same motifs from different recordings.”

The EPFL team tested MARBLE on recordings of the premotor cortex of macaques during a reaching task, and of the hippocampus of rats during a spatial navigation task.

They found that MARBLE’s representations of single-neuron population recordings were much more interpretable than those produced by other machine learning methods, and that MARBLE could decode brain activity into arm movements more accurately than other methods.

Moreover, because MARBLE is grounded in the mathematical theory of high-dimensional shapes, it was able to independently patch together brain activity recordings from different experimental conditions into a global structure. This gives it an edge over other methods, which must work with a user-defined global shape.

Brain-machine interfaces and beyond

In addition to furthering our understanding of the dynamics that underpin brain computations and behavior, MARBLE could be used to recognize the brain’s dynamic patterns during specific tasks, such as reaching, and to transform them into decodable representations that could then trigger an assistive robotic device.

The researchers also emphasize that MARBLE is a powerful tool that could be applied across scientific fields and datasets to compare dynamic phenomena.

“The MARBLE method is primarily aimed at helping neuroscience researchers understand how the brain computes across individuals or experimental conditions, and to uncover – when they exist – universal patterns,” Vandergheynst says.

“But its mathematical basis is by no means limited to brain signals, and we expect that our tool will benefit researchers in other fields of life and physical sciences who wish to jointly analyze multiple datasets.”

About this AI and neuroscience research news

Author: Celia Luterbacher

Contact: Celia Luterbacher – EPFL

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Interpretable statistical representations of neural population dynamics and geometry” by Pierre Vandergheynst et al. Nature Methods

Abstract

Interpretable statistical representations of neural population dynamics and geometry

The dynamics of neuron populations commonly evolve on low-dimensional manifolds. Thus, we need methods that learn the dynamical processes over neural manifolds to infer interpretable and consistent latent representations.

We introduce a representation learning method, MARBLE, which decomposes on-manifold dynamics into local flow fields and maps them into a common latent space using unsupervised geometric deep learning.
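The phrase "decomposes on-manifold dynamics into local flow fields" can be made concrete with a toy example. The sketch below is not MARBLE's implementation, which this article does not describe; it assumes that dynamics are sampled as a time series of population-activity vectors, estimates velocities by forward finite differences, and defines a "local flow field" simply as the (offset, velocity) pairs of an anchor point's k nearest samples. The function names, the brute-force neighbour search and the choice of k are all hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]

def finite_difference_velocities(trajectory: List[Vector], dt: float) -> List[Vector]:
    """Forward-difference velocity estimate for every sample except the last."""
    return [
        [(b - a) / dt for a, b in zip(x0, x1)]
        for x0, x1 in zip(trajectory[:-1], trajectory[1:])
    ]

def local_flow_fields(
    points: List[Vector], velocities: List[Vector], k: int
) -> List[List[Tuple[Vector, Vector]]]:
    """For each anchor point, return (offset, velocity) pairs of its k nearest samples.

    The anchor itself is included (distance zero). Offsets are expressed
    relative to the anchor, so each field describes the flow around it.
    """
    fields = []
    for anchor in points:
        # Rank all samples by Euclidean distance to the anchor (brute force, O(n^2) overall).
        nearest = sorted(range(len(points)), key=lambda j: math.dist(anchor, points[j]))[:k]
        fields.append(
            [([p - a for p, a in zip(points[j], anchor)], velocities[j]) for j in nearest]
        )
    return fields

# Toy "neural" trajectory: a periodic orbit on the unit circle, sampled 100 times.
dt = 2 * math.pi / 100
trajectory = [[math.cos(i * dt), math.sin(i * dt)] for i in range(101)]
velocities = finite_difference_velocities(trajectory, dt)
fields = local_flow_fields(trajectory[:-1], velocities, k=3)
```

Each entry of `fields` is a small, position-independent description of how activity flows near one point. According to the abstract, MARBLE then maps such local descriptors into a common latent space using unsupervised geometric deep learning; that learning step is not reproduced here.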

In simulated nonlinear dynamical systems, recurrent neural networks and experimental single-neuron recordings from primates and rodents, we discover emergent low-dimensional latent representations that parametrize high-dimensional neural dynamics during gain modulation, decision-making and changes in the internal state.

These representations are consistent across neural networks and animals, enabling the robust comparison of cognitive computations.

Extensive benchmarking demonstrates state-of-the-art within- and across-animal decoding accuracy of MARBLE compared to current representation learning approaches, with minimal user input.

Our results suggest that a manifold structure provides a powerful inductive bias to develop decoding algorithms and assimilate data across experiments.