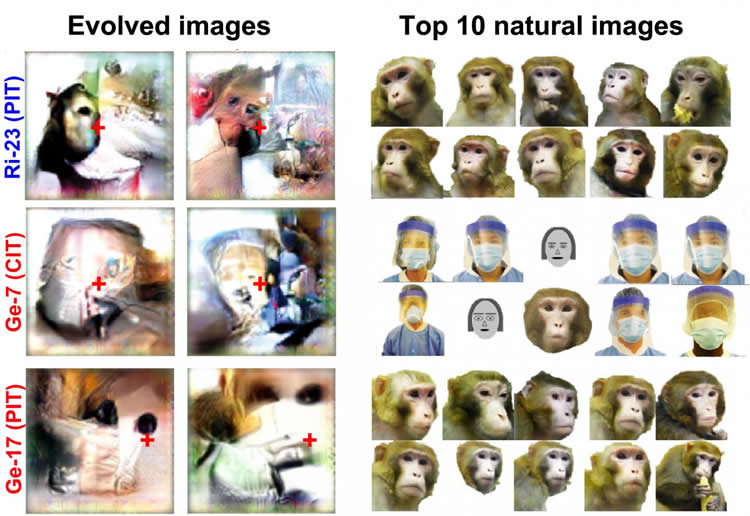

Summary: XDREAM, an algorithm that pairs a generative deep neural network with a genetic algorithm, uses the firing rates of neurons in the visual cortex to guide the evolution of novel, synthetic images. The evolved images activated neurons more strongly than large numbers of natural images did.

Source: Cell Press

To find out which sights specific neurons in monkeys “like” best, researchers designed an algorithm, called XDREAM, that generated images that made neurons fire more than any natural images the researchers tested. As the images evolved, they started to look like distorted versions of real-world stimuli. The work appears May 2 in the journal Cell.

“When given this tool, cells began to increase their firing rate beyond levels we have seen before, even with normal images pre-selected to elicit the highest firing rates,” explains co-first author Carlos Ponce, then a post-doctoral fellow in the laboratory of senior author Margaret Livingstone at Harvard Medical School and now a faculty member at Washington University in St. Louis.

“What started to emerge during each experiment were pictures that were reminiscent of shapes in the world but were not actual objects in the world,” he says. “We were seeing something that was more like the language cells use with each other.”

Researchers have long known that neurons in the visual cortex of primate brains respond to complex images, such as faces, and that most neurons are quite selective in their image preferences. Earlier studies of neuronal preference presented many natural images to see which ones made neurons fire most. However, this approach is limited because no experiment can present every possible image, so it cannot reveal exactly what will best stimulate a given cell.

The XDREAM algorithm uses the firing rate of a neuron to guide the evolution of a novel, synthetic image. Over the course of minutes, it presents a series of images, mutates and recombines them based on how strongly the neuron responds, and then shows a new series. At first, the images looked like noise, but gradually they changed into shapes that resembled faces or something recognizable in the animals’ environment, such as the food hopper in their room or familiar people wearing surgical scrubs. The algorithm was developed by Will Xiao in the laboratory of Gabriel Kreiman at Children’s Hospital and tested on real neurons at Harvard Medical School.
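To make the "show, score, mutate, recombine, repeat" loop concrete, here is a toy genetic algorithm in Python. It is a sketch under stated assumptions, not the XDREAM code: the real system uses a deep generative network and recordings from live neurons, while here `generate_image` is a trivial stand-in, `make_neuron` builds a hypothetical simulated neuron with a hidden preferred image, and every parameter value (latent size, population size, mutation rate) is illustrative only.

```python
import math
import random

LATENT_DIM = 16        # hypothetical; a real generator uses a much larger latent code
POP_SIZE = 20          # images "shown" per generation (illustrative value)
N_ELITE = 10           # top-scoring codes kept as parents
MUTATION_RATE = 0.1    # fraction of latent entries perturbed per child
MUTATION_SCALE = 0.5   # standard deviation of the Gaussian mutation noise


def generate_image(code):
    """Stand-in for a deep generative network (hypothetical): the 'image'
    is simply the latent code squashed into the range (-1, 1)."""
    return [math.tanh(x) for x in code]


def make_neuron(rng):
    """Hypothetical simulated neuron with a hidden preferred image. Its
    firing rate (spikes/s) falls off with distance from that preference."""
    preferred = [math.tanh(rng.gauss(0, 1)) for _ in range(LATENT_DIM)]

    def firing_rate(image):
        dist2 = sum((a - b) ** 2 for a, b in zip(image, preferred))
        return 100.0 * math.exp(-dist2 / LATENT_DIM)

    return firing_rate


def crossover(a, b, rng):
    """Uniform crossover: each latent entry is taken from one parent at random."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def mutate(code, rng):
    """Add Gaussian noise to a random subset of latent entries."""
    return [x + rng.gauss(0, MUTATION_SCALE) if rng.random() < MUTATION_RATE else x
            for x in code]


def evolve(firing_rate, n_generations, rng):
    """Return the best firing rate observed in each generation."""
    population = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)]
                  for _ in range(POP_SIZE)]
    best_per_generation = []
    for _ in range(n_generations):
        # "Show" every image and rank codes by the neuron's response.
        scored = sorted(population,
                        key=lambda c: firing_rate(generate_image(c)),
                        reverse=True)
        best_per_generation.append(firing_rate(generate_image(scored[0])))
        parents = scored[:N_ELITE]
        # Carry the best code over unchanged; fill the rest with offspring.
        children = [scored[0]]
        while len(children) < POP_SIZE:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    return best_per_generation


if __name__ == "__main__":
    rng = random.Random(0)
    neuron = make_neuron(rng)
    history = evolve(neuron, n_generations=100, rng=rng)
    print(f"generation 1 best: {history[0]:.1f} spikes/s")
    print(f"generation 100 best: {history[-1]:.1f} spikes/s")
```

Because the best code is copied unchanged into each new generation and this simulated neuron responds deterministically, the best response in the sketch can never drop between generations. Real neurons respond noisily from trial to trial, so an experiment on live cells cannot rely on that guarantee; this sketch ignores that complication.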

“The big advantage of this approach is that it allows the neuron to build its own preferred images from scratch, using a tool that is not limited by much, that can create anything in the world or even things that don’t exist in the world,” says Ponce.

“In this way we have evolved a super-stimulus that drives the cell better than any natural stimulus we could guess at,” says Livingstone. “This approach allows you to use artificial intelligence to figure out what triggers neurons best. It’s a totally unbiased way of asking the cell what it really wants, what would make it fire the most.”

The researchers believe the study shows that the brain learns to abstract statistically relevant features of its world. “We are seeing that the brain is analyzing the visual scene, and driven by experience, extracting information that is important to the individual over time,” says Ponce. “The brain is adapting to its environment and encoding ecologically significant information in unpredictable ways.”

The team believes the approach could be applied to any neuron in the brain that responds to sensory information, including auditory neurons, hippocampal neurons, and neurons in the prefrontal cortex, where memories can be accessed. “This is important because as artificial intelligence researchers develop models that work as well as the brain does – or even better – we will still need to understand which networks are more likely to behave safely and further human goals,” Ponce says. “More efficient AI can be grounded by knowledge of how the brain works.”

Funding: This work was supported by grants from the National Institutes of Health and the National Science Foundation.


Media Contacts:

Carly Britton – Cell Press

Image Source:

The images are credited to Ponce, Xiao, and Schade et al./Cell.

Original Research: Open access

“Evolving Images for Visual Neurons Using a Deep Generative Network Reveals Coding Principles and Neuronal Preferences”. Carlos R. Ponce, Will Xiao, Peter F. Schade, Till S. Hartmann, Gabriel Kreiman, Margaret S. Livingstone. Cell. doi:10.1016/j.cell.2019.04.005

Abstract


Highlights

• A generative deep neural network and a genetic algorithm evolved images guided by neuronal firing

• Evolved images maximized neuronal firing in alert macaque visual cortex

• Evolved images activated neurons more than large numbers of natural images

• Similarity to evolved images predicts response of neurons to novel images

Summary

What specific features should visual neurons encode, given the infinity of real-world images and the limited number of neurons available to represent them? We investigated neuronal selectivity in monkey inferotemporal cortex via the vast hypothesis space of a generative deep neural network, avoiding assumptions about features or semantic categories. A genetic algorithm searched this space for stimuli that maximized neuronal firing. This led to the evolution of rich synthetic images of objects with complex combinations of shapes, colors, and textures, sometimes resembling animals or familiar people, other times revealing novel patterns that did not map to any clear semantic category. These results expand our conception of the dictionary of features encoded in the cortex, and the approach can potentially reveal the internal representations of any system whose input can be captured by a generative model.