Summary: Using EEG technology, researchers have discovered a specific brain signal that helps us to understand what we hear in conversation.

Source: TCD.

Neuroscientists from Trinity College Dublin and the University of Rochester have identified a specific brain signal associated with the conversion of speech into understanding. The signal is present when the listener has understood what they have heard, but it is absent when they either did not understand, or weren’t paying attention.

The uniqueness of the signal means that it could have a number of potential applications, such as tracking language development in infants, assessing brain function in unresponsive patients, or determining the early onset of dementia in older persons.

During our everyday interactions, we routinely speak at rates of 120–200 words per minute. For listeners to understand speech at these rates – and not lose track of the conversation – their brains must comprehend the meaning of each of these words very rapidly. It is an amazing feat of the human brain that we do this so easily – especially given that the meaning of words can vary greatly depending on the context. For example, the word “bat” means very different things in the following two sentences: “I saw a bat flying overhead last night”; “The baseball player hit a home run with his favourite bat.”

However, precisely how our brains compute the meaning of words in context has, until now, remained unclear. The new approach, published today in the international journal Current Biology, shows that our brains perform a rapid computation of the similarity in meaning that each word has to the words that have come immediately before it.

To discover this, the researchers began by exploiting state-of-the-art techniques that allow modern computers and smartphones to “understand” speech. These techniques are quite different to how humans operate. Human evolution has been such that babies come more or less hardwired to learn how to speak based on a relatively small number of speech examples. Computers on the other hand need a tremendous amount of training, but because they are fast, they can accomplish this training very quickly. Thus, one can train a computer by giving it a lot of examples (e.g., all of Wikipedia) and by asking it to recognise which pairs of words appear together a lot and which don’t. By doing this, the computer begins to “understand” that words that appear together regularly, like “cake” and “pie”, must mean something similar. And, in fact, the computer ends up with a set of numerical measures capturing how similar any word is to any other.
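As a rough illustration of how counting word co-occurrences can yield numerical similarity measures, here is a minimal Python sketch. It is not the researchers' model: the tiny corpus, the window size and the function names `cooccurrence_vectors` and `cosine_similarity` are all assumptions made for this example. Each word is described by counts of the words that appear near it, and two words are compared by the cosine of the angle between their count vectors.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """For every word, count the words that appear within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical toy corpus; a real system would use millions of sentences.
corpus = [
    "she baked a cake for dessert",
    "she baked a pie for dessert",
    "he drove a car to work",
    "he drove a truck to work",
]
vectors = cooccurrence_vectors(corpus)
print("cake vs pie:", cosine_similarity(vectors["cake"], vectors["pie"]))
print("cake vs car:", cosine_similarity(vectors["cake"], vectors["car"]))
```

In this toy corpus “cake” and “pie” are surrounded by the same words, so they score as more similar to each other than “cake” and “car” do.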

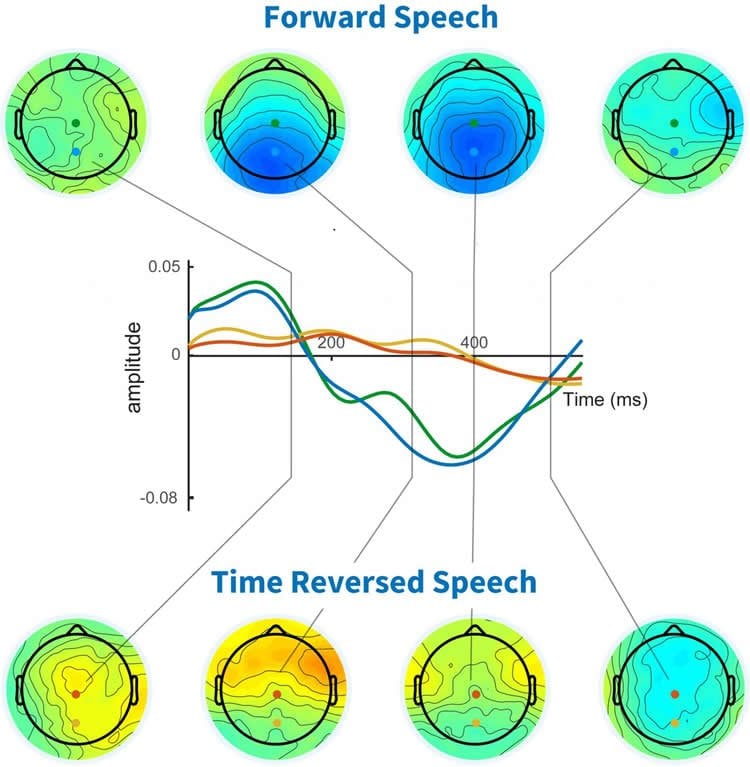

To test if human brains actually compute the similarity between words as we listen to speech, the researchers recorded electrical brainwave signals from the human scalp – a technique known as electroencephalography, or EEG – as participants listened to a number of audiobooks. Then, by analysing their brain activity, they identified a specific brain response that reflected how similar or different a given word was from the words that preceded it in the story.
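The idea of scoring each word by how different it is from the words before it can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the study's code. The three-dimensional word vectors are invented for the example, and the score used here (one minus the Pearson correlation between a word's vector and the average vector of the preceding words) is one plausible choice of measure.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def mean_vector(vectors):
    """Element-wise average of a list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def dissimilarity_to_context(words, embeddings):
    """Score each word as 1 - correlation with the mean vector of all preceding words.

    The first word has no preceding context, so its score is None.
    """
    scores = []
    for i, word in enumerate(words):
        if i == 0:
            scores.append((word, None))
            continue
        context = mean_vector([embeddings[w] for w in words[:i]])
        scores.append((word, 1.0 - pearson(embeddings[word], context)))
    return scores

# Hypothetical 3-dimensional vectors; real models use hundreds of dimensions.
embeddings = {
    "owl": [0.9, 0.1, 0.2],
    "bat": [0.8, 0.2, 0.1],
    "pitcher": [0.1, 0.9, 0.3],
}
print(dissimilarity_to_context(["owl", "bat"], embeddings))
print(dissimilarity_to_context(["owl", "pitcher"], embeddings))
```

With these made-up vectors, “bat” following “owl” gets a low score (it fits the context), while “pitcher” following “owl” gets a high one.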

Crucially, this signal disappeared completely when the subjects either could not understand the speech (because it was too noisy), or when they were just not paying attention to it. Thus, this signal represents an extremely sensitive measure of whether or not a person is truly understanding the speech they are hearing, and, as such, it has a number of potentially important applications.

Ussher Assistant Professor in Trinity College Dublin’s School of Engineering, Trinity College Institute of Neuroscience, and Trinity Centre for Bioengineering, Ed Lalor, led the research.

Professor Lalor said: “Potential applications include testing language development in infants, or determining the level of brain function in patients in a reduced state of consciousness. The presence or absence of the signal may also confirm if a person in a job that demands precision and speedy reactions – such as an air traffic controller or soldier – has understood the instructions they have received, and it may perhaps even be useful for testing for the onset of dementia in older people based on their ability to follow a conversation.”

“There is more work to be done before we understand the full range of computations that our brains perform when we understand speech. However, we have already begun searching for other ways that our brains might compute meaning, and how those computations differ from those performed by computers. We hope the new approach will make a real difference when applied in some of the ways we envision.”

Source: Thomas Deane – TCD

Publisher: Organized by NeuroscienceNews.com.

Image Source: NeuroscienceNews.com image is credited to Professor Ed Lalor.

Original Research: Open access research in Current Biology.

DOI:10.1016/j.cub.2018.01.080

TCD. “Brain Signal that Indicates Whether Speech Has Been Understood Discovered.” NeuroscienceNews, 22 February 2018. https://neurosciencenews.com/speech-understanding-brain-signal-8545/

Abstract

Electrophysiological Correlates of Semantic Dissimilarity Reflect the Comprehension of Natural, Narrative Speech

Highlights

• EEG reflects semantic processing of continuous natural speech

• Mapping function of semantic features to neural response shares traits with the N400

• Computational language models capture neural-related measures of semantic dissimilarity

• Index of semantic processing is sensitive to attention and speech intelligibility

Summary

People routinely hear and understand speech at rates of 120–200 words per minute. Thus, speech comprehension must involve rapid, online neural mechanisms that process words’ meanings in an approximately time-locked fashion. However, electrophysiological evidence for such time-locked processing has been lacking for continuous speech. Although valuable insights into semantic processing have been provided by the “N400 component” of the event-related potential, this literature has been dominated by paradigms using incongruous words within specially constructed sentences, with less emphasis on natural, narrative speech comprehension. Building on the discovery that cortical activity “tracks” the dynamics of running speech and psycholinguistic work demonstrating and modeling how context impacts on word processing, we describe a new approach for deriving an electrophysiological correlate of natural speech comprehension. We used a computational model to quantify the meaning carried by words based on how semantically dissimilar they were to their preceding context and then regressed this measure against electroencephalographic (EEG) data recorded from subjects as they listened to narrative speech. This produced a prominent negativity at a time lag of 200–600 ms on centro-parietal EEG channels, characteristics common to the N400. Applying this approach to EEG datasets involving time-reversed speech, cocktail party attention, and audiovisual speech-in-noise demonstrated that this response was very sensitive to whether or not subjects understood the speech they heard. These findings demonstrate that, when successfully comprehending natural speech, the human brain responds to the contextual semantic content of each word in a relatively time-locked fashion.
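The regression step described in the summary can be illustrated with a small Python sketch on synthetic data. Everything here is an assumption made for the example rather than the authors' pipeline: the function names, the ridge penalty, the simulated "EEG" and the hypothetical response shape `true_kernel`. The stimulus is zero except at word onsets, where it takes the word's dissimilarity value. The regression then estimates one weight per time lag, so a negative deflection at some lag after word onset shows up as negative weights at that lag. With real recordings, lags would be expressed in milliseconds using the EEG sampling rate.

```python
import random

def lagged_design(stimulus, n_lags):
    """Row t holds stimulus[t], stimulus[t-1], ..., zero-padded before the start."""
    return [[stimulus[t - k] if t - k >= 0 else 0.0 for k in range(n_lags)]
            for t in range(len(stimulus))]

def convolve(stimulus, kernel):
    """Synthetic response: each sample is a weighted sum of recent stimulus values."""
    return [sum(w * x for w, x in zip(kernel, row))
            for row in lagged_design(stimulus, len(kernel))]

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_lagged_regression(stimulus, eeg, n_lags, ridge=1e-3):
    """Ridge regression of EEG on time-lagged stimulus: (X'X + ridge*I) w = X'y."""
    X = lagged_design(stimulus, n_lags)
    xtx = [[sum(row[i] * row[j] for row in X) + (ridge if i == j else 0.0)
            for j in range(n_lags)] for i in range(n_lags)]
    xty = [sum(row[i] * y for row, y in zip(X, eeg)) for i in range(n_lags)]
    return solve(xtx, xty)

# Synthetic demo: sparse "word onsets" scaled by a made-up dissimilarity value.
rng = random.Random(0)
true_kernel = [0.0, -0.2, -0.6, -1.0, -0.6, -0.2]  # hypothetical dip at lag 3
stimulus = [rng.random() if rng.random() < 0.15 else 0.0 for _ in range(500)]
eeg = [y + rng.gauss(0.0, 0.1) for y in convolve(stimulus, true_kernel)]
weights = fit_lagged_regression(stimulus, eeg, n_lags=len(true_kernel))
print([round(w, 2) for w in weights])
```

On this simulated signal the fitted weights should approximate the hypothetical kernel. Real EEG is far noisier, which is why some form of regularisation and long recordings are typically needed.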