Summary: Researchers have discovered that brain-to-brain coupling during conversation is significantly influenced by the context in which words are used, not just by linguistic information alone. Using high-resolution electrocorticography and GPT-2 language models, they found that specific brain activity in both speakers and listeners is driven by the context-specific meaning of words.

This study highlights the importance of context in aligning brain activity during interactions and offers new insights into how our brains process language. The findings pave the way for further exploration of brain coordination and communication.

Key Facts:

- Contextual Importance: Brain-to-brain coupling during conversation is largely driven by the context-specific meaning of words, enhancing communication.

- Brain Activity Timing: Word-specific brain activity occurs about 250 ms before speech in speakers and 250 ms after hearing in listeners, showing rapid processing.

- Advanced Modeling: The study used GPT-2 language models to accurately predict shared brain activity patterns, outperforming traditional linguistic models.

Source: Cell Press

When two people interact, their brain activity becomes synchronized, but it was unclear until now to what extent this “brain-to-brain coupling” is due to linguistic information or other factors, such as body language or tone of voice.

Researchers report August 2 in the journal Neuron that brain-to-brain coupling during conversation can be modeled by considering the words used during that conversation, and the context in which they are used.

“We can see linguistic content emerge word-by-word in the speaker’s brain before they actually articulate what they’re trying to say, and the same linguistic content rapidly reemerges in the listener’s brain after they hear it,” says first author and neuroscientist Zaid Zada of Princeton University.

To communicate verbally, we must agree on the definitions of different words, but these definitions can change depending on the context. For example, without context, it would be impossible to know whether the word “cold” refers to temperature, a personality trait, or a respiratory infection.

“The contextual meaning of words as they occur in a particular sentence, or in a particular conversation, is really important for the way that we understand each other,” says neuroscientist and co-senior author Samuel Nastase of Princeton University. “We wanted to test the importance of context in aligning brain activity between speaker and listener to try to quantify what is shared between brains during conversation.”

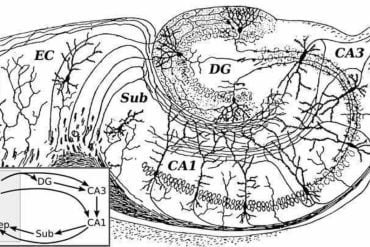

To examine the role of context in driving brain coupling, the team collected brain activity data and conversation transcripts from pairs of epilepsy patients during natural conversations.

The patients were undergoing intracranial monitoring using electrocorticography for unrelated clinical purposes at the New York University School of Medicine Comprehensive Epilepsy Center. Compared to less invasive methods like fMRI, electrocorticography records brain activity at much higher resolution because electrodes are placed in direct contact with the surface of the brain.

Next, the researchers used the large language model GPT-2 to extract the context surrounding each of the words used in the conversations, and then used this information to train a model to predict how brain activity changes as information flows from speaker to listener during conversation.
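As a rough illustration of this kind of prediction model, the sketch below fits a ridge regression that maps per-word embedding vectors to the activity of a single electrode, then scores it on held-out words. It is not the authors' pipeline: the embeddings and neural signal are random synthetic stand-ins (real contextual embeddings would come from GPT-2), and the names `fit_ridge`, `embeddings`, and `neural`, along with the choice of ridge regression, are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumptions, not real data): 400 words, each with a
# 20-dimensional "contextual embedding", and one electrode value per word.
n_words, n_dims = 400, 20
embeddings = rng.standard_normal((n_words, n_dims))
true_weights = rng.standard_normal(n_dims)
neural = embeddings @ true_weights + 0.5 * rng.standard_normal(n_words)


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: w = (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


# Fit on the first 300 words and evaluate on the remaining 100.
train, test = slice(0, 300), slice(300, None)
weights = fit_ridge(embeddings[train], neural[train])
predicted = embeddings[test] @ weights

# Score the model by correlating predicted and actual held-out activity.
encoding_score = np.corrcoef(predicted, neural[test])[0, 1]
print(f"held-out correlation: {encoding_score:.2f}")
```

Scoring on words the model never saw during fitting is what makes the correlation a measure of prediction rather than of memorization.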

Using the model, the researchers were able to observe brain activity associated with the context-specific meaning of words in the brains of both speaker and listener. They showed that word-specific brain activity peaked in the speaker’s brain around 250 ms before they spoke each word, and corresponding spikes in brain activity associated with the same words appeared in the listener’s brain approximately 250 ms after they heard them.
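One way to picture how such timing peaks can be located is a lag analysis: correlate a per-word feature with the neural signal sampled at a range of offsets around word onset, and see where the correlation is highest. The sketch below does this on simulated signals. The 250 ms offsets are built into the simulation, so it only shows how the analysis recovers a known lag and is not evidence for the finding. The 50 ms bin size, the helper names `simulate` and `lag_profile`, and all other parameters are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

BIN_MS = 50                      # assumed time resolution: 50 ms per bin
n_words, spacing = 300, 20       # one word per second (20 bins apart)
onsets = 40 + spacing * np.arange(n_words)   # word onset times, in bins
n_bins = int(onsets[-1]) + 40
word_feature = rng.standard_normal(n_words)  # stand-in for one embedding dimension


def simulate(peak_lag_bins, noise_sd=0.3):
    """Noisy signal with a word-scaled bump at onset + peak_lag_bins."""
    t = np.arange(n_bins)
    signal = noise_sd * rng.standard_normal(n_bins)
    for onset, feature in zip(onsets, word_feature):
        signal += feature * np.exp(-0.5 * (t - (onset + peak_lag_bins)) ** 2)
    return signal


def lag_profile(signal, lags):
    """Correlation between the word feature and the signal at each lag."""
    return [np.corrcoef(word_feature, signal[onsets + lag])[0, 1] for lag in lags]


lags = np.arange(-10, 11)        # -500 ms to +500 ms around word onset
speaker = simulate(-5)           # simulated: activity leads the word by 5 bins
listener = simulate(+5)          # simulated: activity follows the word by 5 bins

speaker_peak_ms = int(lags[np.argmax(lag_profile(speaker, lags))]) * BIN_MS
listener_peak_ms = int(lags[np.argmax(lag_profile(listener, lags))]) * BIN_MS
print(speaker_peak_ms, listener_peak_ms)
```

Negative lags correspond to activity before word onset and positive lags to activity after it, matching the speaker-before, listener-after pattern described above.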

Compared to previous work on speaker–listener brain coupling, the team’s context-based model was better able to predict shared patterns in brain activity.

“This shows just how important context is, because it best explains the brain data,” says Zada.

“Large language models take all these different elements of linguistics like syntax and semantics and represent them in a single high-dimensional vector. We show that this type of unified model is able to outperform other hand-engineered models from linguistics.”

In the future, the researchers plan to expand on their study by applying the model to other types of brain activity data, for example fMRI data, to investigate how parts of the brain not accessible with electrocorticography operate during conversations.

“There’s a lot of exciting future work to be done looking at how different brain areas coordinate with each other at different timescales and with different kinds of content,” says Nastase.

Funding:

This research was supported by the National Institutes of Health.

About this language and neuroscience research news

Author: Kristopher Benke

Contact: Kristopher Benke – Cell Press

Image: The image is credited to Neuroscience News

Original Research: Open access.

“A shared model-based linguistic space for transmitting our thoughts from brain to brain in natural conversations” by Zaid Zada et al. Neuron

Abstract

A shared model-based linguistic space for transmitting our thoughts from brain to brain in natural conversations

Highlights

- We acquired intracranial recordings in five dyads during face-to-face conversations

- Large language models can serve as a shared linguistic space for communication

- Context-sensitive embeddings track the exchange of information from brain to brain

- Contextual embeddings outperform other models for speaker-listener coupling

Summary

Effective communication hinges on a mutual understanding of word meaning in different contexts. We recorded brain activity using electrocorticography during spontaneous, face-to-face conversations in five pairs of epilepsy patients.

We developed a model-based coupling framework that aligns brain activity in both speaker and listener to a shared embedding space from a large language model (LLM). The context-sensitive LLM embeddings allow us to track the exchange of linguistic information, word by word, from one brain to another in natural conversations.

Linguistic content emerges in the speaker’s brain before word articulation and rapidly re-emerges in the listener’s brain after word articulation. The contextual embeddings better capture word-by-word neural alignment between speaker and listener than syntactic and articulatory models.

Our findings indicate that the contextual embeddings learned by LLMs can serve as an explicit numerical model of the shared, context-rich meaning space humans use to communicate their thoughts to one another.