Summary: New research reveals that neurons in the visual cortex are far more adaptable than previously thought, responding dynamically to complex stimuli during object recognition tasks. While visual processing has traditionally been viewed as mostly feedforward, this study shows that feedback from higher brain areas conveys prior knowledge and behavioral context to shape perception.

This “top-down” information allows early visual neurons to shift their responsiveness moment by moment depending on the task at hand. These findings challenge classical models of visual processing and may have implications for understanding perception and brain disorders like autism.

Key Facts:

- Dynamic Neurons: Early visual neurons adapt rapidly based on task demands and prior experiences.

- Top-Down Feedback: Higher brain regions send contextual information to lower visual areas, guiding perception.

- Implications for Autism: Understanding feedback mechanisms may shed light on perceptual differences in autism.

Source: Rockefeller University

Our brains begin to create internal representations of the world around us from the first moment we open our eyes. We perceptually assemble components of scenes into recognizable objects thanks to neurons in the visual cortex.

This process occurs along the ventral visual cortical pathway, which extends from the primary visual cortex at the back of the brain to the temporal lobes.

It’s long been thought that specific neurons along this pathway handle specific types of information depending on where they are located, and that the dominant flow of visual information is feedforward, up a hierarchy of visual cortical areas.

Although the reverse direction of cortical connections, often referred to as feedback, has long been known to exist, its functional role has been little understood.

Ongoing research from the lab of Rockefeller University’s Charles D. Gilbert is revealing an important role for feedback along the visual pathway.

As his team demonstrates in a paper recently published in PNAS, this countercurrent stream carries so-called “top-down” information, informed by our prior encounters with an object, across cortical areas.

One consequence of this flow is that neurons in this pathway aren’t fixed in their responsiveness but can adapt moment to moment to the information they’re receiving.

“Even at the first stages of object perception, the neurons are sensitive to much more complex visual stimuli than had previously been believed, and that capability is informed by feedback from higher cortical areas,” says Gilbert, head of the Laboratory of Neurobiology.

A different flow

Gilbert’s lab has investigated fundamental aspects of how information is represented in the brain for many years, primarily by studying the circuitry underlying visual perception and perceptual learning in the visual cortex.

“The classical view of this pathway proposes that neurons at its beginning can only perceive simple information such as a line segment, and that complexity increases the farther up the hierarchy you go until you reach the neurons that will only respond to a specific level of complexity,” he says.

Previous findings from his lab indicate that this view may be incorrect. His group has, for example, found that the visual cortex is capable of altering its functional properties and circuitry, a quality known as plasticity.

And in work done with his Rockefeller colleague (and Nobel Prize winner) Torsten N. Wiesel, Gilbert discovered long-range horizontal connections along cortical circuits, which enable neurons to link bits of information over much larger areas of the visual field than had been thought.

He’s also documented that neurons can switch their inputs between those that are task relevant and those that are task irrelevant, underscoring the dexterity of their functional properties.

“For the current study, we were trying to establish that these capabilities are part of our normal process of object recognition,” he says.

Seeing is understanding

Gilbert’s lab spent several years studying a pair of macaques that had been trained in object recognition using images of a variety of objects that may or may not have been familiar to the animals, such as fruits, vegetables, tools, and machines.

As the animals learned to recognize these objects, the researchers monitored their brain activity using fMRI to identify which regions responded to the visual stimuli. (This method was pioneered by Gilbert’s Rockefeller colleague Winrich Freiwald, who has used it to identify regions of the brain that are responsive to faces.)

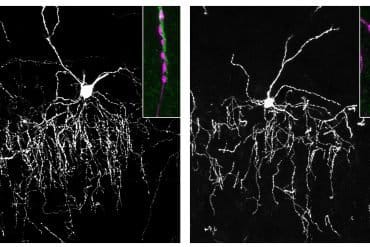

They then implanted electrode arrays, which enabled them to record the activity of individual nerve cells as the animals were shown images of the objects they’d been trained to recognize.

Sometimes the animals were shown the full object, and other times a partial or tightly cropped image. They were then shown a variety of visual stimuli and indicated whether or not they found a match to the original object.

“These are called delayed match-to-sample tasks because there is a delay between when they see an object cue and when they are shown a second object or object component, to which they are trained to report whether the second image corresponds to the initial cue,” Gilbert says.

“While they’re looking through all the visual stimuli to find a match, they have to use their working memory to keep in mind the original image.”

Adaptive processing

The researchers found that, across a range of visual targets, a single neuron may be more responsive to one target after one cue and more responsive to a different target after another cue.

“We learned these neurons are adaptive processors that change on a moment-to-moment basis, taking on different functions that are appropriate for the immediate behavioral context,” Gilbert says.

They also demonstrated that the neurons found at the beginning of the pathway, thought to be limited to responding to simple visual information, were not actually so constrained in their abilities.

“These neurons are sensitive to much more complex visual stimuli than had previously been believed,” he says.

“There doesn’t seem to be as large of a difference in terms of the degree of complexity represented in the early cortical areas relative to the higher cortical areas as previously thought.”

These findings bolster what Gilbert believes is a novel view of cortical processing: that adult neurons do not have fixed functional properties but are instead dynamically tuned, changing their specificities with varying sensory experience.

Observation of cortical activity also revealed a potential functional role of reciprocal feedback connections in object recognition, where the flow of information from higher cortical areas to these lower ones contributes to their dynamic capabilities.

“We discovered that these so-called ‘top-down’ feedback connections convey information from areas of the visual cortex that represent previously stored information about the nature and identity of objects, which is acquired through experience and behavioral context,” he says.

“In a sense, the higher-order cortical areas send an instruction to the lower areas to perform a particular calculation, and the return signal—the feedforward signal—is the result of that calculation.

“These interactions are likely to be operating continually as we recognize an object and, more broadly speaking, make visual sense of our surroundings.”

Autism research applications

The findings are part of an increasing recognition of the importance and prevalence of feedback information flow in the visual cortex—and perhaps far beyond.

“I would argue that top-down interactions are central to all brain functions, including other senses, motor control, and higher-order cognitive functions, so understanding the cellular and circuit basis for these interactions could expand our understanding of the mechanisms underlying brain disorders,” Gilbert says.

To that end, his lab is beginning to investigate animal models of autism at both the behavioral and imaging levels. Will Snyder, a research specialist in Gilbert’s lab, will study perceptual differences between autism-model mice and their wild-type littermates.

In conjunction, the lab will observe large neuronal populations in the animals’ brains as they engage in natural behaviors using the highly advanced neuroimaging technologies in the Elizabeth R. Miller Brain Observatory, an interdisciplinary research center located on Rockefeller’s campus.

“Our goal is to see if we can identify any perceptual differences between these two groups and the operation of cortical circuits that may underlie these differences,” Gilbert says.

About this visual neuroscience research news

Author: Katherine Fenz

Source: Rockefeller University

Contact: Katherine Fenz – Rockefeller University

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Expectation-dependent stimulus selectivity in the ventral visual cortical pathway” by Charles D. Gilbert et al. PNAS

Abstract

Expectation-dependent stimulus selectivity in the ventral visual cortical pathway

The hierarchical view of the ventral object recognition pathway is primarily based on feedforward mechanisms, starting from a fixed basis set of object primitives and ending on a representation of whole objects in the inferotemporal cortex.

Here, we provide a different view. Rather than being a fixed “labeled line” for a specific feature, neurons are continually changing their stimulus selectivities on a moment-to-moment basis, as dictated by top–down influences of object expectation and perceptual task.

Here, we also derive the selectivity for stimulus features from an ethologically curated stimulus set, based on a delayed match-to-sample task, that finds components that are informative for object recognition in addition to full objects, though the top–down effects were seen for both informative and uninformative components.

Cortical areas responding to these stimuli were identified with functional MRI in order to guide placement of chronically implanted electrode arrays.