Summary: Neuroscientists have uncovered how a small network of neurons in fruit flies accurately maintains an internal compass, defying previous theories that required large networks for precision.

This new understanding reveals that small networks, when carefully connected, can perform complex tasks like tracking spatial orientation. The study changes how scientists think about brain functions, such as memory and decision-making, suggesting small brain systems are more powerful than once believed.

Key Facts:

- Fruit flies use a tiny network of neurons to maintain an accurate internal compass.

- Smaller networks can perform complex computations, but they require precise connections.

- This discovery reshapes our understanding of how the brain handles navigation and decision-making.

Source: HHMI

Neuroscientists had a problem.

For decades, researchers had a theory about how an animal’s brain keeps track of where it is relative to its surroundings without outside cues – like how we know where we are, even with our eyes closed.

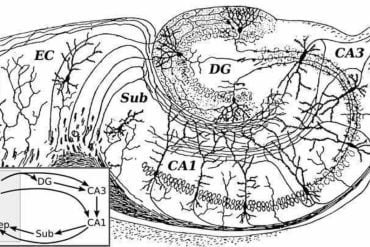

According to the theory, which was based on brain recordings from rodents, networks of neurons called ring attractor networks maintain an internal compass that keeps track of where you are in the world.

An accurate internal compass was thought to require a large network with many neurons, while a small network with few neurons would cause the compass’s needle to drift, creating errors.

Then researchers discovered an internal compass in the tiny fruit fly.

“The fly’s compass is very accurate, but it’s built from a really small network, contrary to what previous theories assumed,” says Janelia Group Leader Ann Hermundstad. “So, there was clearly a gap in our understanding of brain compasses.”

Now, research led by Marcella Noorman, a postdoc in the Hermundstad Lab at HHMI’s Janelia Research Campus, explains this conundrum. The new theory shows how it is possible to create a perfectly accurate internal compass with a very small network, like in fruit flies.

The work changes the way neuroscientists think about how the brain carries out many tasks, from working memory to navigation to decision-making.

“This really expands our knowledge of what small networks can do,” Noorman says. “They actually can do a lot more complicated computations than previously known.”

Generating a ring attractor

When Noorman arrived at Janelia in 2019, she was presented with the problem Hermundstad and others had been puzzling over: How could the fruit fly’s small brain generate an accurate internal compass?

Noorman first set out to show that you couldn’t generate a ring attractor with a small network of neurons, but that you needed to add “extra stuff” – like other cell types and more detailed biophysical properties of the cells – to get it to work.

To do that, she stripped away all the “extra stuff” from existing models, to see if she could generate a ring attractor with what was left over. She thought this wouldn’t be possible.

But Noorman struggled to prove her hypothesis. That’s when she decided she needed a different approach.

“I had to flip my mindset and think, well, maybe it’s because you can generate a ring attractor with a small network,” she says, “and then figure out what specific conditions the network has to satisfy to make that happen.”

By changing her assumption, Noorman discovered that, in fact, it is possible to generate a ring attractor with as few as four neurons, as long as the connections between them are carefully adjusted. Noorman worked with other researchers at Janelia to test the new theory in the lab, finding physiological evidence that the fly brain can generate a ring attractor.

“Smaller networks and smaller brains can perform more complicated computations than we previously thought,” Noorman says. “But, to do so, the neurons have to be connected much more precisely than they would otherwise need to be in a larger brain where you can use a lot of neurons to perform the same computation.”

“So there’s a trade-off between how many neurons you use for this computation and how carefully you have to connect them,” she says.
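The trade-off Noorman describes can be illustrated with a toy simulation. The sketch below is not the model from the study: it assumes four threshold-linear neurons with cosine-shaped connections, and the names `decode`, `heading_drift`, `J_I`, `B`, and `j_e`, along with every numerical value, are illustrative choices. In this toy, `j_e = 4` is the one excitation strength at which the difference between two neighboring active neurons neither grows nor decays, so any heading can be held. A nearby value such as `j_e = 3` lets the activity bump slide toward a preferred position.

```python
import numpy as np

N = 4                                   # number of neurons on the ring
THETA = 2 * np.pi * np.arange(N) / N    # preferred headings: 0, 90, 180, 270 degrees
J_I = -4.0                              # uniform inhibition (assumed value)
B = 1.0                                 # constant feedforward drive (assumed value)


def decode(h):
    """Population-vector readout of the heading carried by the active neurons."""
    r = np.maximum(h, 0.0)              # threshold-linear firing rates
    return np.arctan2(r @ np.sin(THETA), r @ np.cos(THETA))


def heading_drift(j_e, psi0, t_total=40.0, dt=0.05):
    """Euler-integrate dh/dt = -h + W relu(h) + B (time in units of the
    neural time constant) and return the signed change in decoded
    heading, in radians, between the start and the end of the run."""
    # Cosine connectivity: uniform inhibition plus heading-tuned excitation.
    w = (J_I + j_e * np.cos(THETA[:, None] - THETA[None, :])) / N
    # Start from a bump pointing at psi0, scaled so the active rates sum
    # to -4*B/J_I, the bump size this toy network settles to when j_e = 4.
    h = np.cos(THETA - psi0) / (abs(np.cos(psi0)) + abs(np.sin(psi0)))
    h = h * (-4.0 * B / J_I)
    start = decode(h)
    for _ in range(int(round(t_total / dt))):
        h = h + dt * (-h + w @ np.maximum(h, 0.0) + B)
    # Wrap the difference into (-pi, pi].
    return np.angle(np.exp(1j * (decode(h) - start)))


if __name__ == "__main__":
    for j_e in (4.0, 3.0):
        print(f"j_e = {j_e}: heading drift = {heading_drift(j_e, psi0=0.5):.4f} rad")
```

Under these assumptions, `heading_drift(4.0, 0.5)` should stay near zero, while `heading_drift(3.0, 0.5)` should show a clear positive drift as the bump settles at 45 degrees, midway between two neurons. The tuned case works only because `j_e` is set exactly, which mirrors the precision requirement described in the quotes above.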

Next, the researchers plan to explore whether the “extra stuff” might provide additional robustness to the ring attractor network, and whether the base computation could serve as a building block for more complicated computations in bigger networks with multiple variables.

Additional experiments could also help researchers understand how the connections between neurons in the network are adjusted and how sensory cues might impact the network’s representation of head direction.

For Noorman, a mathematician turned neuroscientist, it has been challenging but fun to figure out how to translate biology into a math problem that can be solved.

“The fly’s head direction system is the first example of neural activity that I’d ever seen, so it’s been fun to actually figure out and understand how that works,” she says.

About this neuroscience research news

Author: Nanci Bompey

Source: HHMI

Contact: Nanci Bompey – HHMI

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Maintaining and updating accurate internal representations of continuous variables with a handful of neurons” by Ann Hermundstad et al. Nature Neuroscience

Abstract

Many animals rely on persistent internal representations of continuous variables for working memory, navigation, and motor control. Existing theories typically assume that large networks of neurons are required to maintain such representations accurately; networks with few neurons are thought to generate discrete representations.

However, analysis of two-photon calcium imaging data from tethered flies walking in darkness suggests that their small head-direction system can maintain a surprisingly continuous and accurate representation.

We thus ask whether it is possible for a small network to generate a continuous, rather than discrete, representation of such a variable. We show analytically that even very small networks can be tuned to maintain continuous internal representations, but this comes at the cost of sensitivity to noise and variations in tuning.

This work expands the computational repertoire of small networks, and raises the possibility that larger networks could represent more and higher-dimensional variables than previously thought.