Summary: Researchers are investigating how the brain combines visual and auditory cues to improve speech comprehension in noisy environments. The study focuses on how visual information, like lip movements, enhances the brain’s ability to differentiate similar sounds, such as “F” and “S.”

Using EEG caps to monitor brainwaves, the team will study individuals with cochlear implants to understand how auditory and visual information integrate, particularly in those implanted later in life.

This research aims to uncover how developmental stages influence reliance on visual cues and could lead to advanced assistive technologies. Insights into this process may also improve speech perception strategies for those who are deaf or hard of hearing.

Key Facts:

- Multisensory Integration: Visual cues, like lip movements, enhance auditory processing in noisy environments.

- Cochlear Implant Focus: Researchers are studying how implant timing affects the brain’s reliance on visual information.

- Tech Advancements: Findings may inform better technologies for those with hearing impairments.

Source: University of Rochester

In a loud, crowded room, how does the human brain use visual speech cues to augment muddled audio and help the listener better understand what a speaker is saying?

While most people know intuitively to look at a speaker’s lip movements and gestures to help fill in the gaps in speech comprehension, scientists do not yet know how that process works physiologically.

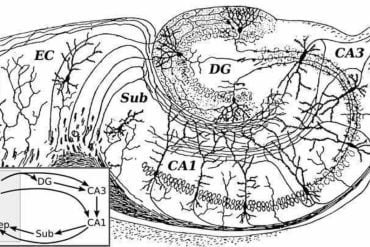

“Your visual cortex is at the back of your brain and your auditory cortex is on the temporal lobes,” says Edmund Lalor, an associate professor of biomedical engineering and of neuroscience at the University of Rochester.

“How that information merges together in the brain is not very well understood.”

Scientists have been chipping away at the problem, using noninvasive electroencephalography (EEG) brainwave measurements to study how people respond to basic sounds such as beeps, clicks, or simple syllables.

Lalor and his team of researchers have made progress by exploring how the specific shape of the articulators, such as the lips and the tongue against the teeth, helps a listener determine whether somebody is saying “F” or “S,” or “P” or “D,” which in a noisy environment can sound similar.

Now Lalor wants to take the research a step further and explore the problem in more naturalistic, continuous, multisensory speech.

The National Institutes of Health (NIH) is providing him an estimated $2.3 million over the next five years to pursue that research. The project builds on a previous NIH R01 grant and was originally started with seed funding from the University’s Del Monte Institute for Neuroscience.

To study the phenomenon, Lalor’s team will monitor the brainwaves of people for whom the auditory system is especially noisy: people who are deaf or hard of hearing and who use cochlear implants.

The researchers aim to recruit 250 participants with cochlear implants, who will be asked to watch and listen to multisensory speech while wearing EEG caps that will measure their brain responses.

“The big idea is that if people get cochlear implants implanted at age one, while maybe they’ve missed out on a year of auditory input, perhaps their audio system will still wire up in a way that is fairly similar to a hearing individual,” says Lalor.

“However, people who get implanted later, say at age 12, have missed out on critical periods of development for their auditory system.

“As such, we hypothesize that they may use visual information they get from a speaker’s face differently or more, in some sense, because they need to rely on it more heavily to fill in information.”

Lalor is partnering on the study with co-principal investigator Professor Matthew Dye, who directs Rochester Institute of Technology’s doctoral program in cognitive science and the National Technical Institute for the Deaf Sensory, Perceptual, and Cognitive Ecology Center, and also serves as an adjunct faculty member at the University of Rochester Medical Center.

Lalor says one of the biggest challenges is that the EEG cap, which measures the electrical activity of the brain through the scalp, collects a mixture of signals coming from many different sources.

Measuring EEG signals in individuals with cochlear implants complicates the process further because the implants also generate electrical activity that obscures EEG readings.

“It will require some heavy lifting on the engineering side, but we have great students here at Rochester who can help us use signal processing, engineering analysis, and computational modeling to examine these data in a different way that makes it feasible for us to use,” says Lalor.

Ultimately, the team hopes that better understanding how the brain processes audiovisual information will lead to better technology to help people who are deaf or hard of hearing.

About this sensory processing and auditory neuroscience research news

Author: Luke Auburn

Contact: Luke Auburn – University of Rochester

Image: The image is credited to Neuroscience News