Summary: Researchers found that humans and AI share a similar interplay between two learning systems: flexible, quick in-context learning and gradual incremental learning. Experiments showed that AI could develop in-context learning abilities after extensive incremental practice, much like humans do.

Both also displayed trade-offs between flexibility and retention, with harder tasks strengthening memory while easier tasks boosted adaptability. These findings could shape the design of AI systems that work more intuitively alongside human cognition.

Key Facts

- Shared Strategies: Humans and AI both use in-context and incremental learning in complementary ways.

- Meta-Learning Breakthrough: AI gained flexible in-context learning only after thousands of incremental training tasks.

- Trade-Offs: Like humans, AI trades off flexibility (quick rule learning) against retention (long-term memory updates).

Source: Brown University

New research found similarities in how humans and artificial intelligence integrate two types of learning, offering new insights about how people learn as well as how to develop more intuitive AI tools.

Led by Jake Russin, a postdoctoral research associate in computer science at Brown University, the study trained an AI system and found that its flexible and incremental learning modes interact much as working memory and long-term memory do in humans.

“These results help explain why a human looks like a rule-based learner in some circumstances and an incremental learner in others,” Russin said. “They also suggest something about what the newest AI systems have in common with the human brain.”

Russin holds a joint appointment in the laboratories of Michael Frank, a professor of cognitive and psychological sciences and director of the Center for Computational Brain Science at Brown’s Carney Institute for Brain Science, and Ellie Pavlick, an associate professor of computer science who leads the AI Research Institute on Interaction for AI Assistants at Brown.

The study was published in the Proceedings of the National Academy of Sciences.

Depending on the task, humans acquire new information in one of two ways. For some tasks, such as learning the rules of tic-tac-toe, “in-context” learning allows people to figure out the rules quickly after a few examples. In other instances, incremental learning builds on information to improve understanding over time — such as the slow, sustained practice involved in learning to play a song on the piano.

While researchers knew that humans and AI integrate both forms of learning, it wasn’t clear how the two learning types work together. Over the course of the research team’s ongoing collaboration, Russin — whose work bridges machine learning and computational neuroscience — developed a theory that the dynamic might be similar to the interplay of human working memory and long-term memory.

To test this theory, Russin used “meta-learning,” a type of training that helps AI systems learn about the act of learning itself, to tease out key properties of the two learning types. The experiments revealed that the AI system’s ability to perform in-context learning emerged only after it had meta-learned across many example tasks.

One experiment, adapted from a study in humans, tested for in-context learning by challenging the AI to recombine familiar concepts to handle new situations: if taught about a list of colors and a list of animals, could the AI correctly identify a combination of color and animal (e.g., a green giraffe) that it had not seen together previously?
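
The held-out-combination design can be sketched as a train/test split in which every color and every animal appears during training, but certain pairings (such as a green giraffe) are reserved for testing. This is a minimal illustration assuming hypothetical four-item lists; the study's actual stimuli, list sizes, and split procedure are not described in this article.

```python
import itertools
import random

# Hypothetical stimulus lists, for illustration only (not the study's stimuli).
COLORS = ["red", "green", "blue", "yellow"]
ANIMALS = ["lion", "giraffe", "zebra", "hippo"]


def compositional_split(colors, animals, n_holdout, seed=0):
    """Hold out n_holdout color-animal pairs for testing while making sure
    every individual color and animal still appears in the training set."""
    pairs = list(itertools.product(colors, animals))
    # Covering every color and animal needs at least max(len(colors), len(animals))
    # training pairs, which caps how many pairs can be held out.
    if n_holdout > len(pairs) - max(len(colors), len(animals)):
        raise ValueError("too many held-out pairs to keep every word in training")
    rng = random.Random(seed)
    while True:
        rng.shuffle(pairs)
        test, train = pairs[:n_holdout], pairs[n_holdout:]
        # Accept the split only if no color or animal is missing from training.
        if {c for c, _ in train} == set(colors) and {a for _, a in train} == set(animals):
            return train, test


train, test = compositional_split(COLORS, ANIMALS, n_holdout=4)
print(len(train), len(test))  # 12 4
for color, animal in test:
    print(f"novel at test time: {color} {animal}")
```

Every test item is built from words the learner has already seen, so succeeding on it requires recombining known parts rather than recalling a memorized pair.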

After the AI meta-learned by being challenged with 12,000 similar tasks, it gained the ability to correctly identify new combinations of colors and animals.
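
Why would practice across many tasks produce in-context learning? One way to see it: if each training episode assigns words to outputs arbitrarily, no fixed set of weights can memorize the answers, so the only reliable strategy is to infer the mapping from the examples given in that episode. The sketch below builds such episodes and checks that an idealized learner, which simply reads the mapping off the study examples, solves every novel query. The symbol vocabulary, episode format, and `solve_in_context` helper are hypothetical. The study trained an AI system across its tasks, which this sketch does not do, and the episode count of 12,000 only mirrors the figure quoted in the article.

```python
import itertools
import random

# Hypothetical stimuli and output symbols, for illustration only.
COLORS = ["red", "green", "blue", "yellow"]
ANIMALS = ["lion", "giraffe", "zebra", "hippo"]
SYMBOLS = list("ABCDEFGH")


def sample_episode(rng, n_study=6):
    """Build one episode: a fresh random word-to-symbol mapping, a few study
    examples (the 'context'), and one query pair absent from the study set."""
    words = COLORS + ANIMALS
    mapping = dict(zip(words, rng.sample(SYMBOLS, len(words))))
    pairs = list(itertools.product(COLORS, ANIMALS))
    while True:
        rng.shuffle(pairs)
        query, study = pairs[0], pairs[1:n_study + 1]
        # The query's color and animal must each appear somewhere in the study set.
        if any(c == query[0] for c, _ in study) and any(a == query[1] for _, a in study):
            break
    context = [((c, a), (mapping[c], mapping[a])) for c, a in study]
    target = (mapping[query[0]], mapping[query[1]])
    return context, query, target


def solve_in_context(context, query):
    """Idealized in-context learner: recover each word's symbol from the
    study examples, then compose them for the unseen query pair."""
    lookup = {}
    for (color, animal), (color_sym, animal_sym) in context:
        lookup[color] = color_sym
        lookup[animal] = animal_sym
    return lookup[query[0]], lookup[query[1]]


rng = random.Random(0)
episodes = [sample_episode(rng) for _ in range(12_000)]
correct = sum(solve_in_context(ctx, q) == tgt for ctx, q, tgt in episodes)
print(correct / len(episodes))  # 1.0
```

Because the symbols are reshuffled every episode, a learner that ignored the study examples could only guess on the query; the answer is recoverable solely from the context.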

The results suggest that for both humans and AI, quicker, flexible in-context learning arises after a certain amount of incremental learning has taken place.

“At the first board game, it takes you a while to figure out how to play,” Pavlick said. “By the time you learn your hundredth board game, you can pick up the rules of play quickly, even if you’ve never seen that particular game before.”

The team also found trade-offs, including one between learning retention and flexibility: as in humans, the harder it is for the AI to complete a task correctly, the more likely it is to remember how to perform it in the future.

According to Frank, who has studied this paradox in humans, this is because errors cue the brain to update information stored in long-term memory, whereas error-free actions learned in context increase flexibility but don’t engage long-term memory in the same way.
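
Frank's explanation matches a familiar principle of error-driven learning: the weight update is proportional to the prediction error, so if in-context inference already produces the right answer, little error is left to drive a lasting change. The sketch below uses a generic delta rule, not the study's model; `context_boost` is a hypothetical stand-in for whatever the in-context mechanism contributes to the output.

```python
def error_driven_update(weight, x, target, context_boost=0.0, lr=0.5):
    """One delta-rule step on a single stored weight.

    The prediction combines the stored weight ('in-weight' knowledge) with a
    hypothetical context_boost ('in-context' inference). Only the remaining
    error changes the stored weight.
    """
    prediction = weight * x + context_boost
    error = target - prediction
    new_weight = weight + lr * error * x
    return new_weight, error


# Easy case: in-context inference already gets the answer right.
easy_weight, easy_error = error_driven_update(0.0, 1.0, 2.0, context_boost=2.0)
# Hard case: in-context inference fails, leaving a large error.
hard_weight, hard_error = error_driven_update(0.0, 1.0, 2.0, context_boost=0.0)

print(easy_weight, easy_error)  # 0.0 0.0
print(hard_weight, hard_error)  # 1.0 2.0
```

Once the context is taken away, only the hard case has stored anything: its weight has moved toward the target, while the easy case's weight is still 0.0.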

For Frank, who specializes in building biologically inspired computational models to understand human learning and decision-making, the team’s work showed how analyzing strengths and weaknesses of different learning strategies in an artificial neural network can offer new insights about the human brain.

“Our results hold reliably across multiple tasks and bring together disparate aspects of human learning that neuroscientists hadn’t grouped together until now,” Frank said.

The work also suggests important considerations for developing intuitive and trustworthy AI tools, particularly in sensitive domains such as mental health.

“To have helpful and trustworthy AI assistants, human and AI cognition need to be aware of how each works and the extent that they are different and the same,” Pavlick said. “These findings are a great first step.”

Funding: The research was supported by the Office of Naval Research and the National Institute of General Medical Sciences Centers of Biomedical Research Excellence.

About this AI and learning research news

Author: Kevin Stacey

Contact: Kevin Stacey – Brown University

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Parallel trade-offs in human cognition and neural networks: The dynamic interplay between in-context and in-weight learning” by Jake Russin et al. PNAS

Abstract

Parallel trade-offs in human cognition and neural networks: The dynamic interplay between in-context and in-weight learning

Human learning embodies a striking duality: Sometimes, we can rapidly infer and compose logical rules, benefiting from structured curricula (e.g., in formal education), while other times, we rely on an incremental approach or trial-and-error, learning better from curricula that are randomly interleaved.

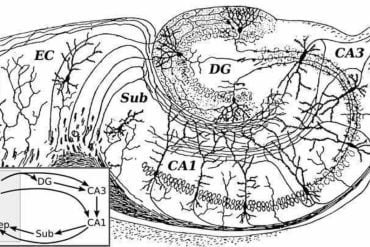

Influential psychological theories explain this seemingly conflicting behavioral evidence by positing two qualitatively different learning systems—one for rapid, rule-based inferences (e.g., in working memory) and another for slow, incremental adaptation (e.g., in long-term and procedural memory).

It remains unclear how to reconcile such theories with neural networks, which learn via incremental weight updates and are thus a natural model for the latter, but are not obviously compatible with the former.

However, recent evidence suggests that metalearning neural networks and large language models are capable of in-context learning (ICL)—the ability to flexibly infer the structure of a new task from a few examples.

In contrast to standard in-weight learning (IWL), which is analogous to synaptic change, ICL is more naturally linked to activation-based dynamics thought to underlie working memory in humans.

Here, we show that the interplay between ICL and IWL naturally ties together a broad range of learning phenomena observed in humans, including curriculum effects on category-learning tasks, compositionality, and a trade-off between flexibility and retention in brain and behavior.

Our work shows how emergent ICL can equip neural networks with fundamentally different learning properties that can coexist with their native IWL, thus offering an integrative perspective on dual-process theories of human cognition.
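
The abstract contrasts structured curricula with randomly interleaved ones. One common form of structure in category-learning experiments is blocking, where all trials of one category are shown before the next. A minimal sketch of the two trial orderings, assuming two hypothetical categories of four trials each (the study's actual tasks and trial counts are not given here):

```python
import random


def make_curriculum(trials_by_category, blocked, seed=0):
    """Return one trial sequence: blocked (category by category) or
    randomly interleaved across categories."""
    trials = [trial for category in trials_by_category for trial in category]
    if not blocked:
        random.Random(seed).shuffle(trials)
    return trials


def count_switches(trials):
    """Number of times consecutive trials come from different categories."""
    labels = [label for label, _ in trials]
    return sum(a != b for a, b in zip(labels, labels[1:]))


# Hypothetical stimuli: two categories ("A" and "B"), four trials each.
categories = [[("A", i) for i in range(4)], [("B", i) for i in range(4)]]
blocked = make_curriculum(categories, blocked=True)
interleaved = make_curriculum(categories, blocked=False)

print([label for label, _ in blocked])  # ['A', 'A', 'A', 'A', 'B', 'B', 'B', 'B']
print(count_switches(blocked))          # 1
print(count_switches(interleaved))
```

In the abstract's framing, rapid rule inference benefits from the structured (blocked) order, while incremental, trial-and-error learning does better with the interleaved one.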