Spoken sentences can be reconstructed from activity patterns on the surface of the human brain. “Brain-to-Text” combines knowledge from neuroscience, medicine and informatics.

Speech is produced in the human cerebral cortex. Brain waves associated with speech processes can be recorded directly with electrodes placed on the surface of the cortex. It has now been shown for the first time that it is possible to reconstruct basic units of speech, words, and complete sentences of continuous speech from these brain waves and to generate the corresponding text. Researchers at KIT and the Wadsworth Center, USA, present their “Brain-to-Text” system in the scientific journal Frontiers in Neuroscience.

“It has long been speculated whether humans may communicate with machines via brain activity alone,” says Tanja Schultz, who conducted the present study with her team at the Cognitive Systems Lab of KIT. “As a major step in this direction, our recent results indicate that both single units, in terms of speech sounds, and continuously spoken sentences can be recognized from brain activity.”

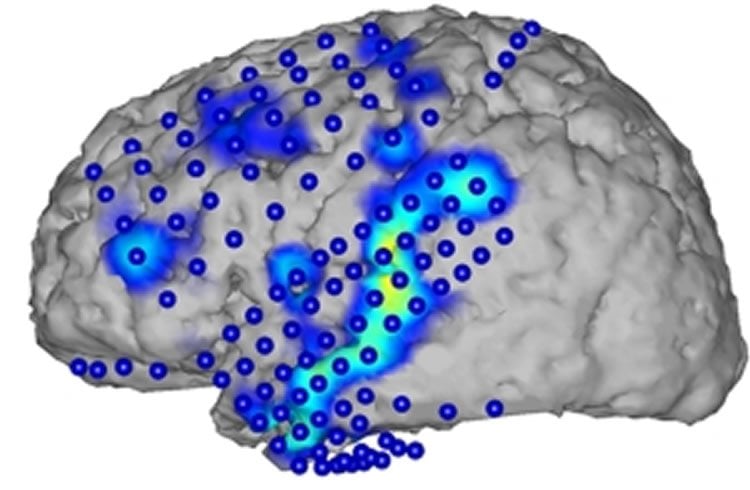

These results were obtained through an interdisciplinary collaboration of researchers in informatics, neuroscience, and medicine. In Karlsruhe, the methods for signal processing and automatic speech recognition were developed and applied. “In addition to decoding speech from brain activity, our models allow for a detailed analysis of the brain areas involved in speech processes and their interaction,” explain Christian Herff and Dominic Heger, who developed the Brain-to-Text system as part of their doctoral studies.

The present work is the first to decode continuously spoken speech and transform it into a textual representation. To do so, cortical information is combined with linguistic knowledge and machine learning algorithms to extract the most likely word sequence. Currently, Brain-to-Text is based on audible speech. However, the results are an important first step toward recognizing speech from thought alone.
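The idea of combining cortical evidence with linguistic knowledge to find “the most likely word sequence” can be illustrated with a toy decoder. This is a simplified sketch, not the authors' system (which, according to the paper, models individual phones): it assumes the speech has already been split into word-sized segments, that some classifier (hypothetical here) has given each candidate word a log-likelihood against the brain activity in each segment, and that a bigram language model supplies word-transition probabilities. A Viterbi search then keeps, for every word, the best-scoring path that ends in it. The function name `decode` and all scores are invented for illustration.

```python
import math


def decode(segment_scores, bigram_logprob, start="<s>"):
    """Viterbi search for the most likely word sequence (illustrative sketch).

    segment_scores: list of dicts, one per (assumed pre-segmented) word slot,
        mapping each candidate word to a hypothetical log P(brain activity | word).
    bigram_logprob: dict mapping (previous_word, word) to log P(word | previous_word).
    Returns (best word sequence, its combined log score).
    """
    # best[w] = (score of the best path ending in word w, that path)
    best = {start: (0.0, [])}
    for scores in segment_scores:
        new_best = {}
        for word, neural in scores.items():
            # Extend every surviving path by `word`; unseen bigrams get -inf.
            candidates = [
                (score + bigram_logprob.get((prev, word), -math.inf) + neural,
                 path + [word])
                for prev, (score, path) in best.items()
            ]
            new_best[word] = max(candidates, key=lambda c: c[0])
        best = new_best
    return max(best.values(), key=lambda c: c[0])[::-1]


# Toy example: the neural scores alone would favour "a hat",
# but the language model tips the decision toward "the cat".
segments = [
    {"the": -1.0, "a": -0.9},
    {"cat": -2.0, "hat": -1.5},
]
lm = {
    ("<s>", "the"): math.log(0.6), ("<s>", "a"): math.log(0.4),
    ("the", "cat"): math.log(0.7), ("the", "hat"): math.log(0.3),
    ("a", "cat"): math.log(0.5), ("a", "hat"): math.log(0.5),
}
words, score = decode(segments, lm)
print(words)  # ['the', 'cat']
```

Working in log space turns the product of neural and language-model probabilities into a sum, which avoids numerical underflow on long sentences.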

The brain activity was recorded in the USA from seven patients with epilepsy, who participated voluntarily in the study during their clinical treatment. As part of their neurological treatment, an electrode array had been placed on the surface of the cerebral cortex (electrocorticography, ECoG). While the patients read sample texts aloud, the ECoG signals were recorded with high temporal and spatial resolution. The researchers in Karlsruhe later analyzed the data to develop Brain-to-Text. In addition to advancing basic science and our understanding of the highly complex speech processes in the brain, Brain-to-Text might in the future become a building block for a means of speech communication for locked-in patients.

Source: Kosta Schinarakis – KIT

Image Credit: The image is credited to CSL/KIT

Original Research: Full open access research for “Brain-to-text: decoding spoken phrases from phone representations in the brain” by Christian Herff, Dominic Heger, Adriana de Pesters, Dominic Telaar, Peter Brunner, Gerwin Schalk and Tanja Schultz in Frontiers in Neuroscience. Published online June 12, 2015. doi:10.3389/fnins.2015.00217

Abstract

Brain-to-text: decoding spoken phrases from phone representations in the brain

It has long been speculated whether communication between humans and machines based on natural speech-related cortical activity is possible. Over the past decade, studies have suggested that it is feasible to recognize isolated aspects of speech from neural signals, such as auditory features, phones or one of a few isolated words. However, until now it remained an unsolved challenge to decode continuously spoken speech from the neural substrate associated with speech and language processing. Here, we show for the first time that continuously spoken speech can be decoded into the expressed words from intracranial electrocorticographic (ECoG) recordings. Specifically, we implemented a system, which we call Brain-To-Text, that models single phones, employs techniques from automatic speech recognition (ASR), and thereby transforms brain activity while speaking into the corresponding textual representation. Our results demonstrate that our system can achieve word error rates as low as 25% and phone error rates below 50%. Additionally, our approach contributes to the current understanding of the neural basis of continuous speech production by identifying those cortical regions that hold substantial information about individual phones. In conclusion, the Brain-To-Text system described in this paper represents an important step toward human-machine communication based on imagined speech.
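The word and phone error rates quoted above are standard ASR metrics: the minimum number of substitutions, deletions and insertions needed to turn the decoded sequence into the reference, divided by the number of tokens in the reference. A minimal sketch of that computation follows; the function names are our own, and the same code gives a phone error rate when the tokens are phones instead of words. A 25% word error rate corresponds, for example, to one wrong word in a four-word reference.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance between two token sequences."""
    # d[i][j] = edits needed to turn reference[:i] into hypothesis[:j]
    d = [[0] * (len(hypothesis) + 1) for _ in range(len(reference) + 1)]
    for i in range(len(reference) + 1):
        d[i][0] = i  # delete all i reference tokens
    for j in range(len(hypothesis) + 1):
        d[0][j] = j  # insert all j hypothesis tokens
    for i in range(1, len(reference) + 1):
        for j in range(1, len(hypothesis) + 1):
            substitution = 0 if reference[i - 1] == hypothesis[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,                 # deletion
                d[i][j - 1] + 1,                 # insertion
                d[i - 1][j - 1] + substitution,  # match or substitution
            )
    return d[len(reference)][len(hypothesis)]


def error_rate(reference, hypothesis):
    """Word (or phone) error rate: edit distance / reference length."""
    if not reference:
        raise ValueError("reference must contain at least one token")
    return edit_distance(reference, hypothesis) / len(reference)


ref = "the quick brown fox".split()
hyp = "the quick crown fox".split()
print(error_rate(ref, hyp))  # 0.25
```

Because insertions are counted, the error rate can exceed 100% when the decoder outputs many spurious tokens.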
