Summary: A team of researchers used a massive dance video dataset and advanced AI models to map how the human brain interprets dance, revealing striking differences between experts and nonexperts. By pairing fMRI recordings with AI-derived cross-modal features, they found that combined music-and-movement features predict activity in higher-order brain regions better than simple motion or sound cues do.

Expert dancers displayed more varied and complex neural response patterns, suggesting that expertise broadens — rather than narrows — perceptual richness. The findings highlight deep parallels between predictive AI models and human cognition, pointing to new ways to study artistic perception across the senses.

Key Facts:

- Cross-Modal Encoding Dominates: Combined music-and-movement features predicted neural activity far better than movement-only or sound-only cues.

- Expert Brains Respond Differently: Dance experts showed more diverse and finely differentiated brain patterns when viewing performances.

- AI Mirrors Human Prediction: The AI choreography model’s next-step predictions aligned closely with how brains integrate audiovisual information.

Source: University of Tokyo

Dance is a form of cultural expression that has endured throughout human history, channeling a seemingly innate response to sound and rhythm.

A team at the University of Tokyo and collaborators has shown that fMRI activity patterns in the brain differ with a viewer's level of dance expertise.

The findings grew out of recent advances in dance motion-capture datasets and generative AI (artificial intelligence) models, which made possible a cross-modal study of the art form's complexity.

Previous studies of dance have typically been limited to artificially controlled movement or music in isolation, or to coarse binary descriptors of categorized clips. By relating real-world performances, taken as a whole and across modalities, to local brain activity, the new study captured fine-grained, high-dimensional relationships in dance.

This research project, led by Professor Hiroshi Imamizu of the University of Tokyo, Associate Professor Yu Takagi of the Nagoya Institute of Technology and their team, builds upon quantitative encoding advances in AI-based naturalistic modeling to compare brain responses to stimuli.

“In our research we strived to understand how the human brain directs movement of the body. As an everyday life example, dance provided the perfect theme,” said Imamizu. “Our team had great passion for genres like street dance and ballet, and by collaborating with street dance experts, the research soon took a life of its own.”

According to the team, a major obstacle to date has been the sheer wealth of perceptual information that humans must process to identify and respond to the many stimuli of the real world.

“That’s where the release of the AIST Dance Video Database was a stroke of fortune for us. It has over 13,000 recordings covering 10 genres of street dance,” said Imamizu. “It also led to an AI model which generates choreography from music. It almost felt that our research was being pushed by this new era of technology itself.”

In describing the study, the researchers said one underlying problem they hope to solve is how the brain and AI correspond to each other. Can AI models represent the human mind? And conversely, can brain functions be used to grasp the inner workings of AI?

To answer this, the team recruited 14 participants of mixed dance backgrounds and monitored their brain responses while they viewed 1,163 dance clips of varied dancers and styles.

“By linking a choreographing AI to fMRI, or functional magnetic resonance imaging, a technique that can visualize active regions of the brain by recognizing small changes in blood flow, we could pinpoint where the brain binds music and movement,” said Takagi.

“Cross‑modal features consistently predicted activity in higher‑order association areas better than motion‑only or sound‑only features — evidence that integration of different sensory modalities such as sight and sound is central to how we experience dance.”

The findings also suggested that the model’s next-motion prediction architecture aligns well with human cognition, revealing parallels between how biological and artificial systems process and integrate audiovisual information.
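The feature-space comparison described above is the kind of analysis usually run as a voxelwise encoding model. The following is a minimal sketch of that idea on synthetic data, not the team's pipeline: the ridge regression, the feature dimensions, and the names `motion`, `audio` and `cross_modal` are all assumptions made for illustration. The simulated responses are deliberately built from motion-audio interactions, so the cross-modal advantage here is built in by construction and is not a replication of the study's result.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom

def score_feature_space(X, Y, n_train, alpha=1.0):
    """Fit on the first n_train clips; return the mean held-out correlation."""
    W = fit_ridge(X[:n_train], Y[:n_train], alpha)
    return float(voxelwise_correlation(Y[n_train:], X[n_train:] @ W).mean())

# Synthetic stand-ins (assumptions): 400 clips, 50 voxels, 8 features per modality.
rng = np.random.default_rng(0)
n_clips, n_voxels, n_train = 400, 50, 300
motion = rng.standard_normal((n_clips, 8))
audio = rng.standard_normal((n_clips, 8))
# Hypothetical cross-modal features: both modalities plus their interactions.
cross_modal = np.hstack([motion, audio, motion * audio])

# Simulated voxel responses driven by the cross-modal features, plus noise.
true_weights = rng.standard_normal((cross_modal.shape[1], n_voxels))
responses = cross_modal @ true_weights + 0.5 * rng.standard_normal((n_clips, n_voxels))

feature_spaces = {"motion_only": motion, "audio_only": audio, "cross_modal": cross_modal}
scores = {name: score_feature_space(X, responses, n_train)
          for name, X in feature_spaces.items()}
for name, score in scores.items():
    print(f"{name}: mean held-out correlation = {score:.2f}")
```

In a real analysis the features would come from a trained model and the responses from fMRI recordings, with the regularization strength chosen by cross-validation rather than fixed.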

Furthermore, to identify how dance features mapped to brain responses and emotional experiences, the team drew on input from expert dancers to create a list of concepts with multiple rating scales.

Ratings collected in an online survey were run through a brain-activity simulator the team had developed. The results showed that different impressions correspond to distinct, distributed neural patterns, and that aesthetic and emotional responses could not be reduced to a single dimension.
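One way to picture such a simulator is as a fitted encoding model applied to clips grouped by how viewers rated them. The sketch below is a loose illustration under stated assumptions, not the team's tool: the weights are random placeholders for a fitted model, the ratings are randomly generated, and the impression labels `dynamic` and `elegant` are hypothetical, since the article does not name the concepts used.

```python
import numpy as np

rng = np.random.default_rng(1)
n_clips, n_features, n_voxels = 200, 12, 30

# Placeholder for fitted encoding weights (features -> voxels); random here.
weights = rng.standard_normal((n_features, n_voxels))
# Placeholder clip features, e.g. taken from a cross-modal model.
features = rng.standard_normal((n_clips, n_features))

# Hypothetical survey ratings per clip on two impression scales (1 to 7).
ratings = {
    "dynamic": rng.integers(1, 8, n_clips),
    "elegant": rng.integers(1, 8, n_clips),
}

def simulate_pattern(features, weights, rating, threshold=5):
    """Mean predicted voxel pattern over clips rated at or above threshold."""
    selected = features[rating >= threshold]
    return (selected @ weights).mean(axis=0)

# One simulated whole-pattern response per impression.
patterns = {name: simulate_pattern(features, weights, r) for name, r in ratings.items()}

# Compare impressions by correlating their simulated patterns across voxels.
similarity = np.corrcoef(patterns["dynamic"], patterns["elegant"])[0, 1]
print(f"pattern similarity between impressions: {similarity:.2f}")
```

Comparing whole patterns across voxels, instead of collapsing each impression to one number, reflects the article's point that the responses were distributed and not reducible to a single scale.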

“Surprisingly, compared to nonexpert audiences, our brain-activity simulator was able to more precisely predict responses in experts. Even more interesting was the fact that while nonexperts exhibited individual differences in response patterns, the videos elicited a more diverse number of patterns in experts,” said Imamizu.

“In other words, the results suggest that brain responses diverged rather than converged with expertise. This has very interesting implications for understanding the relation of experience and diversity of expressions in art.

“We believe that the freedom demonstrated to connect tightly controlled research methods with large, diverse real-world datasets opens up a new dimension of research possibilities.”

For the team's impassioned members, the results brought things full circle.

“We would love nothing more than to see our developed brain-activity simulator be used as a tool to create new dance styles which move people. We very much wish to explore applications to other forms of art also,” said Imamizu.

Key Questions Answered:

A: Experts show more diverse and finely tuned neural patterns when viewing dance, reflecting richer perceptual and emotional representations.

A: Higher-order association areas integrate auditory and visual cues, outperforming motion-only or music-only processing.

A: AI-generated choreography linked with fMRI decoding exposes how prediction-based models parallel human audiovisual integration.

Editorial Notes:

- This article was edited by a Neuroscience News editor.

- Journal paper reviewed in full.

- Additional context added by our staff.

About this AI, neuroscience, and dance research news

Author: Rohan Mehra

Source: University of Tokyo

Contact: Rohan Mehra – University of Tokyo

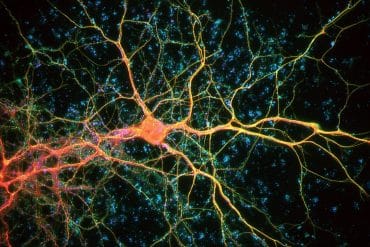

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Cross-modal deep generative models reveal the cortical representation of dancing” by Hiroshi Imamizu et al. Nature Communications

Abstract

Cross-modal deep generative models reveal the cortical representation of dancing

Dance is an ancient, holistic art form practiced worldwide throughout human history.

Although it offers a window into cognition, emotion, and cross‑modal processing, fine‑grained quantitative accounts of how its diverse information is represented in the brain have rarely been performed.

Here, we relate features from a cross‑modal deep generative model of dance to functional magnetic resonance imaging responses while participants watched naturalistic dance clips.

We demonstrate that cross-modal features explain dance‑evoked brain activity better than low‑level motion and audio features.

Using encoding models as in silico simulators, we quantify how dances that elicit different emotions yield distinct neural patterns.

While expert dancers’ brain activity is more broadly explained by dance features than that of novices, experts exhibit greater individual variability.

Our approach links cross-modal representations from generative models to naturalistic neuroimaging, clarifying how motion, music, and expertise jointly shape aesthetic and emotional experience.