Summary: New research reveals how the brain merges visual and auditory information to make quicker, more accurate decisions. Using EEG, scientists found that auditory and visual decision processes start independently but ultimately combine in the motor system, enabling faster reaction times.

Computational models showed that this integration—not a simple race between senses—best explains behavior, especially when one signal is slightly delayed. The findings offer a concrete model of multisensory decision-making and may inform future clinical approaches to sensory or cognitive impairments.

Key Facts:

- Parallel Then Merge: Auditory and visual decision processes run separately before combining in the motor system.

- Integration Advantage: The integrated model explained results better than a “race” model, especially with sensory delays.

- Clinical Relevance: Understanding multisensory decision pathways can aid in designing treatments for sensory processing disorders.

Source: University of Rochester

It has long been understood that receiving input from two senses at once, such as sight and hearing, can produce better responses than receiving input from a single sense alone.

For example, a prey animal that gets both visual and auditory cues that it is about to be attacked by a snake in the grass has a better chance of survival.

Precisely how multiple senses are integrated or work together in the brain has been an area of fascination for neuroscientists for decades.

New research from an international collaboration between scientists at the University of Rochester and a research team in Dublin, Ireland, has revealed key new insights.

“Just like sensory integration, sometimes you need human integration,” said John Foxe, PhD, director of the Del Monte Institute for Neuroscience at the University of Rochester and co-author of the study that shows how multisensory integration happens in the brain.

The findings were published today in Nature Human Behaviour.

“This research was built on decades of study and friendship,” Foxe said. “Sometimes ideas need time to percolate. There is a pace to science, and this research is the perfect example of that.”

Simon Kelly, PhD, professor at University College Dublin, led the study. In 2012, his lab discovered a way to measure information for a decision being gathered over time in the brain using an electroencephalographic (EEG) signal. This step followed years of research that set the stage for this work.

“We were uniquely positioned to tackle this,” Kelly said.

“The more we know about the fundamental brain architecture underlying such elementary behaviors, the better we can interpret differences in the behaviors and signals associated with such tasks in clinical groups and design mechanistically informed diagnostics and treatments.”

Research participants were asked to watch a simple dot animation while listening to a series of tones and press a button when they noticed a change in the dots, the tones, or both.

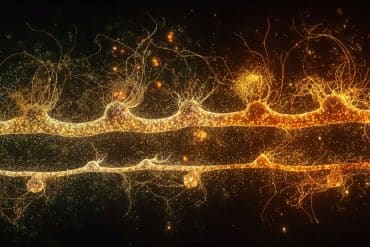

Using EEG, the scientists were able to infer that when changes happened in both the dots and tones, auditory and visual decision processes unfolded in parallel but came together in the motor system. This allowed participants to speed up their reaction times.

“We found that the EEG accumulation signal reached very different amplitudes when auditory versus visual targets were detected, indicating that there are distinct auditory and visual accumulators,” Kelly said.

Using computational models, the researchers then tried to explain the decision signal patterns as well as reaction times. In one model, the auditory and visual accumulators race against each other to trigger a motor reaction, while the other model integrates the auditory and visual accumulators and then sends the information to the motor system.

Both models worked until researchers added a slight delay to either the audio or visual signals. Then the integration model did a much better job at explaining all the data, suggesting that during a multisensory (audiovisual) experience, the decision signals may start on their own sensory-specific tracks but then integrate when sending the information to areas of the brain that generate movement.

“The research provides a concrete model of the neural architecture through which multisensory decisions are made,” Kelly said. “It clarifies that distinct decision processes gather information from different modalities, but their outputs converge onto a single motor process where they combine to meet a single criterion for action.”
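The contrast between the two models can be illustrated with a toy simulation. The sketch below is not the researchers' model or code: the function names, drift rates, noise level, threshold, and delay are all hypothetical values chosen for illustration. It assumes two noisy accumulators, one auditory and one visual, that either race to a threshold separately or have their outputs summed before being compared with a single threshold.

```python
import random


def simulate_trial(rng, model, drift_a=0.02, drift_v=0.02, noise=0.1,
                   threshold=1.0, delay_v=0, max_steps=5000):
    """Simulate one audiovisual trial and return the step at which a response fires.

    All parameter values are illustrative assumptions, not fitted to any data.
    delay_v is the number of steps by which the visual signal lags the auditory one.
    """
    if model not in ("race", "integration"):
        raise ValueError(f"unknown model: {model!r}")
    acc_a = 0.0  # auditory accumulator
    acc_v = 0.0  # visual accumulator
    for step in range(1, max_steps + 1):
        acc_a += drift_a + rng.gauss(0.0, noise)
        if step > delay_v:  # visual evidence only arrives after the delay
            acc_v += drift_v + rng.gauss(0.0, noise)
        if model == "race":
            # Whichever accumulator reaches the threshold first triggers the response.
            crossed = acc_a >= threshold or acc_v >= threshold
        else:
            # The two outputs are combined and compared with a single threshold.
            crossed = acc_a + acc_v >= threshold
        if crossed:
            return step
    return max_steps  # no crossing within the simulated window


def mean_rt(model, n_trials=2000, seed=0, **kwargs):
    """Average response step over many simulated trials."""
    rng = random.Random(seed)
    total = sum(simulate_trial(rng, model, **kwargs) for _ in range(n_trials))
    return total / n_trials


if __name__ == "__main__":
    for delay in (0, 20):
        for model in ("race", "integration"):
            print(model, "delay", delay, "mean RT", mean_rt(model, delay_v=delay))
```

In this toy version the combination is a plain sum, whereas the study reports that the two modalities combine sub-additively, so the sketch shows only the structural difference between racing and merging, not the fitted behavior.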

Team Science Takes a Village

In the 2000s, Foxe’s Cognitive Neurophysiology Lab, which was then located at City College in New York City, brought in a multitude of young researchers, including Kelly and Manuel Gomez-Ramirez, PhD, assistant professor of Brain and Cognitive Sciences at the University of Rochester and a co-author of the research out today.

It was there that Kelly spent his time as a postdoc and was first introduced to multisensory integration and the tools and metrics used to assess audiovisual detection. Gomez-Ramirez, who was a PhD student in the lab at the time, designed an experiment to understand the integration of auditory, visual, and tactile inputs.

“The three of us have been friends across the years with very different backgrounds,” Foxe said.

“But we are bound together by a common interest in answering fundamental questions about the brain. When we get together, we talk about these things, we run ideas by each other, and then six months later, something will come to you. This is a really good example that sometimes science operates on a longer temporal horizon.”

Other authors include the first author, John Egan, University College Dublin, and Redmond O’Connell, PhD, of Trinity College Dublin.

Funding: This research was supported by the Science Foundation Ireland, Wellcome Trust, European Research Council Consolidator, Eunice Kennedy Shriver National Institute of Child Health and Human Development (UR-IDDRC), and the National Institute of Mental Health.

About this visual and auditory neuroscience research news

Author: Kelsie Smith Hayduk

Contact: Kelsie Smith Hayduk – University of Rochester

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“Distinct audio and visual accumulators co-activate motor preparation for multisensory detection” by Simon Kelly et al. Nature Human Behaviour

Abstract

Distinct audio and visual accumulators co-activate motor preparation for multisensory detection

Detecting targets in multisensory environments is an elemental brain function, but it is not yet known whether information from different sensory modalities is accumulated by distinct processes, and, if so, whether the processes are subject to separate decision criteria.

Here we address this in two experiments (n = 22, n = 21) using a paradigm design that enables neural evidence accumulation to be traced through a centro-parietal positivity and modelled alongside response time distributions.

Through analysis of both redundant (respond-to-either-modality) and conjunctive (respond-only-to-both) audio-visual detection data, joint neural–behavioural modelling, and a follow-up onset-asynchrony experiment, we found that auditory and visual evidence is accumulated in distinct processes during multisensory detection, and cumulative evidence in the two modalities sub-additively co-activates a single, thresholded motor process during redundant detection.

These findings answer long-standing questions about information integration and accumulation in multisensory conditions.