Summary: Researchers have developed a new prosthetic hand with the ability to ‘see’ objects. They believe their invention can allow the wearer to automatically reach for objects.

Source: Newcastle University.

A new generation of prosthetic limbs that will allow the wearer to reach for objects automatically, without thinking, just like a real hand, is to be trialled for the first time.

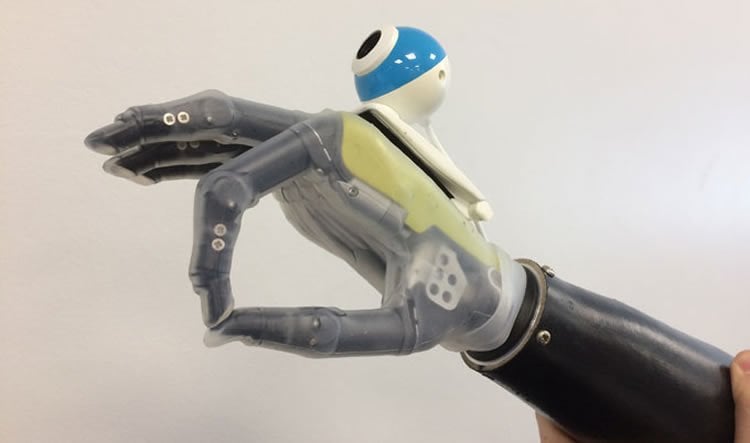

Led by biomedical engineers at Newcastle University and funded by the Engineering and Physical Sciences Research Council (EPSRC), the bionic hand is fitted with a camera which instantaneously takes a picture of the object in front of it, assesses its shape and size and triggers a series of movements in the hand.

Bypassing the usual processes which require the user to see the object, physically stimulate the muscles in the arm and trigger a movement in the prosthetic limb, the hand ‘sees’ and reacts in one fluid movement.

A small number of amputees have already trialled the new technology and now the Newcastle University team are working with experts at Newcastle upon Tyne Hospitals NHS Foundation Trust to offer the ‘hands with eyes’ to patients at Newcastle’s Freeman Hospital.

A hand which can respond automatically

The team published their findings today in the Journal of Neural Engineering. Co-author Dr Kianoush Nazarpour, a Senior Lecturer in Biomedical Engineering at Newcastle University, explains:

“Prosthetic limbs have changed very little in the past 100 years. The design is much better and the materials are lighter and more durable, but they still work in the same way.

“Using computer vision, we have developed a bionic hand which can respond automatically – in fact, just like a real hand, the user can reach out and pick up a cup or a biscuit with nothing more than a quick glance in the right direction.

“Responsiveness has been one of the main barriers to artificial limbs. For many amputees the reference point is their healthy arm or leg so prosthetics seem slow and cumbersome in comparison.

“Now, for the first time in a century, we have developed an ‘intuitive’ hand that can react without thinking.”

Artificial vision for artificial hands

Recent statistics show that in the UK there are around 600 new upper-limb amputees every year, half of whom are aged 15 to 54. In the US there are 500,000 upper-limb amputees a year.

Current prosthetic hands are controlled via myoelectric signals, that is, the electrical activity of the muscles recorded from the skin surface of the stump.

Controlling them, says Dr Nazarpour, takes practice, concentration and, crucially, time.
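The article does not describe the control scheme in detail, but the abstract mentions a two-channel myoelectric hand. A minimal sketch of one common two-site approach, assuming one electrode over the forearm flexors signals "close" and one over the extensors signals "open": each channel is rectified and smoothed into an amplitude envelope, then compared with a threshold. The window length, threshold and function names are hypothetical and are not taken from the study or from any commercial hand.

```python
from collections import deque
from typing import List, Sequence


def envelope(samples: Sequence[float], window: int = 4) -> List[float]:
    """Moving average of the rectified (absolute-value) EMG signal."""
    buf: deque = deque(maxlen=window)
    out: List[float] = []
    for s in samples:
        buf.append(abs(s))               # full-wave rectification
        out.append(sum(buf) / len(buf))  # smooth over the last `window` samples
    return out


def hand_command(flexor: Sequence[float], extensor: Sequence[float],
                 threshold: float = 0.5) -> List[str]:
    """Map two EMG channels to one 'close', 'open' or 'hold' decision per sample."""
    commands = []
    for f, e in zip(envelope(flexor), envelope(extensor)):
        if f > threshold and f >= e:
            commands.append("close")     # flexor contraction dominates
        elif e > threshold:
            commands.append("open")      # extensor contraction dominates
        else:
            commands.append("hold")      # neither channel above threshold
    return commands
```

In a scheme like this every grasp needs a deliberate muscle contraction from the user, which hints at why such control takes practice and concentration.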

Lead author Ghazal Ghazaei trained a neural network, a form of artificial intelligence, by showing the computer numerous images of objects and teaching it to recognise the ‘grip’ needed for different objects.

“We would show the computer a picture of, for example, a stick,” explains Miss Ghazaei, who carried out the work as part of her PhD in the School of Electrical and Electronic Engineering at Newcastle University. “But not just one picture, many images of the same stick from different angles and orientations, even in different light and against different backgrounds and eventually the computer learns what grasp it needs to pick that stick up.

“So the computer isn’t just matching an image, it’s learning to recognise objects and group them according to the grasp type the hand has to perform to successfully pick it up.

“It is this which enables it to accurately assess and pick up an object which it has never seen before – a huge step forward in the development of bionic limbs.”
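Showing the network the same object at different orientations, in different light and against different backgrounds is closely related to what machine-learning practitioners call data augmentation. The sketch below generates such variants synthetically from a single image. It assumes small grayscale images stored as lists of rows with pixel values in [0, 1] and a plain background of value 0.0. The abstract indicates the study used many real views of each object, so this illustrates the idea rather than reproducing the study's preprocessing.

```python
from typing import List, Sequence

Image = List[List[float]]


def rotate90(img: Image) -> Image:
    """Rotate a grid image 90 degrees clockwise (a change of orientation)."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel intensities (a change of lighting), clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]


def replace_background(img: Image, new_bg: float, old_bg: float = 0.0) -> Image:
    """Swap plain-background pixels for a different background value."""
    return [[new_bg if p == old_bg else p for p in row] for row in img]


def augment(img: Image, factors: Sequence[float] = (0.7, 1.0, 1.3),
            backgrounds: Sequence[float] = (0.0, 0.5)) -> List[Image]:
    """Return every combination of 4 rotations, backgrounds and brightness factors."""
    variants = []
    rotated = img
    for _ in range(4):
        for bg in backgrounds:
            with_bg = replace_background(rotated, bg)
            for f in factors:
                variants.append(adjust_brightness(with_bg, f))
        rotated = rotate90(rotated)
    return variants
```

Every variant keeps the original image's grasp label, so the network sees one grasp class under many different appearances.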

Grouping objects by size, shape and orientation, according to the type of grasp that would be needed to pick them up, the team programmed the hand to perform four different ‘grasps’: palm wrist neutral (such as when you pick up a cup); palm wrist pronated (such as picking up the TV remote); tripod (thumb and two fingers) and pinch (thumb and first finger).
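The key design choice, predicting a grasp class rather than an object's identity, can be illustrated with a toy convolutional network whose output layer has one unit per grasp. This is an untrained forward pass in plain Python under hypothetical assumptions: grayscale input, a handful of 3×3 filters, ReLU, global average pooling and a linear layer with hand-picked weights. The network in the study is far larger and its weights were learned from images.

```python
import math
from typing import List, Sequence, Tuple

Grid = List[List[float]]
GRASPS = ("palm wrist neutral", "palm wrist pronated", "tripod", "pinch")


def conv2d(img: Grid, kernel: Grid) -> Grid:
    """'Valid' 2-D convolution (cross-correlation, as in most CNN libraries)."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [[sum(img[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(w - k + 1)]
            for i in range(h - k + 1)]


def relu(fmap: Grid) -> Grid:
    return [[max(0.0, v) for v in row] for row in fmap]


def global_avg_pool(fmap: Grid) -> float:
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)                       # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_grasp(img: Grid, kernels: Sequence[Grid],
                   weights: Sequence[Sequence[float]],
                   biases: Sequence[float]) -> Tuple[str, List[float]]:
    """Return (grasp name, class probabilities) for one grayscale image."""
    features = [global_avg_pool(relu(conv2d(img, k))) for k in kernels]
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    return GRASPS[probs.index(max(probs))], probs
```

With a vertical-edge filter and weights that route that feature to the tripod unit, an image of a vertical edge is assigned the tripod grasp; in a trained network such mappings are learned from labelled images rather than set by hand.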


Using a 99p camera fitted to the prosthesis, the system ‘sees’ an object, picks the most appropriate grasp and sends a signal to the hand, all within a matter of milliseconds and ten times faster than any other limb currently on the market.
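The see-decide-act sequence in this paragraph can be sketched as a single control cycle. All three callables are hypothetical placeholders for a camera driver, a trained grasp classifier and the prosthesis interface; the sketch only measures how long one cycle takes on whatever hardware runs it and makes no claim about the study's reported speed.

```python
import time
from typing import Callable, List, Tuple

Frame = List[List[float]]


def grasp_cycle(capture_frame: Callable[[], Frame],
                classify: Callable[[Frame], str],
                send_grasp: Callable[[str], None]) -> Tuple[str, float]:
    """Run one see-decide-act cycle; return the grasp and elapsed milliseconds."""
    start = time.perf_counter()
    frame = capture_frame()   # 1. take a picture of the object in view
    grasp = classify(frame)   # 2. choose the most appropriate grasp
    send_grasp(grasp)         # 3. signal the hand to perform that grasp
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return grasp, elapsed_ms
```

Passing the stages in as functions keeps the timing logic independent of any particular camera or hand, so a stub classifier can be swapped for a real model without changing the cycle.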

“One way would have been to create a photo database of every single object, but clearly that would be a massive task and you would literally need every make of pen and toothbrush and every shape of cup; the list is endless,” says Dr Nazarpour.

“The beauty of this system is that it’s much more flexible and the hand is able to pick up novel objects – which is crucial since in everyday life people effortlessly pick up a variety of objects that they have never seen before.”
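Whether a system truly handles novel objects depends on how it is evaluated: if different views of one object appear in both the training and test sets, the test mostly measures recognition of seen objects. A minimal sketch of holding out whole objects, assuming each image record is a dictionary with a hypothetical `object_id` key; the article does not describe the study's exact split.

```python
import random
from typing import Dict, List, Tuple


def split_by_object(records: List[Dict], test_fraction: float = 0.2,
                    seed: int = 0) -> Tuple[List[Dict], List[Dict]]:
    """Hold out whole objects so every test image shows an unseen object."""
    object_ids = sorted({r["object_id"] for r in records})
    rng = random.Random(seed)            # seeded for a reproducible split
    rng.shuffle(object_ids)
    n_test = max(1, round(len(object_ids) * test_fraction))
    test_ids = set(object_ids[:n_test])
    train = [r for r in records if r["object_id"] not in test_ids]
    test = [r for r in records if r["object_id"] in test_ids]
    return train, test
```

Splitting by object rather than by image guarantees that no view of a test object was ever shown during training.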

First step towards a fully connected bionic hand

The work is part of a larger research project to develop a bionic hand that can sense pressure and temperature and transmit the information back to the brain.

The project, led by Newcastle University and involving experts from the universities of Leeds, Essex, Keele and Southampton and from Imperial College London, aims to develop novel electronic devices that connect to the neural networks of the forearm to allow two-way communication with the brain.


In a design reminiscent of Luke Skywalker’s artificial hand, the electrodes in the bionic limb would wrap around the nerve endings in the arm. This would mean that, for the first time, the brain could communicate directly with the prosthesis.

The ‘hand that sees’, explains Dr Nazarpour, is an interim solution that will bridge the gap between current designs and the future.

“It’s a stepping stone towards our ultimate goal,” he says. “But importantly, it’s cheap and it can be implemented soon because it doesn’t require new prosthetics – we can just adapt the ones we have.”

Anne Ewing, Advanced Occupational Therapist at Newcastle upon Tyne Hospitals NHS Foundation Trust, has been working with Dr Nazarpour and his team.

“I work with upper limb amputee patients which is extremely rewarding, varied and at times challenging,” she said.

“We always strive to put the patient at the heart of everything we do and so make sure that any interventions are client-centred, to ensure patients’ individual goals are met either with a prosthesis or with an alternative method of carrying out a task.

“This project in collaboration with Newcastle University has provided an exciting opportunity to help shape the future of upper limb prosthetics, working towards achieving patients’ prosthetic expectations and it is wonderful to have been involved.”

Case Study – Doug McIntosh, 56

“For me it was literally a case of life or limb,” says Doug McIntosh, who lost his right arm in 1997 through cancer.

“I had developed a rare form of cancer called epithelioid sarcoma, which develops in the deep tissue under the skin, and the doctors had no choice but to amputate the limb to save my life.

“Losing an arm and battling cancer with three young children was life changing. I left my job as a life support supervisor in the diving industry and spent a year fund-raising for cancer charities.

“It was this and my family that motivated me and got me through the hardest times.”

Since then, Doug has gone on to be an inspiration to amputees around the world. He became the first amputee to cycle from John O’Groats to Land’s End in 100 hours and to cycle around the coastline of Britain. He has also run three London Marathons, ridden The Dallaglio Flintoff Cycle Slam in 2012 and 2014, and in 2014 cycled with the British Lions Rugby Team to Murrayfield Rugby Stadium for the “Walking With The Wounded” charity. He is currently preparing to take on Mont Ventoux this September, completing three cycle climbs in one day for Cancer Research UK and Maggie’s Cancer Centres.

Involved in the early trials of the first myoelectric prosthetic limbs, Doug has been working with the Newcastle team to trial the new hand that sees.

“The problem is there’s nothing yet that really comes close to feeling like the real thing,” explains the father of three, who lives in Westhill, Aberdeen, with his wife of 32 years, Diane.

“Some of the prosthetics look very realistic but they feel slow and clumsy when you have a working hand to compare them to.

“In the end I found it easier just to do without and learn to adapt. When I do use a prosthesis I use a split hook which doesn’t look pretty but does the job.”

But he says the new, responsive hand being developed in Newcastle is a ‘huge leap forward’.

“This offers for the first time a real alternative for upper limb amputees,” he says.

“For me, one of the ways of dealing with the loss of my hand was to be very open about it and answer people’s questions. But not everyone wants that and so to have the option of a hand that not only looks realistic but also works like a real hand would be an amazing breakthrough and transform the recovery time – both physically and mentally – for many amputees.”

Source: Lanisha Butterfield – Newcastle University

Image Source: NeuroscienceNews.com image is adapted from the Newcastle University news release.

Video Source: The videos are credited to Newcastle University.

Original Research: Full open access research for “Deep learning-based artificial vision for grasp classification in myoelectric hands” by Ghazal Ghazaei, Ali Alameer, Patrick Degenaar, Graham Morgan and Kianoush Nazarpour in Journal of Neural Engineering. Published online May 3 2017 doi:10.1088/1741-2552/aa6802

MLA: Newcastle University. “Hand That Sees Offers New Hope For Amputees.” NeuroscienceNews. NeuroscienceNews, 5 May 2017. <https://neurosciencenews.com/seeing-prosthetic-arm-6599/>.

APA: Newcastle University (2017, May 5). Hand That Sees Offers New Hope For Amputees. NeuroscienceNews. Retrieved May 5, 2017 from https://neurosciencenews.com/seeing-prosthetic-arm-6599/

Chicago: Newcastle University. “Hand That Sees Offers New Hope For Amputees.” https://neurosciencenews.com/seeing-prosthetic-arm-6599/ (accessed May 5, 2017).

Abstract

Deep learning-based artificial vision for grasp classification in myoelectric hands

Objective. Computer vision-based assistive technology solutions can revolutionise the quality of care for people with sensorimotor disorders. The goal of this work was to enable trans-radial amputees to use a simple, yet efficient, computer vision system to grasp and move common household objects with a two-channel myoelectric prosthetic hand.

Approach. We developed a deep learning-based artificial vision system to augment the grasp functionality of a commercial prosthesis. Our main conceptual novelty is that we classify objects with regard to the grasp pattern without explicitly identifying them or measuring their dimensions. A convolutional neural network (CNN) structure was trained with images of over 500 graspable objects. For each object, 72 images, at $5^{\circ}$ intervals, were available. Objects were categorised into four grasp classes, namely: pinch, tripod, palmar wrist neutral and palmar wrist pronated. The CNN setting was first tuned and tested offline and then in real time with objects or object views that were not included in the training set.
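The dataset figures in the Approach paragraph fit together as follows: 72 views at $5^{\circ}$ spacing cover a full $360^{\circ}$ turn, so more than 500 objects corresponds to more than 36,000 available images, before any are held out for testing.

```latex
\frac{360^{\circ}}{5^{\circ}} = 72 \ \text{views per object}, \qquad
N_{\text{images}} > 500 \times 72 = 36\,000
```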

Main results. The classification accuracy in the offline tests reached $85\%$ for the seen and $75\%$ for the novel objects, reflecting the generalisability of grasp classification. We then implemented the proposed framework in real time on a standard laptop computer and achieved an overall score of $84\%$ in classifying a set of novel as well as seen but randomly rotated objects. Finally, the system was tested with two trans-radial amputee volunteers controlling an i-limb Ultra™ prosthetic hand and a motion control™ prosthetic wrist, augmented with a webcam. After training, subjects successfully picked up and moved the target objects with an overall success of up to $88\%$. In addition, we show that with training, subjects’ performance improved in terms of time required to accomplish a block of 24 trials despite a decreasing level of visual feedback.
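The offline results are accuracies reported separately for seen and novel objects. A minimal sketch of that bookkeeping, assuming each prediction is stored as a hypothetical `(true_grasp, predicted_grasp, is_novel)` triple; any records passed to it in an example are made up and are not the study's data.

```python
from typing import Dict, Iterable, Tuple


def accuracy_by_novelty(results: Iterable[Tuple[str, str, bool]]) -> Dict[str, float]:
    """Fraction of correct grasp predictions, reported separately for seen and novel objects."""
    counts = {"seen": [0, 0], "novel": [0, 0]}  # [correct, total] per group
    for true_grasp, predicted, is_novel in results:
        bucket = counts["novel" if is_novel else "seen"]
        bucket[0] += int(true_grasp == predicted)
        bucket[1] += 1
    # Groups with no predictions are omitted rather than reported as 0.
    return {k: c / t for k, (c, t) in counts.items() if t}
```

For example, two seen-object predictions with one correct and four novel-object predictions with three correct give accuracies of 0.5 and 0.75 respectively.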

Significance. The proposed design constitutes a substantial conceptual improvement for the control of multi-functional prosthetic hands. We show for the first time that deep learning-based computer vision systems can considerably enhance the grip functionality of myoelectric hands.
