Difficult grammar affects music experience.

Reading and listening to music at the same time affects how you hear the music. Language scientists and neuroscientists from Radboud University and the Max Planck Institute for Psycholinguistics published this finding in an article in Royal Society Open Science on February 3.

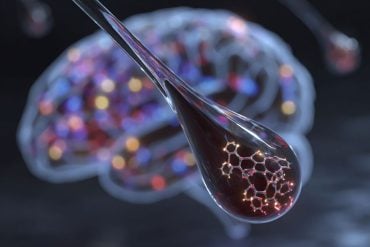

“The neural pathways for language and music share a crossroads,” says lead author Richard Kunert. “This has been shown in previous research, but these studies focused on the effect of simultaneous reading and listening on language processing. Until now, the effect of this multitasking on the neural processing of music has been predicted only in theory.”

Kunert therefore asked his subjects to read several easy and difficult phrases while they listened to a short piece of music, which he had composed himself. Afterwards, he asked the subjects to judge the closure, i.e. the feeling of completeness, of a chord sequence: did it stop before the end, or had they heard the entire sequence from beginning to end?

The experiment showed that the subjects judged the music to be less complete when they read grammatically difficult sentences than when they read simple ones. The brain area where music and language intersect therefore appears to be involved in processing grammar. “Previously, researchers thought that when you read and listen at the same time, you do not have enough attention to do both tasks well. With music and language, it is not about general attention, but about activity in the area of the brain that is shared by music and language,” explains Kunert.

Language and music appear to be fundamentally more alike than you might think. A word in a sentence derives its meaning from the context. The same applies to a tone in a chord sequence or a piece of music. Language and music rely on the same brain region to create order in both processes: arranging words in a sentence and arranging tones in a chord sequence. Reading and listening at the same time overloads the capacity of this brain region, known as Broca’s area, which is located somewhere under your left temple.

Richard Kunert is a PhD student at The Max Planck Institute for Psycholinguistics. He talks about the fact that the human brain is the only structure in the world which can understand music and language and what clues that gives for understanding the brain.

Previously, researchers demonstrated that children with musical training were better at language than children who did not learn to play an instrument. The results of Kunert and colleagues suggest that the direction of this positive effect probably does not matter: musical training enhances language skills, and language training probably enhances the neural processing of music in the same way. But engaging in language and music at the same time remains difficult for everyone, whether you are a professional guitar player or have no musical talent at all.

Funding: The study was funded by Presidium of RAS.

Source: Radboud University

Image Source: The image is adapted from the Radboud University press release

Video Source: The video is available at the Radboud Universiteit Nijmegen YouTube page

Original Research: Full open access research for “Language influences music harmony perception: effects of shared syntactic integration resources beyond attention” by Richard Kunert, Roel M. Willems, and Peter Hagoort in Royal Society Open Science. Published online February 3, 2016. doi:10.1098/rsos.150685

Abstract

Language influences music harmony perception: effects of shared syntactic integration resources beyond attention

Many studies have revealed shared music–language processing resources by finding an influence of music harmony manipulations on concurrent language processing. However, the nature of the shared resources has remained ambiguous. They have been argued to be syntax specific and thus due to shared syntactic integration resources. An alternative view regards them as related to general attention and, thus, not specific to syntax. The present experiments evaluated these accounts by investigating the influence of language on music. Participants were asked to provide closure judgements on harmonic sequences in order to assess the appropriateness of sequence endings. At the same time participants read syntactic garden-path sentences. Closure judgements revealed a change in harmonic processing as the result of reading a syntactically challenging word. We found no influence of an arithmetic control manipulation (experiment 1) or semantic garden-path sentences (experiment 2). Our results provide behavioural evidence for a specific influence of linguistic syntax processing on musical harmony judgements. A closer look reveals that the shared resources appear to be needed to hold a harmonic key online in some form of syntactic working memory or unification workspace related to the integration of chords and words. Overall, our results support the syntax specificity of shared music–language processing resources.
