Summary: The anterior cingulate cortex plays a key role in how the brain can simulate the results of different actions and make the best decisions.

Source: Zuckerman Institute

Our minds can help us make decisions by contemplating the future and predicting the consequences of our actions. Imagine, for instance, trying to find your way to a new restaurant near your home. Your brain can build a mental model of your neighborhood and plan the route you should take to get there.

Scientists have now found that a brain structure called the anterior cingulate cortex (ACC), known to be important for decision making, is involved in using such mental models to learn. A new study of mice published today in Neuron highlights sophisticated mental machinery that helps the brain simulate the results of different actions and make the best choice.

“The neurobiology of model-based learning is still poorly understood,” said Thomas Akam, PhD, a researcher at Oxford University and lead author on the new paper. “Here, we were able to identify a brain structure that is involved in this behavior and demonstrate that its activity encodes multiple aspects of the decision-making process.”

Deciphering how the brain builds mental models is essential to understanding how we adapt to change and make decisions flexibly: what we do when we discover that one of the roads on the way to that new restaurant is closed for construction, for example.

“These results were very exciting,” said senior author Rui Costa, DVM, PhD, Director and CEO of Columbia’s Zuckerman Institute, who started this research while an investigator at the Champalimaud Centre for the Unknown, where most of the data was collected. “These data identify the anterior cingulate cortex as a key brain region in model-based decision-making, more specifically in predicting what will happen in the world if we choose to do one particular action versus another.”

Model-based or model-free?

A big challenge in studying the neural basis of model-based learning is that it often operates in parallel with another approach called model-free learning. In model-free learning, the brain does not put a lot of effort into creating simulations. It simply relies on actions that have produced good outcomes in the past.

You might use a model-free mental approach when traveling to your favorite restaurant, for example. Because you’ve been there before, you don’t need to invest mental energy in plotting the route. You can simply follow your habitual path and let your mind focus on other things.

To isolate the contributions of these two cognitive schemes – model-based and model-free – the researchers set up a two-step puzzle for mice.

In this task, an animal first chooses one of two centrally located holes to poke its nose into. This action activates one of two other holes to the side, each of which has a certain probability of providing a drink of water.

“Just like in real life, the subject has to perform extended sequences of actions, with uncertain consequences, in order to obtain desired outcomes,” said Dr. Akam.

To do the task well, the mice had to figure out two key variables. The first was which hole on the side was more likely to provide a drink of water. The second was which of the holes in the center activated that side hole. Once the mice learned the task, they would opt for the action sequence that offered the best outcome. However, in addition to this model-based way of solving the puzzle, mice could also learn simple model-free predictions, e.g. “top is good,” based on which choice had generally led to reward in the past.

The researchers then changed up the experiment in ways that required the animals to be flexible. Every now and then, the side port more likely to provide a drink would switch – or the mapping between central and side ports would reverse.
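The task and the two learning strategies described above can be sketched as a small Python simulation of a model-free and a model-based learner on a simplified two-step task with reversals. This is a sketch under stated assumptions, not the study's task code. The article does not give these numbers, so all of them are hypothetical: the 80% chance that a central hole activates its "usual" side port (a feature borrowed from classic two-step designs), the 0.8/0.2 reward probabilities, the learning and exploration rates, and the 100-trial blocks. The class and function names are also invented for illustration.

```python
import random

# Hypothetical parameters chosen for illustration; not taken from the study.
P_COMMON = 0.8         # chance a central hole activates its usual side port
P_REWARD = (0.8, 0.2)  # water probability at the better / worse side port
ALPHA = 0.3            # learning rate shared by both learners
EPSILON = 0.1          # chance of a random exploratory choice
BLOCK = 100            # trials between task changes

def pick(values, rng):
    """Epsilon-greedy choice between the two central holes."""
    if rng.random() < EPSILON or values[0] == values[1]:
        return rng.randrange(2)
    return 0 if values[0] > values[1] else 1

class ModelFree:
    """Caches one value per central hole, updated directly from reward."""
    def __init__(self):
        self.q = [0.5, 0.5]

    def choose(self, rng):
        return pick(self.q, rng)

    def update(self, choice, side, reward):
        # The side port reached is ignored: only "did this choice pay off?"
        self.q[choice] += ALPHA * (reward - self.q[choice])

class ModelBased:
    """Learns side-port values plus a transition model, then plans."""
    def __init__(self):
        self.v = [0.5, 0.5]                # estimated value of each side port
        self.T = [[0.5, 0.5], [0.5, 0.5]]  # T[c][s]: estimated P(side s | choice c)

    def choose(self, rng):
        # Simulate each action's consequence with the learned model.
        q = [sum(self.T[c][s] * self.v[s] for s in range(2)) for c in range(2)]
        return pick(q, rng)

    def update(self, choice, side, reward):
        self.v[side] += ALPHA * (reward - self.v[side])
        for s in range(2):
            target = 1.0 if s == side else 0.0
            self.T[choice][s] += ALPHA * (target - self.T[choice][s])

def simulate(agent, n_trials, seed=0):
    """Run one agent; return (choice, side, common, reward) for each trial."""
    rng = random.Random(seed)
    good_side = 0     # side port currently more likely to give water
    mapping = [0, 1]  # central hole c usually activates side port mapping[c]
    log = []
    for t in range(n_trials):
        if t and t % BLOCK == 0:
            if (t // BLOCK) % 2:
                good_side = 1 - good_side  # reward probabilities switch
            else:
                mapping.reverse()          # central-to-side mapping reverses
        choice = agent.choose(rng)
        common = rng.random() < P_COMMON
        side = mapping[choice] if common else 1 - mapping[choice]
        p = P_REWARD[0] if side == good_side else P_REWARD[1]
        reward = 1.0 if rng.random() < p else 0.0
        agent.update(choice, side, reward)
        log.append((choice, side, common, reward))
    return log
```

For example, `simulate(ModelBased(), 1000)` returns a trial-by-trial log. The model-based learner recomputes its action values from `T` and `v` on every trial, so once it notices that the mapping has reversed, its choices can flip without relearning which side port is good. The model-free learner has to relearn from reward alone.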

The animals’ choices as things changed revealed what strategies they were using to learn.

“Model-free and model-based learning should generate different patterns of choices,” said Dr. Akam. “By looking at the subjects’ behavior, we were able to assess the contribution of either approach.”
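One textbook way to see these different patterns comes from the classic two-step task and is not necessarily the analysis this study used. It asks how often a subject repeats its previous first-step choice, split by whether that trial's transition was common or rare and whether it was rewarded. The analysis assumes that each central choice usually, but not always, activates the same side port. A purely model-free learner shows a main effect of reward. A purely model-based learner shows a transition-by-reward interaction: it stays after rewarded common and unrewarded rare trials and switches otherwise. The function names below are hypothetical.

```python
from collections import defaultdict

def stay_probabilities(log):
    """P(repeat the previous central choice), keyed by (common, rewarded).

    log: list of (choice, common, rewarded) tuples in trial order.
    """
    stays, counts = defaultdict(int), defaultdict(int)
    for (choice, common, rewarded), (next_choice, _, _) in zip(log, log[1:]):
        key = (common, rewarded)
        counts[key] += 1
        stays[key] += int(next_choice == choice)
    return {key: stays[key] / counts[key] for key in counts}

def signatures(p):
    """Return (reward main effect, transition-by-reward interaction).

    The first is the model-free signature, the second the model-based one.
    Assumes all four (common, rewarded) combinations occur in p.
    """
    reward_effect = (p[(True, True)] + p[(False, True)]) - (p[(True, False)] + p[(False, False)])
    interaction = (p[(True, True)] + p[(False, False)]) - (p[(True, False)] + p[(False, True)])
    return reward_effect, interaction

# A toy, hand-made log showing a perfectly model-based pattern.
toy_log = [(0, True, True), (0, False, True), (1, True, False),
           (0, False, False), (0, True, True)]
print(signatures(stay_probabilities(toy_log)))  # → (0.0, 2.0)
```

Real behavior mixes both signatures. Comparing their relative sizes is one rough way to gauge how much each strategy contributes.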

The team analyzed about 230,000 individual decisions and found that the mice were using model-based and model-free approaches in parallel.

“This confirmed that the task was suitable for studying the neural basis of these mechanisms,” said Dr. Costa. “We then moved on to the next step: investigating the neural basis of this behavior.”

A neural map of model-based learning

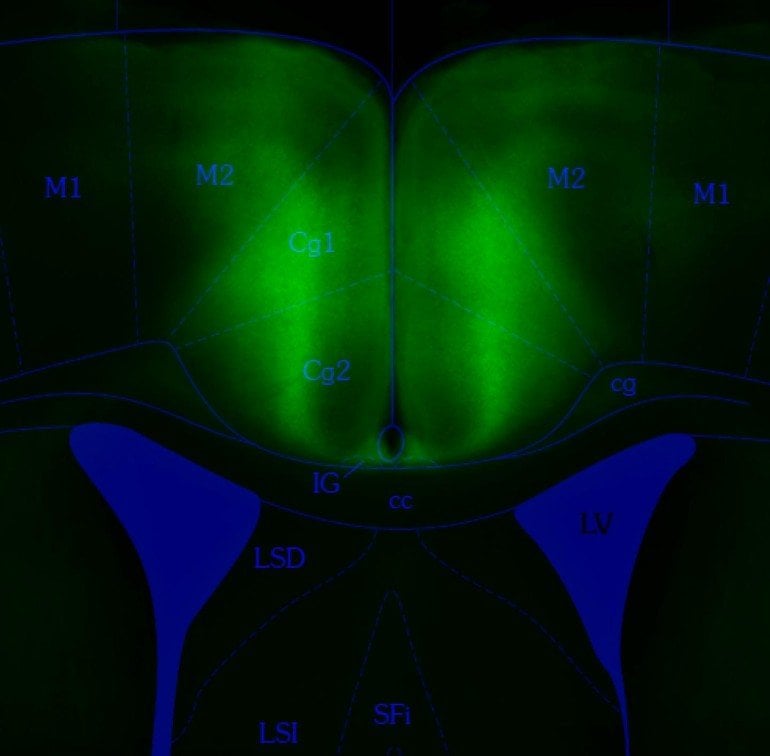

The team focused on a brain region called the anterior cingulate cortex (ACC).

“Previous studies established that ACC is involved in action selection and provided some evidence that it could be involved in model-based predictions,” Dr. Costa explained. “But no one had checked the activity of individual ACC neurons in a task designed to differentiate between these different types of learning.”

The researchers discovered a tight connection between the activity of ACC neurons and the behavior of their mice. Simply by looking at patterns of activity across groups of the cells, the scientists could decode whether the mouse was about to choose one hole or another, for example – or whether it was receiving a drink of water.
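The general idea of decoding a choice from population activity can be illustrated with a toy nearest-centroid decoder. This sketch runs on synthetic data generated inside the code. It does not use the study's recordings or reproduce its decoding method, which this article does not describe. The tuning strength, noise level, neuron count and function names are all hypothetical.

```python
import random

def synthetic_trials(n_trials, n_neurons, seed=0):
    """Fake population activity: each neuron fires slightly more before
    one of the two choices, plus Gaussian noise. Not real data."""
    rng = random.Random(seed)
    preference = [rng.choice((-1.0, 1.0)) for _ in range(n_neurons)]
    trials = []
    for _ in range(n_trials):
        choice = rng.randrange(2)
        sign = 1.0 if choice == 1 else -1.0
        activity = [0.5 * sign * w + rng.gauss(0.0, 1.0) for w in preference]
        trials.append((activity, choice))
    return trials

def fit_centroids(trials):
    """Mean activity pattern for each choice in the training set."""
    centroids = {}
    for label in (0, 1):
        patterns = [a for a, c in trials if c == label]
        centroids[label] = [sum(col) / len(patterns) for col in zip(*patterns)]
    return centroids

def decode(activity, centroids):
    """Predict the choice whose mean pattern is closest (Euclidean)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(activity, centroids[label]))

trials = synthetic_trials(600, n_neurons=50, seed=1)
train, test = trials[:400], trials[400:]
centroids = fit_centroids(train)
accuracy = sum(decode(a, centroids) == c for a, c in test) / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

The decoder is fit on one set of trials and scored on held-out trials, so high accuracy means the population pattern, not memorization, carries the choice information.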

In addition to representing the animal’s current location in the task, ACC neurons also encoded which state was likely to come next.

“This provided direct evidence that ACC is involved in making model-based predictions of the specific consequences of actions, not just whether they are good or bad,” said Dr. Akam.

ACC neurons also represented whether the outcome of an action was expected or surprising, potentially providing a mechanism for updating predictions when they turn out to be wrong.
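A standard way to formalize this kind of surprise in model-based reinforcement learning is a state prediction error, which measures how unexpected the state reached after an action was. The sketch below defines it as 1 minus the predicted probability of the observed state. That definition is an assumption chosen for simplicity (another common choice is the negative log probability), and the study's own measure may differ. The update step shows how such an error can drive learning: the change to the prediction for the observed state is exactly the learning rate times the error. Function names and values are hypothetical.

```python
def state_prediction_error(T, choice, observed):
    """Surprise at the observed state: 1 minus its predicted probability.
    T[c][s] is the learned probability that choice c leads to state s."""
    return 1.0 - T[choice][observed]

def update_transitions(T, choice, observed, alpha=0.2):
    """Nudge the predictions for `choice` toward what actually happened.
    The change for the observed state equals alpha * prediction error."""
    row = T[choice]
    for s in range(len(row)):
        target = 1.0 if s == observed else 0.0
        row[s] += alpha * (target - row[s])

T = [[0.8, 0.2], [0.2, 0.8]]            # hypothetical learned transition model
print(state_prediction_error(T, 0, 0))  # expected outcome: small error
print(state_prediction_error(T, 0, 1))  # rare outcome: large error
update_transitions(T, 0, 1, alpha=0.5)  # a surprise shifts the prediction
print(T[0])
```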

The team also turned off ACC neurons while the animals were trying to make decisions. This prevented the animals from responding flexibly as the situation changed, an indicator that they were having trouble using model-based predictions.

Understanding how the brain controls complex behaviors like planning and sequential decision making is a big challenge for contemporary neuroscience.

“Our study is one of the first to demonstrate that it is possible to study these aspects of decision-making in mice,” said Dr. Akam. “These results will allow us and others to build mechanistic understanding of flexible decision making.”

About this neuroscience research news

Contact: Zuckerman Institute

Image: The image is credited to Thomas Akam / Rui Costa / Champalimaud Centre for the Unknown

Original Research: Open access.

“The Anterior Cingulate Cortex Predicts Future States to Mediate Model-Based Action Selection” by Thomas Akam et al. Neuron

Abstract

The Anterior Cingulate Cortex Predicts Future States to Mediate Model-Based Action Selection

Highlights

- A novel two-step task disambiguates model-based and model-free RL in mice
- ACC represents the task state space, and reward is contextualized by state
- ACC predicts future states given chosen actions and encodes state prediction surprise
- Inhibiting ACC prevents state transitions, but not rewards, from influencing choice

Summary

Behavioral control is not unitary. It comprises parallel systems, model based and model free, that respectively generate flexible and habitual behaviors. Model-based decisions use predictions of the specific consequences of actions, but how these are implemented in the brain is poorly understood. We used calcium imaging and optogenetics in a sequential decision task for mice to show that the anterior cingulate cortex (ACC) predicts the state that actions will lead to, not simply whether they are good or bad, and monitors whether outcomes match these predictions. ACC represents the complete state space of the task, with reward signals that depend strongly on the state where reward is obtained but minimally on the preceding choice. Accordingly, ACC is necessary only for updating model-based strategies, not for basic reward-driven action reinforcement. These results reveal that ACC is a critical node in model-based control, with a specific role in predicting future states given chosen actions.