Summary: A new neuroimaging study reveals how different parts of the brain represent an object’s location in depth compared to its 2D location.

Source: Ohio State University.

Scientists record the visual cortex combining 2-D and depth information.

We live in a three-dimensional world, but everything we see is first recorded on our retinas in only two dimensions.

So how does the brain represent 3-D information? In a new study, researchers have shown for the first time how different parts of the brain represent an object’s location in depth compared to its 2-D location.

Researchers at The Ohio State University had volunteers view simple images with 3-D glasses while they were in a functional magnetic resonance imaging (fMRI) scanner. The fMRI showed what was happening in the participants’ brains while they looked at the three-dimensional images.

The results showed that as an image first enters our visual cortex, the brain mostly codes its two-dimensional location. But as processing continues, the emphasis shifts to encoding depth information as well.

“As we move to later and later visual areas, the representations care more and more about depth in addition to 2-D location. It’s as if the representations are being gradually inflated from flat to 3-D,” said Julie Golomb, senior author of the study and assistant professor of psychology at Ohio State.

“The results are surprising because a lot of people assumed we might find depth information in early visual areas. What we found is that even though there might be individual neurons that have some depth information, they don’t seem to be organized into any map or pattern for 3-D space perception.”

Golomb said many scientists have investigated where and how the brain processes two-dimensional information, and others have looked at how the brain perceives depth. Researchers have found that the brain must infer depth, either by comparing the slightly different views from the two eyes (called binocular disparity) or from other visual cues.

But this is the first study to directly compare both 2-D and depth information at one time to see how 3-D representations (2-D plus depth) emerge and interact in the brain, she said.

The study was led by Nonie Finlayson, a former postdoctoral researcher at Ohio State, who is now at University College London. Golomb and Xiaoli Zhang, a graduate student at Ohio State, are the other co-authors. The study was published recently in the journal NeuroImage.

Participants in the study viewed a screen in the fMRI scanner while wearing 3-D glasses. They were told to focus on a dot in the middle of the screen. While they watched the dot, objects appeared at different peripheral locations: to the left, right, top, or bottom of the dot (the horizontal and vertical dimensions). Each object also appeared at a different depth relative to the dot, either behind or in front of it (a depth effect produced by the 3-D glasses).

The fMRI data allowed the researchers to see what was happening in the brains of the participants when the various objects appeared on the screen. In this way, the scientists could compare how activity patterns in the visual cortex differed when participants saw objects in different locations.

“The pattern of activity we saw in the early visual cortex allowed us to tell if someone was seeing an object that was to the left, right, above or below the fixation dot,” Golomb said. “But we couldn’t tell from the early visual cortex if they were seeing something in front of or behind the dot.

“In the later areas of visual cortex, there was a bit less information about the objects’ two-dimensional locations. But the tradeoff was that we could also decode what position they were perceiving in depth.”
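To make the decoding logic in these quotes more concrete, here is a minimal Python sketch of correlation-based pattern decoding on synthetic data. It is not the authors’ analysis pipeline: the voxel count, the tuning weights given to the simulated “early” and “later” areas, the noise level, and the function names (`simulate_area`, `decoding_index`) are hypothetical choices for illustration only. Each simulated area produces two independent runs of voxel patterns for eight conditions (four 2-D positions × two depths), and a dimension counts as decodable when patterns sharing a value on that dimension correlate more strongly across runs than patterns that differ on it.

```python
import math
import random
from itertools import product

# Hypothetical stimulus grid loosely mirroring the study's design:
# four peripheral 2D positions crossed with two depth planes.
LOCATIONS = ["left", "right", "top", "bottom"]
DEPTHS = ["near", "far"]
N_VOXELS = 200  # assumed size of a simulated region of interest


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_area(loc_weight, depth_weight, noise, rng):
    """Return two independent 'runs' of synthetic voxel patterns per condition.

    Each voxel gets random tuning to 2D location and to depth; loc_weight and
    depth_weight (assumed values) set how strongly each dimension drives the area.
    """
    loc_tuning = {loc: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for loc in LOCATIONS}
    depth_tuning = {d: [rng.gauss(0, 1) for _ in range(N_VOXELS)] for d in DEPTHS}

    def one_run():
        # Same underlying tuning every run; only the measurement noise is fresh.
        return {
            (loc, d): [loc_weight * a + depth_weight * b + rng.gauss(0, noise)
                       for a, b in zip(loc_tuning[loc], depth_tuning[d])]
            for loc, d in product(LOCATIONS, DEPTHS)
        }

    return one_run(), one_run()


def decoding_index(run_a, run_b, dim):
    """Mean same-minus-different correlation along one dimension.

    dim=0 scores 2D location, dim=1 scores depth. Every condition in run A is
    compared with every condition in run B, so the other dimension is balanced
    across the 'same' and 'different' pairs (a location-tolerant measure).
    """
    same, diff = [], []
    for cond_a, pat_a in run_a.items():
        for cond_b, pat_b in run_b.items():
            r = pearson(pat_a, pat_b)
            (same if cond_a[dim] == cond_b[dim] else diff).append(r)
    return sum(same) / len(same) - sum(diff) / len(diff)


rng = random.Random(0)  # fixed seed so the simulation is reproducible
areas = {
    # "early": driven by 2D location only; "later": 2D and depth equally (assumed)
    "early": simulate_area(loc_weight=1.0, depth_weight=0.0, noise=1.0, rng=rng),
    "later": simulate_area(loc_weight=0.5, depth_weight=0.5, noise=1.0, rng=rng),
}
results = {}
for name, (run_a, run_b) in areas.items():
    results[name] = {
        "2d": decoding_index(run_a, run_b, dim=0),
        "depth": decoding_index(run_a, run_b, dim=1),
    }
    print(f"{name:>5}: 2D index {results[name]['2d']:.2f}, "
          f"depth index {results[name]['depth']:.2f}")
```

Because every condition is compared with every other, the dimension not being scored is balanced between “same” and “different” pairs. With these assumed weights, the simulated early area should show a large 2-D index and a depth index near zero, while the simulated later area should show a smaller 2-D index alongside a clearly positive depth index. That echoes the trade-off Golomb describes, but only because the weights were chosen to produce it.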

Golomb said future studies will look to more closely quantify and model the nature of three-dimensional visual representations in the brain.

“This is an important step in understanding how we perceive our rich three-dimensional environment,” she said.

Source: Julie Golomb – Ohio State University

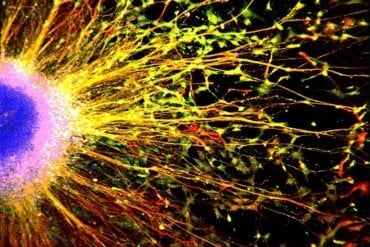

Image Source: NeuroscienceNews.com image is credited to Ohio State University.

Original Research: Abstract for “Differential patterns of 2D location versus depth decoding along the visual hierarchy” by Nonie J. Finlayson, Xiaoli Zhang, and Julie D. Golomb in NeuroImage. Published online February 15, 2017. doi:10.1016/j.neuroimage.2016.12.039

MLA: Ohio State University. “How the Brain Sees the World in 3D.” NeuroscienceNews. NeuroscienceNews, 21 March 2017. <https://neurosciencenews.com/3d-brain-vision-6270/>.

APA: Ohio State University (2017, March 21). How the Brain Sees the World in 3D. NeuroscienceNews. Retrieved March 21, 2017 from https://neurosciencenews.com/3d-brain-vision-6270/

Chicago: Ohio State University. “How the Brain Sees the World in 3D.” https://neurosciencenews.com/3d-brain-vision-6270/ (accessed March 21, 2017).

Abstract

Differential patterns of 2D location versus depth decoding along the visual hierarchy

Visual information is initially represented as 2D images on the retina, but our brains are able to transform this input to perceive our rich 3D environment. While many studies have explored 2D spatial representations or depth perception in isolation, it remains unknown if or how these processes interact in human visual cortex. Here we used functional MRI and multi-voxel pattern analysis to investigate the relationship between 2D location and position-in-depth information. We stimulated different 3D locations in a blocked design: each location was defined by horizontal, vertical, and depth position. Participants remained fixated at the center of the screen while passively viewing the peripheral stimuli with red/green anaglyph glasses. Our results revealed a widespread, systematic transition throughout visual cortex. As expected, 2D location information (horizontal and vertical) could be strongly decoded in early visual areas, with reduced decoding higher along the visual hierarchy, consistent with known changes in receptive field sizes. Critically, we found that the decoding of position-in-depth information tracked inversely with the 2D location pattern, with the magnitude of depth decoding gradually increasing from intermediate to higher visual and category regions. Representations of 2D location information became increasingly location-tolerant in later areas, where depth information was also tolerant to changes in 2D location. We propose that spatial representations gradually transition from 2D-dominant to balanced 3D (2D and depth) along the visual hierarchy.
