Summary: Researchers from UC Berkeley have developed a new technique that uses deep networks and AI to colorize images.

Source: Association for Computing Machinery.

UC Berkeley computer scientists develop smarter, enhanced data-driven colorization system for graphic artists.

For decades, image colorization has enjoyed enduring public interest. Though it has its share of detractors, there is something powerful about the simple act of adding color to black-and-white imagery, whether as a way of bridging memories between generations or of expressing artistic creativity. However, colorizing by hand can be very time-consuming and requires expertise: typical professional workflows take hours or days to perfect a single image. A team of researchers has proposed a new technique that leverages deep networks so that novices, even those with limited artistic ability, can quickly produce reasonable results.

The research, entitled “Real-Time User Guided Colorization with Learned Deep Priors,” is authored by a team at UC Berkeley led by Alexei A. Efros, Professor of Electrical Engineering and Computer Sciences. They will present their work at SIGGRAPH 2017, which spotlights the most innovative work in computer graphics research and interactive techniques worldwide. The annual conference will be held in Los Angeles, 30 July to 3 August.

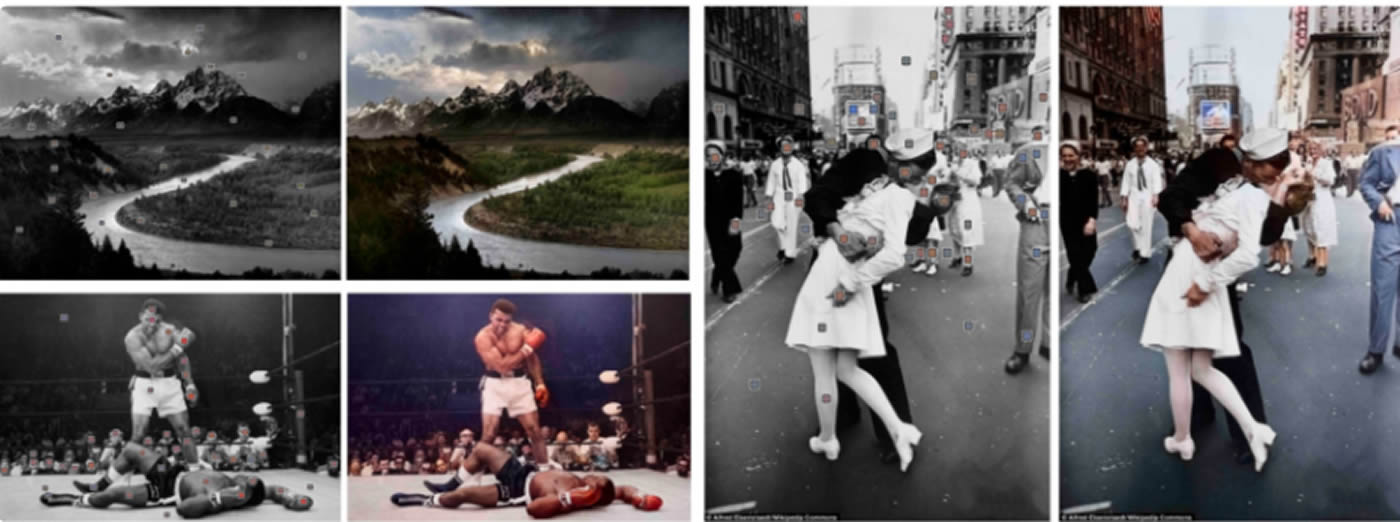

In prior work, the team trained a deep network on big visual data (a million images) to automatically colorize grayscale images with no user intervention. While the results were sometimes very good, the system was prone to certain artifacts. One major limitation was that the color of many objects, shirts for example, may be inherently ambiguous, yet the system could ultimately settle on only one possibility.

“The goal of our previous project was to just get a single, plausible colorization,” says Richard Zhang, a coauthor and PhD candidate, advised by Professor Efros. “If the user didn’t like the result, or wanted to change something, they were out of luck. We realized that empowering the user and adding them in the loop was actually a necessary component for obtaining desirable results.”

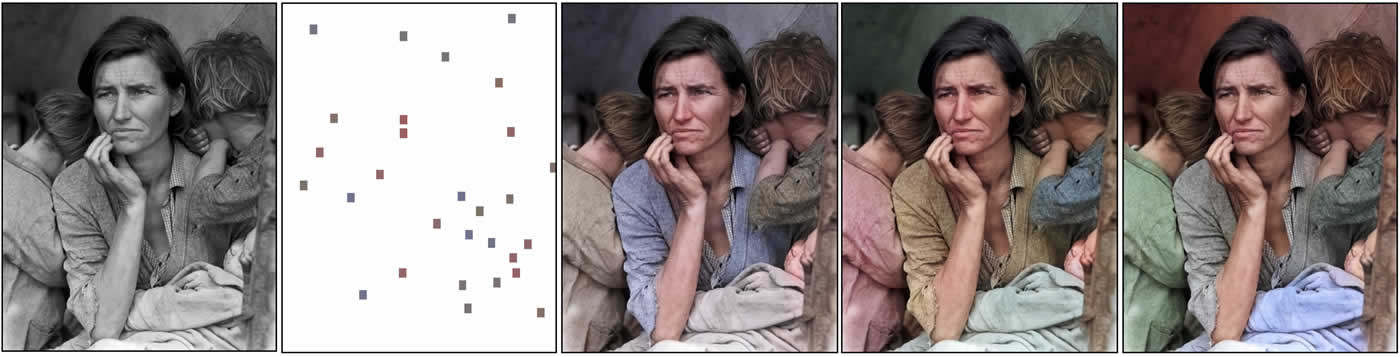

The proposed system uses AI to colorize a grayscale image (left), guided by user color ‘hints’ (second), providing the capability for quickly generating multiple plausible colorizations (middle to right). NeuroscienceNews.com image is credited to Efros et al.

The new network is trained on grayscale images along with simulated user inputs. It improves on previous automatic colorization systems by letting the user correct and customize the colorization in real time. The user provides guidance by adding colored points, or “hints”, which the system then propagates to the rest of the image. The network also learns common colors for different objects and recommends suitable colors to the user. Although the system is trained only on natural images, in which elephants, for instance, are typically brown or gray, it will also follow the user’s whims, enabling out-of-the-box coloring. A pink elephant, though unnatural, is not off limits.
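The article does not spell out how hints reach the network or how user inputs are "simulated" during training. A minimal sketch, assuming hints are passed as extra input channels (a two-channel map holding each hint's color in the a/b channels of Lab color space, plus a binary mask marking clicked pixels) and that training hints are drawn from the ground-truth colors of random points, might look like the following. The function names, the geometric distribution for the number of hints, and the patch size are illustrative assumptions, not the authors' code.

```python
import numpy as np


def encode_hints(gray_l, hints):
    """Stack a grayscale L channel with sparse color hints (hypothetical encoding).

    gray_l: (H, W) array, the lightness channel.
    hints:  list of (row, col, a, b) tuples, one per user click.
    Returns a (4, H, W) float32 array: L, hint a, hint b, hint mask.
    """
    h, w = gray_l.shape
    hint_ab = np.zeros((2, h, w), dtype=np.float32)
    mask = np.zeros((1, h, w), dtype=np.float32)
    for row, col, a, b in hints:
        hint_ab[0, row, col] = a
        hint_ab[1, row, col] = b
        mask[0, row, col] = 1.0  # marks "a hint exists here", so a=b=0 is not ambiguous
    return np.concatenate([gray_l[None].astype(np.float32), hint_ab, mask], axis=0)


def simulate_hints(true_ab, rng, p=1 / 8, max_half_width=4):
    """Hypothetical training-time hint simulator.

    true_ab: (2, H, W) ground-truth a/b color channels.
    Draws a geometrically distributed number of hints at uniformly random
    locations; each hint's color is the mean a/b over a small square patch.
    """
    _, h, w = true_ab.shape
    n = rng.geometric(p) - 1  # shift so zero hints (fully automatic mode) is possible
    hints = []
    for _ in range(n):
        row, col = int(rng.integers(0, h)), int(rng.integers(0, w))
        r = int(rng.integers(0, max_half_width + 1))  # patch half-width
        patch = true_ab[:, max(row - r, 0):row + r + 1, max(col - r, 0):col + r + 1]
        a, b = patch.mean(axis=(1, 2))
        hints.append((row, col, float(a), float(b)))
    return hints


rng = np.random.default_rng(0)
L = rng.random((8, 8))
ab = rng.uniform(-1.0, 1.0, (2, 8, 8))
x = encode_hints(L, simulate_hints(ab, rng))
print(x.shape)  # (4, 8, 8)
```

In this sketch, allowing zero hints lets a single network cover both the fully automatic case and the user-guided case, and averaging over a patch mimics a user picking a representative color rather than an exact pixel value.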

To evaluate the system, the researchers tested their interface on novice users, challenging them to produce a realistic colorization of a randomly selected grayscale image. Even with minimal training and limited time (just one minute per image), these users quickly learned to produce colorizations that often fooled human judges in a real-versus-fake test.
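The article does not describe the test protocol in detail. Assuming a two-alternative forced-choice design, in which each judge sees a real photo and a colorization of the same scene and picks the one they believe is real, results of this kind are commonly summarized as a "fooling rate". A minimal sketch, with a hypothetical function name and data layout:

```python
import math


def fooling_rate(picked_fake):
    """Summarize hypothetical real-vs-fake judgments.

    picked_fake: list of booleans, True when the judge chose the
    colorization as the real photo.
    Returns (rate, standard_error). Under a two-alternative forced
    choice, a rate of 0.5 means judges could not tell the two apart.
    """
    n = len(picked_fake)
    if n == 0:
        raise ValueError("need at least one judgment")
    rate = sum(picked_fake) / n
    # Binomial standard error of the estimated proportion.
    se = math.sqrt(rate * (1 - rate) / n)
    return rate, se


rate, se = fooling_rate([True, False, False, True, False])
print(rate)  # 0.4
```

The standard error is included because, with only a handful of judgments, a fooling rate can swing widely by chance.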

Deep networks are increasingly used in graphics. Perhaps, once remaining challenges are overcome, such as reducing memory usage and hardware requirements and integrating with existing image-editing tools, a system like this one could find its way into commercial image-manipulation software.

The software is available for download.

Funding: The research was supported, in part, by NSF SMA-1514512, a Google Grant, the Berkeley Artificial Intelligence Research Lab (BAIR) and a hardware donation by NVIDIA.

Source: Lisa Claydon and Paul Catley – Association for Computing Machinery

Image Source: NeuroscienceNews.com images credited to Efros et al.

Video Source: Video credited to Richard Zhang.

Original Source: The study was presented at ACM SIGGRAPH 2017.


MLA: Association for Computing Machinery. “Colorizing Images With Deep Neural Networks.” NeuroscienceNews, 6 August 2017. <neural-network-colorizing-images-7250/>.

APA: Association for Computing Machinery (2017, August 6). Colorizing Images With Deep Neural Networks. NeuroscienceNews. Retrieved August 6, 2017 from neural-network-colorizing-images-7250/

Chicago: Association for Computing Machinery. “Colorizing Images With Deep Neural Networks.” neural-network-colorizing-images-7250/ (accessed August 6, 2017).