Smile! It makes everyone in the room feel better because they, consciously or unconsciously, are smiling with you. Growing evidence shows that an instinct for facial mimicry allows us to empathize with and even experience other people’s feelings. If we can’t mirror another person’s face, it limits our ability to read and properly react to their expressions. A Review of this emotional mirroring appears February 11 in Trends in Cognitive Sciences.

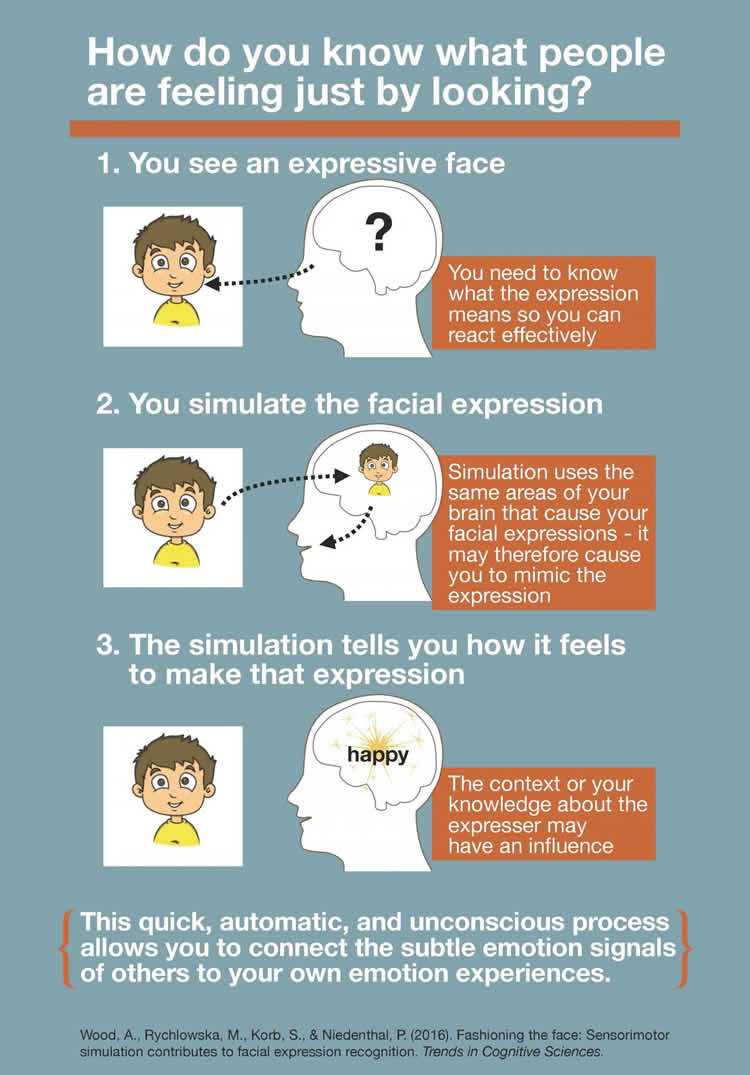

In their paper, Paula Niedenthal and Adrienne Wood, social psychologists at the University of Wisconsin, and colleagues describe how people in social situations simulate others’ facial expressions to create emotional responses in themselves. For example, if you’re with a friend who looks sad, you might “try on” that sad face yourself–without realizing you’re doing so. “Trying on” your friend’s expression helps you recognize what they’re feeling by associating it with times in the past when you made that expression. Humans extract this emotional meaning from facial expressions in only a few hundred milliseconds.

“You reflect on your emotional feelings and then you generate some sort of recognition judgment, and the most important thing that results is that you take the appropriate action–you approach the person or you avoid the person,” Niedenthal says. “Your own emotional reaction to the face changes your perception of how you see the face, in such a way that provides you more information about what it means.”

A person’s ability to recognize and “share” others’ emotions can be inhibited when they can’t mimic faces, even by something as simple as long-term pacifier use. Such difficulty is a common complaint among people with central or peripheral motor diseases, such as facial paralysis from a stroke or Bell’s palsy, or with nerve damage from plastic surgery. Niedenthal notes that the same would not be true for people with congenital paralysis, because if you’ve never had the ability to mimic facial expressions, you will have developed compensatory ways of interpreting emotions.

People with social disorders associated with mimicry and/or emotion-recognition impairments, like autism, can experience similar challenges. “There are some symptoms in autism where lack of facial mimicry may in part be due to suppression of eye contact,” Niedenthal says. In particular, “it may be overstimulating socially to engage in eye contact, but under certain conditions, if you encourage eye contact, the benefit is spontaneous or automatic facial mimicry.”

Niedenthal next wants to explore which brain mechanism supports facial expression recognition. A better understanding of the mechanism behind sensorimotor simulation, she says, will give us a better idea of how to treat related disorders.

Funding: The researchers are supported by the National Science Foundation.

Source: Joseph Caputo – Cell Press

Image Source: The image is credited to Adrienne Wood.

Original Research: Abstract for “Fashioning the Face: Sensorimotor Simulation Contributes to Facial Expression Recognition” by Adrienne Wood, Magdalena Rychlowska, Sebastian Korb, and Paula Niedenthal in Trends in Cognitive Sciences. Published online February 11, 2016. doi:10.1016/j.tics.2015.12.010

Abstract

Fashioning the Face: Sensorimotor Simulation Contributes to Facial Expression Recognition

When we observe a facial expression of emotion, we often mimic it. This automatic mimicry reflects underlying sensorimotor simulation that supports accurate emotion recognition. Why this is so is becoming more obvious: emotions are patterns of expressive, behavioral, physiological, and subjective feeling responses. Activation of one component can therefore automatically activate other components. When people simulate a perceived facial expression, they partially activate the corresponding emotional state in themselves, which provides a basis for inferring the underlying emotion of the expresser. We integrate recent evidence in favor of a role for sensorimotor simulation in emotion recognition. We then connect this account to a domain-general understanding of how sensory information from multiple modalities is integrated to generate perceptual predictions in the brain.

Trends

People’s recognition and understanding of others’ facial expressions is compromised by experimental (e.g., mechanical blocking) and clinical (e.g., facial paralysis and long-term pacifier use) disruptions to sensorimotor processing in the face.

Emotion perception involves automatic activation of pre- and primary-motor and somatosensory cortices, and the inhibition of activity in sensorimotor networks reduces performance on subtle or challenging emotion recognition tasks.

Sensorimotor simulation flexibly supports not only conceptual processing of facial expression but also, through cross-modal influences on visual processing, the building of a complete percept of the expression.

While automatic and presumably nonconscious, sensorimotor simulation of facial expressions is modulated by the perceiver’s social context and motivational state.
