Summary: Relating machine learning to biological learning, researchers say that while the two approaches aren’t interchangeable, they can be harnessed to offer insights into how the human brain works.

Source: Carnegie Mellon University

Pinpointing how neural activity changes with learning is anything but black and white. Recently, some have posited that learning in the brain, or biological learning, can be thought of in terms of optimization, which is how learning occurs in artificial networks such as those used in computers and robots.

A new perspectives piece co-authored by Carnegie Mellon University and University of Pittsburgh researchers relates machine learning to biological learning, showing that the two approaches aren’t interchangeable yet can be harnessed to offer valuable insights into how the brain works.

“How we quantify the changes we see in the brain and in a subject’s behavior during learning is ever-evolving,” says Byron Yu, professor of biomedical engineering and electrical and computer engineering.

“It turns out that in machine learning and artificial intelligence, there is a well-developed framework for how a system learns, known as optimization. We and others in the field have been thinking about how the brain learns in comparison to this framework, which was developed to train artificial agents to learn.”

The optimization viewpoint suggests that activity in the brain should change during learning in a mathematically prescribed way, akin to how the activity of artificial neurons changes in a specific way when they are trained to drive a robot or play chess.
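To make the idea of a “mathematically prescribed” change concrete, here is a minimal sketch of optimization in an artificial system. It assumes a single linear layer trained by plain gradient descent on a squared-error loss, a textbook toy rather than any model from the Neuron paper; the function name `gradient_descent` and the synthetic data are hypothetical. The point is that every weight update, and therefore every change in the model’s output “activity,” is fully determined by the gradient of the loss.

```python
import numpy as np

def gradient_descent(X, y, lr=0.1, steps=200, seed=0):
    """Toy optimizer (hypothetical example): fit w so that X @ w approximates y.

    Loss: L(w) = 0.5 * mean((X @ w - y) ** 2)
    Each step moves w directly opposite the gradient of L, so the change
    in w is prescribed entirely by the current error.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(size=X.shape[1])        # random initial weights
    losses = []
    for _ in range(steps):
        error = X @ w - y                  # output "activity" minus target
        losses.append(0.5 * np.mean(error ** 2))
        grad = X.T @ error / len(y)        # dL/dw
        w = w - lr * grad                  # step downhill on the loss
    return w, losses

# Synthetic task (hypothetical data): recover known weights from noiseless samples.
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w

w, losses = gradient_descent(X, y)
print("initial loss:", losses[0], "final loss:", losses[-1])
print("learned weights:", np.round(w, 2))
```

Because the loss here is a convex quadratic and the step size is small, the loss shrinks on every step; the perspectives piece argues that real neural activity during learning includes changes that this kind of single-objective picture does not readily explain.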

“One thing we are interested in understanding is how the learning process unfolds over time, not just looking at a snapshot of before and after learning occurs,” explains Jay Hennig, a recent Ph.D. graduate in neural computation and machine learning at Carnegie Mellon.

“In this perspectives piece, we offer three main takeaways that are important to consider when thinking about why neural activity might change during learning in ways that cannot be readily explained in terms of optimization.”

The three takeaways are the inflexibility of neural variability throughout learning, the use of multiple learning processes even during simple tasks, and the presence of large task-nonspecific activity changes.

“It’s tempting to draw from successful examples of artificial learning agents and assume the brain must do whatever they do,” suggests Aaron Batista, professor of bioengineering at the University of Pittsburgh.

“However, one specific difference between artificial and biological learning systems is that the artificial system usually does just one thing and does it really well. Activity in the brain is quite different, with many processes happening at the same time. We and others have observed that there are things happening in the brain that machine learning models cannot yet account for.”

Steve Chase, professor of biomedical engineering at Carnegie Mellon and the Neuroscience Institute, adds, “We see a theme building and a direction for the future. By drawing attention to these areas where neuroscience can inform machine learning and vice versa, we aim to connect them to the optimization view to ultimately understand, on a deeper level, how learning unfolds in the brain.”

This work is co-authored with Emily Oby, research faculty in bioengineering at the University of Pittsburgh, and Darby Losey, Ph.D. student in neural computation and machine learning at CMU. The group’s work is ongoing and done in collaboration with the Center for the Neural Basis of Cognition, a cross-university research and educational program between Carnegie Mellon and the University of Pittsburgh that leverages each institution’s strengths to investigate the cognitive and neural mechanisms that give rise to biological intelligence and behavior.

About this AI and learning research news

Author: Sara Vaccar

Contact: Sara Vaccar – Carnegie Mellon University

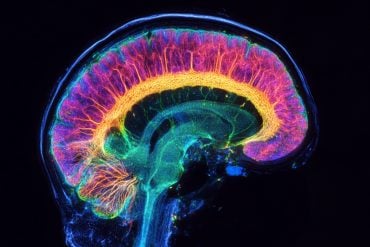

Image: The image is in the public domain

Original Research: The findings will appear in Neuron.