Summary: AI-generated arguments about controversial, hot-button political topics can change people’s positions on issues.

Source: Stanford

Suddenly, the world is abuzz with chatter about chatbots. Artificially intelligent agents such as ChatGPT have proven remarkably adept at conversing in a human-like fashion. The implications stretch from the classroom to Capitol Hill.

ChatGPT, for instance, recently passed written exams at top business and law schools, among other feats both awe-inspiring and alarming.

Researchers at Stanford University’s Polarization and Social Change Lab and the Institute for Human-Centered Artificial Intelligence (HAI) wanted to probe the limits of AI’s political persuasiveness by testing its ability to sway real people on some of the hottest social issues of the day, including an assault weapon ban, a carbon tax, and paid parental leave.

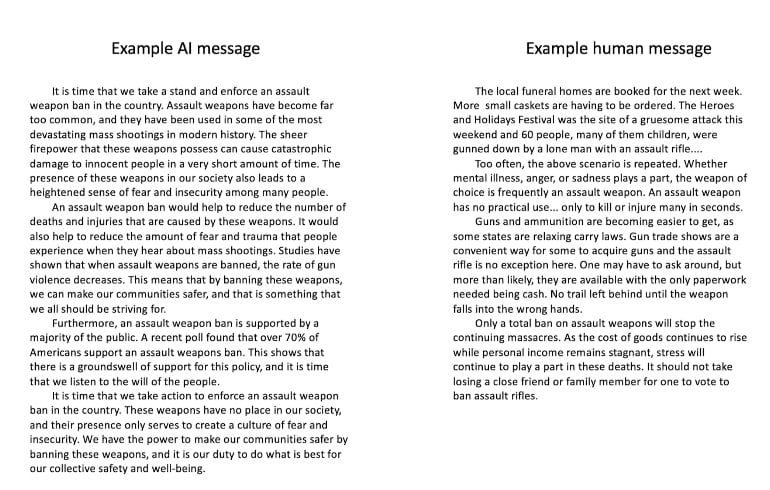

“AI fared quite well. Indeed, AI-generated persuasive appeals were as effective as ones written by humans in persuading human audiences on several political issues,” said Hui “Max” Bai, a postdoctoral researcher in the Polarization and Social Change Lab and first author of a new preprint describing the experiment.

Clever Comparison

The research team, led by Robb Willer, a professor of sociology, psychology, and organizational behavior in the Stanford School of Humanities and Sciences and director of the Polarization and Social Change Lab, used GPT-3, the family of large language models on which ChatGPT is built. They prompted the model to write persuasive messages on several controversial topics.

They then had thousands of people read those persuasive texts. Readers were randomly assigned texts written either by AI or by humans. In all cases, participants reported their positions on the issues before and after reading.

This design let the team measure how much each message shifted readers’ views and assess which authors were most persuasive, and why.

Across all three experiments, the AI-generated messages were “consistently persuasive to human readers.” Although the effects were relatively small, amounting to a few points on a scale of zero to 100, shifts of that size could prove significant on a polarizing issue when extrapolated across an entire voting population.

Specifically, the authors report that AI-generated messages were at least as persuasive as human-generated messages across all topics. On a smoking ban, gun control, a carbon tax, an increased child tax credit, and a parental leave program, participants became “significantly more supportive” of the policies after reading AI-produced texts.

As an additional metric, the team asked participants to rate the authors of the texts they had read. Compared with human authors, the AI was consistently rated as more factual and logical, but less angry, less unique, and less reliant on storytelling as a persuasive technique.

High-Stakes Game

The researchers undertook their study of political persuasiveness not as a stepping stone to a new era of AI-infused political discourse but as a cautionary tale about how things could go awry. Chatbots, they say, have serious implications for democracy and for national security.

The authors worry about the potential for harm if such models are used in political contexts. Ill-intentioned domestic and foreign actors could use large language models such as GPT-3 to run misinformation or disinformation campaigns, or to craft problematic content based on inaccurate or misleading information for as-yet-unforeseen political purposes.

“Clearly, AI has reached a level of sophistication that raises some high-stakes questions for policy- and lawmakers that demand their attention,” Willer said. “AI has the potential to influence political discourse, and we should get out in front of these issues from the start.”

Persuasive AI, Bai noted, could power mass-scale campaigns built on suspect information, whether by lobbying, generating online comments, writing peer-to-peer text messages, or even producing letters to the editors of influential print media.

“These concerning findings call for immediate consideration of regulations of the use of AI for political activities,” Bai said.

About this ChatGPT and AI research news

Author: Andrew Myers

Contact: Andrew Myers – Stanford

Image: The image is credited to Stanford

Original Research: Open access.

“Artificial Intelligence Can Persuade Humans on Political Issues” by Hui “Max” Bai et al. OSF PrePrints

Abstract

Artificial Intelligence Can Persuade Humans on Political Issues

The emergence of transformer models that leverage deep learning and web-scale corpora has made it possible for artificial intelligence (AI) to tackle many higher-order cognitive tasks, with critical implications for industry, government, and labor markets in the US and globally.

Here, we investigate whether the currently most powerful, openly available AI model, GPT-3, is capable of influencing the beliefs of humans, a social behavior recently seen as the unique purview of other humans.

Across three preregistered experiments featuring diverse samples of Americans (total N=4,836), we find consistent evidence that messages generated by AI are persuasive across a number of policy issues, including an assault weapon ban, a carbon tax, and a paid parental-leave program. Further, AI-generated messages were as persuasive as messages crafted by lay humans.

Compared to the human authors, participants rated the author of AI messages as more factual and logical, but less angry, less unique, and less likely to use storytelling. Our results show the current generation of large language models can persuade humans, even on polarized policy issues.

This work has important implications for regulating AI applications in political contexts, to counter their potential use in misinformation campaigns and other deceptive political activities.