Summary: A new AI algorithm recognizes the complex range of emotions evoked when people listen to pieces of music.

Source: UPF Barcelona

Music has been of great importance throughout human history, and emotions have always been the ultimate reason for all musical creations. When writing a song, a composer tries to express a particular feeling, perhaps causing concert-goers to laugh, cry or even shiver.

We use music on a day-to-day basis to regulate our emotions or revive a memory. Hence, knowing how to recognize the emotions that music produces has been and will continue to be very important.

Major music platforms such as Spotify or Deezer use classifications, generated by artificial intelligence (AI) algorithms, based on the emotions that music arouses in its listeners.

However, people do not always agree on these emotions, whether those that music arouses in us or those that we perceive in the music itself. A song like “Happy Birthday” can express “happiness” because it is in a major key and has a fast tempo, but it can induce “sadness” if it reminds us of a person who is no longer with us. Each of us perceives music in a very personal way, and this perception can be influenced by general factors such as musical preferences, cultural background and the language of the song.

Defining this aspect matters because an AI algorithm needs what is called “ground truth” or “labels”: the basis on which the algorithm “learns”. For example, for a photo of a golden labrador on Instagram, it is highly likely that we would all agree that the label should be “dog”. But for a symphony by Beethoven, the labels can range from “happy” to “nostalgic”, depending on the listener and the context.
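The labelling problem described above can be illustrated with a minimal Python sketch. It is not the researchers' method: the annotations are invented for illustration, and the function names `label_distribution` and `agreement` are hypothetical. It only shows why a single “ground truth” label fits the photo but not the symphony.

```python
from collections import Counter


def label_distribution(annotations):
    """Turn a list of per-listener labels into relative frequencies."""
    if not annotations:
        raise ValueError("at least one annotation is required")
    counts = Counter(annotations)
    total = len(annotations)
    return {label: count / total for label, count in counts.items()}


def agreement(annotations):
    """Share of listeners who chose the most common label (1.0 = unanimous)."""
    if not annotations:
        raise ValueError("at least one annotation is required")
    counts = Counter(annotations)
    return counts.most_common(1)[0][1] / len(annotations)


# Hypothetical annotations, invented for illustration only.
photo_labels = ["dog", "dog", "dog", "dog"]
symphony_labels = ["happy", "nostalgic", "happy", "sad"]

print(agreement(photo_labels), label_distribution(photo_labels))
print(agreement(symphony_labels), label_distribution(symphony_labels))
```

When agreement is low, keeping the whole distribution of labels instead of a single majority label is one possible way to preserve the differences between listeners.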

In a recent publication in the journal IEEE Signal Processing Magazine, researchers with the Music Technology Group (MTG) at Pompeu Fabra University, together with scientists from the Academia Sinica in Taiwan, the University of Hong Kong, and Durham University in the United Kingdom, among others, propose a new conceptual framework that helps to characterize music in terms of emotions and thus build models that are better adapted to people’s characteristics.

“Recognition of emotions in music is one of the most complex tasks of musical description and computational modeling”, explains the doctoral student Juan Sebastián Gómez Cañón, first author of the study. “People’s opinions vary greatly and it is difficult to find the reasons why a section of a song can arouse a certain emotion. It is a very subjective task and using artificial intelligence algorithms for it still requires a great deal of research”.

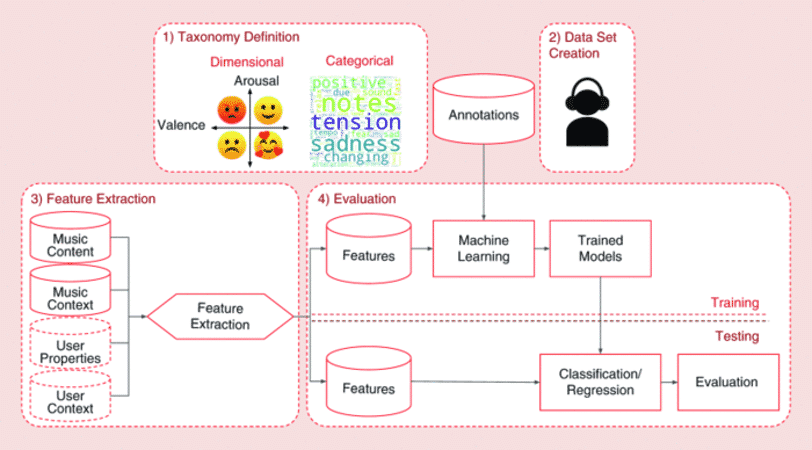

The main goal of the research was to create a guide on the operation of current music emotion recognition (MER) systems. Hence they propose an approach in which the human being is at the center of the design of the system in order to combat the problem of subjectivity.

The research has allowed the authors to propose areas where the research field needs to go into greater depth, such as the accessibility of open-source data, the reproducibility of the experiments, the relevance of people’s cultural context, and the need to study the ethical implications of the possible applications of MER. Gómez adds that “most of the research on music and emotions has been carried out by and for people from Western, Educated, Industrialized, Rich, and Democratic (WEIRD) countries. It is crucial to go further in order to evaluate non-Western traditional music, collect data from diverse listeners and democratize this research to different musical cultures in the world”.

They also included proposals for handling the ethical implications of these types of applications, such as privacy, the bias of the systems toward Western listeners and the impact they can have on our well-being. “When an algorithm can accurately predict the emotion that a type of music can arouse, the most important question will be how we can ensure that these algorithms will be used for our well-being”, Gómez concludes.

Get involved in TROMPA, a citizen science project

In order to better understand our opinions about emotions in music, the MTG, led by Emilia Gómez, co-author of the study, is collecting data using citizen science through the TROMPA project (Towards Richer Online Music Public-Domain Archives). This project is funded by the European Union’s Horizon 2020 programme.

TROMPA is still active and asks participants to listen to a musical theme and write down the various emotions that it arouses or expresses. “With TROMPA, we have developed tools that combine artificial intelligence with human intelligence to connect public domain music repositories, use them to create beneficial applications for different music communities, and enrich these repositories for their future use”, Dr. Emilia Gómez comments.

“With everyone’s collaboration, we can create a personalized model that fits their opinions”, continues Juan Sebastián Gómez. “We make musical recommendations from different parts of the world (Latin America, Africa and the Middle East). The idea of this platform is for our participants to have fun, get to know music of the world, and learn a little more about the relationship between music and emotion, so we invite you all to participate!”.

The project has involved institutions such as Delft University of Technology (TU Delft), the University of Music and Performing Arts, Vienna, and the Royal Concertgebouw Orchestra, Amsterdam, as well as small companies such as Barcelona’s Voctrolabs.

About this AI research news

Author: Gerard Vall-llovera Calmet

Contact: Gerard Vall-llovera Calmet – UPF Barcelona

Image: The image is credited to UPF

Original Research: Closed access.

“Music Emotion Recognition: Toward new, robust standards in personalized and context sensitive applications” by J.S. Gómez-Cañón, E. Cano, T. Eerola, P. Herrera, X. Hu, Y.-H. Yang, E. Gómez. IEEE Signal Processing Magazine

Abstract

Music Emotion Recognition: Toward new, robust standards in personalized and context sensitive applications

Emotion is one of the main reasons why people engage and interact with music. Songs can express our inner feelings, produce goosebumps, bring us to tears, share an emotional state with a composer or performer, or trigger specific memories.

Interest in a deeper understanding of the relationship between music and emotion has motivated researchers from various areas of knowledge for decades, including computational researchers. Imagine an algorithm capable of predicting the emotions that a listener perceives in a musical piece, or one that dynamically generates music that adapts to the mood of a conversation in a film—a particularly fascinating and provocative idea.

These algorithms typify music emotion recognition (MER), a computational task that attempts to automatically recognize either the emotional content in music or the emotions induced by music in the listener [3]. To do so, emotionally relevant features are extracted from music. The features are processed, evaluated, and then associated with certain emotions.
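The three steps named above (extract features, process them, associate them with emotions) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how any real MER system or the authors' framework works: the two features (tempo and major/minor mode), the training examples, the scaling constant and the nearest-centroid rule are all invented here, and feature extraction from audio is replaced by hand-written numbers.

```python
import math

# Hypothetical, hand-made examples standing in for extracted features:
# (tempo in BPM, mode: 1.0 = major, 0.0 = minor) paired with an emotion label.
TRAINING = [
    ((140.0, 1.0), "happy"),
    ((128.0, 1.0), "happy"),
    ((66.0, 0.0), "sad"),
    ((72.0, 0.0), "sad"),
]


def scale(features):
    """Process raw features so both dimensions lie roughly in [0, 1]."""
    tempo, mode = features
    return (tempo / 200.0, mode)  # 200 BPM is an assumed upper bound


def centroids(examples):
    """Average the scaled features of every emotion label."""
    sums = {}
    for features, label in examples:
        x = scale(features)
        total, n = sums.get(label, ((0.0, 0.0), 0))
        sums[label] = ((total[0] + x[0], total[1] + x[1]), n + 1)
    return {label: (t[0] / n, t[1] / n) for label, (t, n) in sums.items()}


def predict(features, model):
    """Associate features with the emotion whose centroid is closest."""
    x = scale(features)
    return min(model, key=lambda label: math.dist(x, model[label]))


model = centroids(TRAINING)
print(predict((132.0, 1.0), model))
print(predict((60.0, 0.0), model))
```

A model like this assigns one label per piece for every listener, which is exactly the limitation the article describes: it ignores who is listening and in what context.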

MER is one of the most challenging high-level music description problems in music information retrieval (MIR), an interdisciplinary research field that focuses on the development of computational systems to help humans better understand music collections.

MIR integrates concepts and methodologies from several disciplines, including music theory, music psychology, neuroscience, signal processing, and machine learning.