Summary: Artificial neural networks help researchers uncover new clues as to why people on the autism spectrum have trouble interpreting facial expressions.

Source: Tohoku University

People with autism spectrum disorder have difficulty interpreting facial expressions.

Using a neural network model that simulates brain function on a computer, a group of researchers based at Tohoku University has unraveled how this difficulty arises.

The journal Scientific Reports published the results on July 26, 2021.

“Humans recognize different emotions, such as sadness and anger, by looking at facial expressions. Yet little is known about how we come to recognize different emotions based on the visual information of facial expressions,” said paper coauthor Yuta Takahashi.

“It is also not clear what changes occur in this process that lead to people with autism spectrum disorder struggling to read facial expressions.”

The research group employed predictive processing theory to better understand this process. According to this theory, the brain constantly predicts the next sensory stimulus and adapts when its prediction is wrong. Sensory information, such as facial expressions, helps reduce prediction error.
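The predict-then-adapt loop described by the theory can be illustrated with a toy example. The sketch below is not the researchers' model. It tracks a single number, and the function name `track_signal` and the `learning_rate` parameter are hypothetical choices made for illustration only.

```python
def track_signal(observations, learning_rate=0.5):
    """Predict each observation, then correct the prediction by a fraction of the error."""
    prediction = 0.0
    errors = []
    for observed in observations:
        # Prediction error: the gap between what arrived and what was expected.
        error = observed - prediction
        errors.append(abs(error))
        # Adapt: move the prediction toward the observation.
        prediction += learning_rate * error
    return prediction, errors


# A constant stimulus: the error shrinks as the prediction catches up.
final_prediction, errors = track_signal([1.0] * 10)
```

With a steady input, each pass through the loop halves the remaining error, which is the basic sense in which incoming sensory information reduces prediction error.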

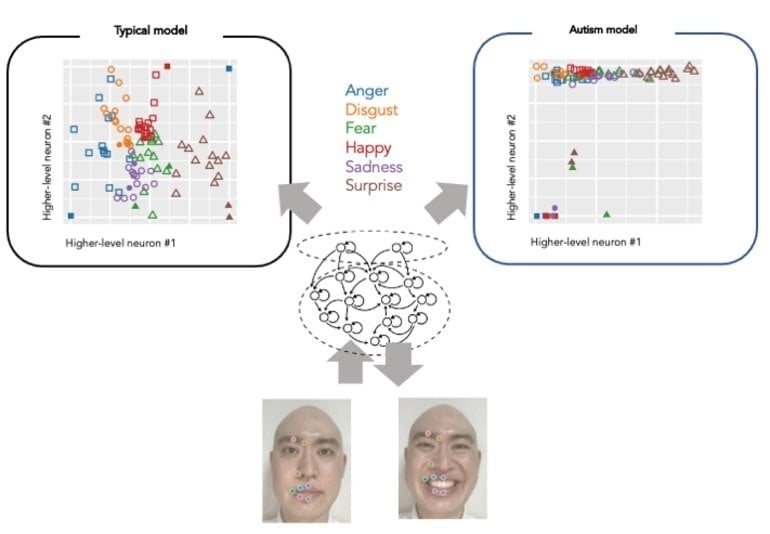

The artificial neural network model incorporated the predictive processing theory and reproduced the developmental process by learning to predict how parts of the face would move in videos of facial expressions. After this training, clusters of emotions self-organized in the model’s higher-level neuron space, even though the model was never told which emotion each facial expression in the videos corresponded to.

The model could generalize to unknown facial expressions that were not included in the training, reproducing the movements of facial parts and minimizing prediction errors.
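One way to picture how a trained model can handle an unseen input is to keep its learned weights fixed and adjust only a higher-level state until the predicted features match the observed ones. The sketch below shows that idea with a small linear mapping rather than the study's neural network. `infer_latent`, `WEIGHTS`, and the numeric values are all hypothetical.

```python
def infer_latent(observed, weights, steps=200, step_size=0.1):
    """Adjust a latent vector so that fixed weights reproduce `observed`.

    weights[i][j] maps latent unit j to observed feature i. The weights stay
    fixed; only the latent state changes, by gradient descent on the squared
    prediction error.
    """
    n_latent = len(weights[0])
    latent = [0.0] * n_latent
    for _ in range(steps):
        predicted = [sum(w * z for w, z in zip(row, latent)) for row in weights]
        errors = [o - p for o, p in zip(observed, predicted)]
        # Each latent unit moves in the direction that shrinks the error.
        for j in range(n_latent):
            latent[j] += step_size * sum(e * row[j] for e, row in zip(errors, weights))
    predicted = [sum(w * z for w, z in zip(row, latent)) for row in weights]
    total_error = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return latent, total_error


# Hypothetical "learned" weights: two latent units drive three observed features.
WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
latent, total_error = infer_latent([0.5, -0.2, 0.3], WEIGHTS)
```

Here the observed features are consistent with a latent state of roughly `[0.5, -0.2]`, so the search settles there and the remaining prediction error is close to zero.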

Following this, the researchers conducted experiments in which they induced abnormalities in the neurons’ activities to investigate the effects on learning development and cognitive characteristics. In the model where the heterogeneity of activity in the neural population was reduced, the generalization ability also decreased, and the formation of emotional clusters in higher-level neurons was inhibited. This led to a tendency to fail to identify the emotion of unknown facial expressions, a symptom similar to one seen in autism spectrum disorder.

According to Takahashi, the study used a neural network model to show that predictive processing theory can explain emotion recognition from facial expressions.

“We hope to further our understanding of the process by which humans learn to recognize emotions and the cognitive characteristics of people with autism spectrum disorder,” added Takahashi. “The study will help advance developing appropriate intervention methods for people who find it difficult to identify emotions.”

About this AI and autism research news

Contact: Press Office – Tohoku University

Image: The image is credited to Yuta Takahashi et al.

Original Research: Open access.

“Neural network modeling of altered facial expression recognition in autism spectrum disorders based on predictive processing framework” by Yuta Takahashi, Shingo Murata, Hayato Idei, Hiroaki Tomita & Yuichi Yamashita. Scientific Reports

Abstract

Neural network modeling of altered facial expression recognition in autism spectrum disorders based on predictive processing framework

The mechanism underlying the emergence of emotional categories from visual facial expression information during the developmental process is largely unknown. Therefore, this study proposes a system-level explanation for understanding the facial emotion recognition process and its alteration in autism spectrum disorder (ASD) from the perspective of predictive processing theory. Predictive processing for facial emotion recognition was implemented as a hierarchical recurrent neural network (RNN).

The RNNs were trained to predict the dynamic changes of facial expression movies for six basic emotions without explicit emotion labels as a developmental learning process, and were evaluated by the performance of recognizing unseen facial expressions for the test phase. In addition, the causal relationship between the network characteristics assumed in ASD and ASD-like cognition was investigated.

After the developmental learning process, emotional clusters emerged in the natural course of self-organization in higher-level neurons, even though emotional labels were not explicitly instructed.

In addition, the network successfully recognized unseen test facial sequences by adjusting higher-level activity through the process of minimizing precision-weighted prediction error. In contrast, the network simulating altered intrinsic neural excitability demonstrated reduced generalization capability and impaired emotional clustering in higher-level neurons. Consistent with previous findings from human behavioral studies, an excessive precision estimation of noisy details underlies this ASD-like cognition.

These results support the idea that impaired facial emotion recognition in ASD can be explained by altered predictive processing, and provide possible insight for investigating the neurophysiological basis of affective contact.