Summary: Matching the location of a face to the speech sounds a person is producing significantly increases our ability to understand them, especially in noisy environments.

Source: American Institute of Physics

Seeing a person’s face while they talk greatly improves our ability to understand their speech. Previous studies indicate that the relative timing of the voice and the talker’s mouth movements is critical to this audio-visual speech benefit. Whether the benefit also depends on spatial alignment between faces and voices, however, has been largely unstudied.

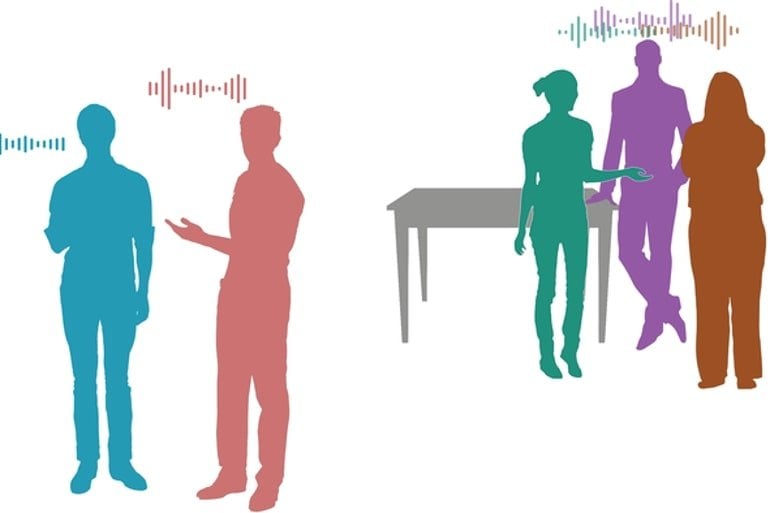

Researchers found that matching the locations of faces to the speech sounds they produce significantly improves speech comprehension, especially in noisy settings where other people are talking.

In the Journal of the Acoustical Society of America, researchers from Harvard University, the University of Minnesota, the University of Rochester, and Carnegie Mellon University describe a set of online experiments that mimicked aspects of distracting scenes, designed to learn more about how we focus on one audio-visual talker while ignoring others.

“If there’s only one multisensory object in a scene, our group and others have shown that the brain is perfectly willing to combine sounds and visual signals that come from different locations in space,” said author Justin Fleming. “It’s when there’s multisensory competition that spatial cues take on more importance.”

The researchers first asked participants to attend to one talker’s speech while ignoring another talker. Each talker’s face and voice originated either from the same location or from different locations. Participants performed significantly better when the face matched where the voice was coming from.

Next, they found that when only the voices were presented, task performance decreased if participants directed their gaze toward the distracting voice.

Finally, the researchers showed that spatial alignment between faces and voices mattered more when the background noise was louder, suggesting that the brain relies more on audio-visual spatial cues in challenging sensory environments.

The pandemic forced the group to get creative about conducting such research with participants over the internet.

“We had to learn about — and, in some cases, create — several tasks to make sure participants were seeing and hearing the stimuli properly, wearing headphones, and following instructions,” Fleming said.

Fleming hopes the findings will lead to improved designs for hearing devices and better handling of sound in virtual and augmented reality. The team plans to build on this work by bringing additional real-world elements into their experiments.

“Historically, we have learned a great deal about our sensory systems from studies involving simple flashes and beeps,” he said. “However, this and other studies are now showing that when we make our tasks more complicated in ways that better simulate the real world, new patterns of results start to emerge.”

About this auditory neuroscience research news

Author: Larry Frum

Source: American Institute of Physics

Contact: Larry Frum – American Institute of Physics

Image: The image is credited to Justin Fleming

Original Research: Open access.

“Spatial alignment between faces and voices improves selective attention to audio-visual speech” by Justin Fleming et al. Journal of the Acoustical Society of America

Abstract

Spatial alignment between faces and voices improves selective attention to audio-visual speech

The ability to see a talker’s face improves speech intelligibility in noise, provided that the auditory and visual speech signals are approximately aligned in time. However, the importance of spatial alignment between corresponding faces and voices remains unresolved, particularly in multi-talker environments.

In a series of online experiments, we investigated this using a task that required participants to selectively attend a target talker in noise while ignoring a distractor talker.

In experiment 1, we found improved task performance when the talkers’ faces were visible, but only when corresponding faces and voices were presented in the same hemifield (spatially aligned).

In experiment 2, we tested for possible influences of eye position on this result. In auditory-only conditions, directing gaze toward the distractor voice reduced performance, but this effect could not fully explain the cost of audio-visual (AV) spatial misalignment.

Lowering the signal-to-noise ratio (SNR) of the speech from +4 to −4 dB increased the magnitude of the AV spatial alignment effect (experiment 3), but accurate closed-set lipreading caused a floor effect that influenced results at lower SNRs (experiment 4).

Taken together, these results demonstrate that spatial alignment between faces and voices contributes to the ability to selectively attend AV speech.
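For readers unfamiliar with decibels, the SNR range in experiment 3 can be unpacked with the standard definition of signal-to-noise ratio as a power ratio. This is a sketch that assumes SNR refers to speech power relative to noise power, which the abstract does not spell out:

$$
\mathrm{SNR}_{\mathrm{dB}} = 10\log_{10}\frac{P_{\text{speech}}}{P_{\text{noise}}}
\quad\Longrightarrow\quad
\frac{P_{\text{speech}}}{P_{\text{noise}}} = 10^{\mathrm{SNR}_{\mathrm{dB}}/10}
$$

$$
+4\ \text{dB}:\ 10^{0.4} \approx 2.51, \qquad -4\ \text{dB}:\ 10^{-0.4} \approx 0.40
$$

Under this assumption, lowering the SNR from +4 to −4 dB takes the speech from roughly 2.5 times the noise power to roughly 0.4 times it. That 8 dB drop is about a 6.3-fold change in the power ratio.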