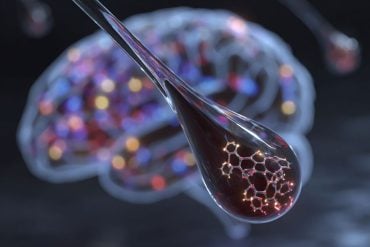

Summary: Researchers have identified two distinct processes that are triggered in the brain when a person is exposed to prolonged or repeated sensory input.

Source: University of Queensland

How the human brain adapts to external stimulation – an important mechanism for species survival – has been made clearer by University of Queensland (UQ) research.

Queensland Brain Institute (QBI) researchers Dr Reuben Rideaux and Professor Jason Mattingley have discovered that two distinct processes are triggered in the human brain when it is exposed to prolonged or repeated sensory inputs.

“When light enters the eye, neurons in visual areas of the brain begin to fire, and scientists have long debated whether the brain adapts to this prolonged input because the neurons get fatigued, or instead fire more selectively to sharpen perception,” Dr Rideaux said.

“Our work has shown for the first time that both processes occur, but they become active at different points in time.”

The research team designed an experiment to measure a phenomenon called the tilt-aftereffect – a visual illusion in which prolonged exposure to a visual stimulus causes shifts in subsequent perceived orientations.

“If you stare for a few seconds at a patch of black and white stripes rotated slightly clockwise from vertical, and then look at a perfectly vertical pattern, those vertical stripes will appear to be tilted slightly counterclockwise,” Dr Rideaux explained.

The electrical activity of volunteers’ brains was measured using electroencephalography (EEG) at the start of the experiment and again after they had looked at the patterns.

The results were compared against a sophisticated computational model of a working brain.

“We simulated different hypotheses for how adaptation changes brain activity and discovered that neither the ‘fatigue’ nor the ‘sharpening’ account alone could explain what was happening,” Dr Rideaux said.

“But when we combined the two, we started to see a match.

“We discovered visual neurons initially show fatigue during the adaptation phase, but after a few hundred milliseconds, behave in a manner that suggests they are sharpening perception.”

Professor Mattingley said the process could be compared to completing a jigsaw puzzle.

“Normally you have some idea of what the puzzle should look like when it’s finished, but while you’re putting the pieces together you must pay attention to the fine details on each piece,” Professor Mattingley said.

“The brain combines information about what the final image might look like with the incoming sensory information conveyed by the eyes, allowing us to recognise what we’re seeing.”

Professor Mattingley said the results helped explain one of the most intricate processes in the brain and provided a platform for further research into what happens when brain damage or disease causes the system to break down.

“Most of us can recognise familiar objects and sounds effortlessly, but the computations the brain needs to perform are very complicated and not well understood,” Professor Mattingley said.

“Having a better understanding of how sensory systems work in a healthy brain can tell us more about what happens when the system malfunctions, paving the way to better diagnosis and treatment of sensory impairments in the future.”

About this sensory processing research news

Author: Merrett Pye

Contact: Merrett Pye – University of Queensland

Image: The image is in the public domain

Original Research: Closed access.

“Distinct early and late neural mechanisms regulate feature-specific sensory adaptation in the human visual system” by Reuben Rideaux et al. PNAS

Abstract

Distinct early and late neural mechanisms regulate feature-specific sensory adaptation in the human visual system

A canonical feature of sensory systems is that they adapt to prolonged or repeated inputs, suggesting the brain encodes the temporal context in which stimuli are embedded.

Sensory adaptation has been observed in the central nervous systems of many animal species, using techniques sensitive to a broad range of spatiotemporal scales of neural activity. Two competing models have been proposed to account for the phenomenon.

One assumes that adaptation reflects reduced neuronal sensitivity to sensory inputs over time (the “fatigue” account); the other posits that adaptation arises due to increased neuronal selectivity (the “sharpening” account).
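To make the two accounts concrete, the sketch below shows one common way of expressing them for a single orientation-tuned channel. It is an illustration only, not the model used in the study: the von Mises-style tuning curve, the function names, and the `strength` and `kappa` values are all assumptions chosen for readability.

```python
import math

BASE_KAPPA = 2.0  # assumed tuning sharpness before adaptation


def tuning_curve(theta_deg, preferred_deg, gain=1.0, kappa=BASE_KAPPA):
    """Response of one channel; orientation is periodic over 180 degrees."""
    delta = math.radians(2.0 * (theta_deg - preferred_deg))
    return gain * math.exp(kappa * (math.cos(delta) - 1.0))


def fatigued(theta_deg, preferred_deg, adaptor_deg, strength=0.5):
    """Fatigue account: channels tuned near the adaptor respond less (lower gain)."""
    closeness = tuning_curve(adaptor_deg, preferred_deg)  # 1 at the adaptor
    return tuning_curve(theta_deg, preferred_deg, gain=1.0 - strength * closeness)


def sharpened(theta_deg, preferred_deg, adaptor_deg, strength=0.5):
    """Sharpening account: channels near the adaptor become more selective
    (narrower tuning) while keeping the same peak response."""
    closeness = tuning_curve(adaptor_deg, preferred_deg)
    kappa = BASE_KAPPA * (1.0 + strength * closeness)
    return tuning_curve(theta_deg, preferred_deg, kappa=kappa)
```

Under these assumptions, fatigue lowers the peak of an adapted channel without changing its width, whereas sharpening leaves the peak intact and reduces the response to orientations away from the channel's preference.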

To adjudicate between these accounts, we exploited the well-known “tilt aftereffect”, which reflects adaptation to orientation information in visual stimuli.
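As a rough intuition for how adaptation can shift perceived orientation, the toy simulation below reads out orientation from a bank of tuned channels using a population vector, with and without a gain reduction around the adaptor. This is a hypothetical sketch, not the inverted encoding analysis reported in the paper; the channel count, tuning shape, adaptation strength, and the convention that 0 degrees is vertical with positive angles clockwise are all assumptions.

```python
import cmath
import math


def response(stimulus_deg, preferred_deg, gain=1.0, kappa=2.0):
    """Assumed von Mises-style tuning, periodic over 180 degrees."""
    delta = math.radians(2.0 * (stimulus_deg - preferred_deg))
    return gain * math.exp(kappa * (math.cos(delta) - 1.0))


def decode(stimulus_deg, adaptor_deg=None, strength=0.5, n_channels=36):
    """Population-vector estimate of orientation, in degrees."""
    total = 0j
    for i in range(n_channels):
        preferred = i * 180.0 / n_channels
        gain = 1.0
        if adaptor_deg is not None:
            # Fatigue: channels tuned close to the adaptor lose gain.
            gain -= strength * response(adaptor_deg, preferred)
        r = response(stimulus_deg, preferred, gain=gain)
        # Double the angle so that 0 and 180 degrees map to the same direction.
        total += r * cmath.exp(1j * math.radians(2.0 * preferred))
    return math.degrees(cmath.phase(total)) / 2.0


baseline = decode(0.0)                   # vertical grating, no adaptation
adapted = decode(0.0, adaptor_deg=15.0)  # vertical grating after a clockwise adaptor
print(baseline, adapted)  # adapted is negative: repelled away from the adaptor
```

In this simplified readout, weakening the channels near a clockwise adaptor pulls the estimate of a vertical grating counterclockwise, which is the direction of the tilt aftereffect. It does not capture the later sharpening stage described in the study.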

We recorded whole-brain activity with millisecond precision from human observers as they viewed oriented gratings before and after adaptation, and used inverted encoding modeling to characterize feature-specific neural responses.

We found that both fatigue and sharpening mechanisms contribute to the tilt aftereffect, but that they operate at different points in the sensory processing cascade to produce qualitatively distinct outcomes.

Specifically, fatigue operates during the initial stages of processing, consistent with tonic inhibition of feedforward responses, whereas sharpening occurs ~200 ms later, consistent with feedback or local recurrent activity.

Our findings reconcile two major accounts of sensory adaptation, and reveal how this canonical process optimizes the detection of change in sensory inputs through efficient neural coding.