Researchers from Trinity College Dublin have identified the precise moment our brains convert speech sounds into meaning. They have, for the first time, shown that fine-grained speech processing details can be extracted from electrical brain signals measured through the scalp.

Understanding speech comes very easily to most of us, but science has struggled to characterise how our brains convert sound waves hitting the ear drum into meaningful words and sentences. However, this challenge has been addressed today with the publication of the new approach in leading journal Current Biology.

Electroencephalography, or EEG, detects electrical activity in the brain, which produces unique signatures as billions of nerve cells communicate with one another. In this study, the researchers showed that EEG is sensitive to phonetic representations of speech.

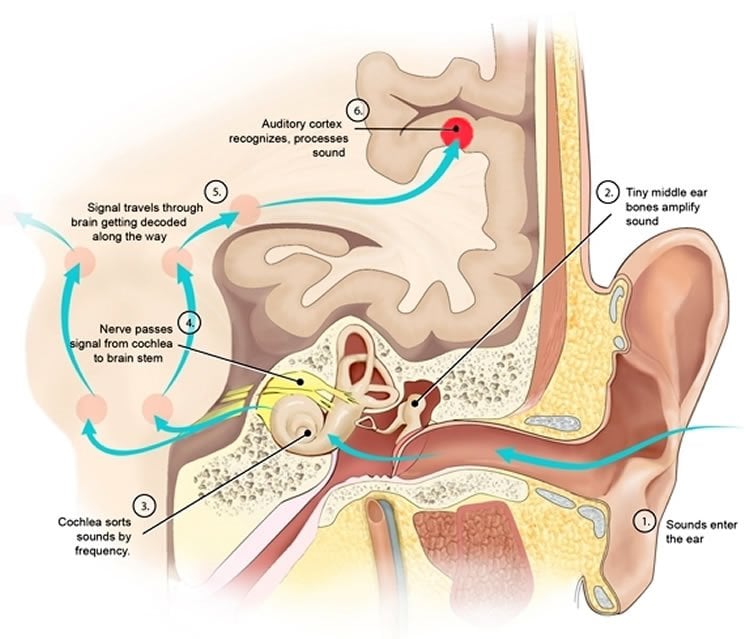

Human speech is a remarkable feat of evolution. When somebody speaks they do so by combining exquisite control of their breath, an incredibly complicated series of tongue, lip and jaw movements and activation and deactivation of their larynx. What follows is a complex series of sound vibrations that travels through the air, combines with sounds from all sorts of other sources, including of course other speakers, and arrives at the human ear.

The brain then somehow has to disentangle this mess of sounds to determine which sounds are coming from where, and what each sound actually represents. In the context of speech this means turning an array of noises (hums, pops, fizzes, clicks) into meaningful units of speech such as syllables and words.

What is even more remarkable is that the brain can easily recognise the same word despite dramatic differences in the sounds that hit the ear drum, such as when that word is spoken in a quiet room or in a noisy restaurant, or is shouted, whispered or sung, by a man or a woman.

“One of the key aspects of speech is that the same word can be spoken in different ways,” explained Ussher Assistant Professor in Active Implantable Medical Devices in Trinity’s School of Engineering and Institute of Neuroscience, Edmund Lalor, who was part of the team that made the discovery.

“Different speakers have different accents, there can be reverberations in the room, and people change how they speak depending on the emotion they are trying to convey. But people still need to categorise a specific word even though the sound hitting their ear can be very different.”

As a result, it has been assumed for a long time that a stage must exist when the brain assesses the different patterns of frequencies in a sound and suddenly recognises these as belonging to a particular unit of speech. It has been difficult to get neural measurements of that stage because animals don’t speak like us and MRI scans are too slow to highlight it.

There is a lot more work to be done to fully understand the specific stages of speech processing that can be captured by the new approach, but the researchers expect it will have a number of important applications.

Dr Lalor added: “We are really excited by the potential applications. We think the approach we have developed will allow researchers to study how speech and language processing develop in infants and why people with certain disorders have such difficulty in understanding speech, for example. There are also opportunities to help develop technology for people with hearing impairments, such as hearing aids and cochlear implants.”

Funding: This work was funded by the Irish Research Council.

Source: Thomas Deane – Trinity College Dublin

Image Credit: The image is in the public domain

Original Research: Abstract for “Low-Frequency Cortical Entrainment to Speech Reflects Phoneme-Level Processing” by Giovanni M. Di Liberto, James A. O’Sullivan, and Edmund C. Lalor in Current Biology. Published online September 24 2015 doi:10.1016/j.cub.2015.08.030

Abstract

Low-Frequency Cortical Entrainment to Speech Reflects Phoneme-Level Processing

Highlights

•EEG reflects categorical processing of phonemes within continuous speech

•EEG is best modeled when representing speech as acoustic signal plus phoneme labels

•Neural delta and theta bands reflect this speech-specific cortical activity

•Specific speech articulatory features are discriminable in EEG responses

Summary

The human ability to understand speech is underpinned by a hierarchical auditory system whose successive stages process increasingly complex attributes of the acoustic input. It has been suggested that to produce categorical speech perception, this system must elicit consistent neural responses to speech tokens (e.g., phonemes) despite variations in their acoustics. Here, using electroencephalography (EEG), we provide evidence for this categorical phoneme-level speech processing by showing that the relationship between continuous speech and neural activity is best described when that speech is represented using both low-level spectrotemporal information and categorical labeling of phonetic features. Furthermore, the mapping between phonemes and EEG becomes more discriminative for phonetic features at longer latencies, in line with what one might expect from a hierarchical system. Importantly, these effects are not seen for time-reversed speech. These findings may form the basis for future research on natural language processing in specific cohorts of interest and for broader insights into how brains transform acoustic input into meaning.

“Low-Frequency Cortical Entrainment to Speech Reflects Phoneme-Level Processing” by Giovanni M. Di Liberto, James A. O’Sullivan, and Edmund C. Lalor in Current Biology. Published online September 24 2015 doi:10.1016/j.cub.2015.08.030