Psychologists have hypothesized that when we try to understand the scenery we see, we work through a pre-ordained set of priorities. A new study questions that idea by providing evidence that people simply make the easiest distinctions first.

Psychology researchers who have hypothesized that we classify scenery by following some order of cognitive priorities may have been overlooking something simpler. New evidence suggests that the fastest categorizations our brains make are simply the ones where the necessary distinction is easiest.

There are many ways we parse scenery. Is it navigable or obstructed? Natural or man-made? A lake or a river? A face or not a face? In many previous experiments, researchers have found that some levels of categorization seem special because they occur earlier than others, which led to the hypothesis that the brain follows a prescribed set of priorities. One example, the “superordinate advantage,” holds that people first determine the global, or “superordinate,” character of a scene before assigning it to a more specific “basic” category. Judging “indoor vs. outdoor,” the hypothesis goes, not only happens before “kitchen vs. bathroom,” but must happen first.

After gathering the new data published in the journal PLOS Computational Biology, senior author Thomas Serre, assistant professor of cognitive, linguistic and psychological sciences at Brown University, isn’t so sure.

“Whatever is happening in the visual system might not be as sophisticated as we thought,” Serre said.

Of categorization and computation

Serre and co-authors Imri Sofer and Sébastien Crouzet wanted to see whether the main predictor of how people first categorized a scene was merely the “discriminability” of the categorization, a measure of the ease of making the needed distinction.

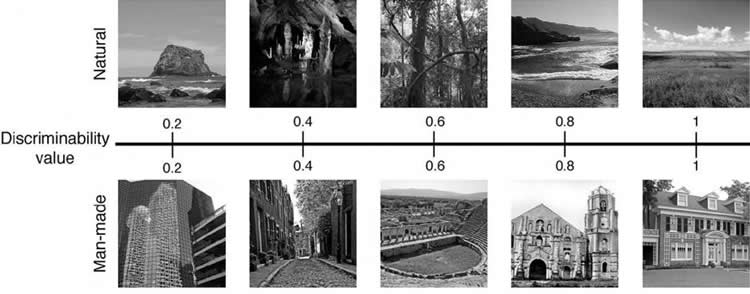

They started by establishing discriminability scores for scenery images (unlike some studies, they did not rely on abstract images with clear shapes and colors). To do this, they used a standard computational model trained on pictures from a very large database of natural scenes. Once trained, the model could perform many different categorization tasks. For each task, it could also calculate how close each image was to the category boundary, the line at which an image is a 50/50 call between one category and the other (e.g., man-made or natural). The greater an image’s mathematical distance from that boundary, the higher its discriminability score.
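The article does not name the model, so what follows is only a minimal sketch of the general idea, assuming a linear classifier (logistic regression trained by gradient descent, swapped in here for illustration) over made-up two-number feature vectors. The names `train_logistic`, `signed_distance` and `discriminability` are hypothetical. For a logistic classifier the predicted probability is exactly 0.5 on the boundary w·x + b = 0, which matches the “50/50” line described above, and the score is simply the absolute distance from that line.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function; sigmoid(0) == 0.5 (the 50/50 point)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit a linear boundary w.x + b = 0 by batch gradient descent on log-loss.

    xs: list of feature vectors; ys: labels in {0, 1}
    (hypothetically 1 = natural, 0 = man-made).
    """
    dim, n = len(xs[0]), len(xs)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of log-loss with respect to the logit
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def signed_distance(w, b, x):
    """Signed Euclidean distance from x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def discriminability(w, b, x):
    """Distance from the boundary, ignoring which side: larger means easier."""
    return abs(signed_distance(w, b, x))

# Toy, invented data: two features per "image", not real image statistics.
xs = [[2.0, 1.0], [3.0, 2.0], [2.5, 1.5], [-2.0, -1.0], [-3.0, -2.0], [-2.5, -1.5]]
ys = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(xs, ys)
for x in xs + [[0.1, -0.1]]:
    print(x, round(discriminability(w, b, x), 3))
```

A point such as `[0.1, -0.1]` sits close to the learned boundary and gets a low score, while points deep inside either cluster score high; in this sketch, that is all “discriminability” means.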

Once the researchers had a way of scoring discriminability, they conducted two experiments with small groups of human volunteers.

In the first, they asked eight volunteers to complete hundreds of trials in which they briefly glimpsed images and had to make the man-made vs. natural distinction by pressing a button. The researchers deliberately presented images spanning the full range of discriminability scores. They found that the higher an image’s discriminability, the faster and more accurately the volunteers could categorize it.
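To make the “higher discriminability, faster and more accurate” pattern concrete, here is a hypothetical analysis sketch, not the authors’ pipeline: sort trials by the model’s discriminability score, split them into equal-count bins, and report mean reaction time and accuracy per bin. The function name `summarize_by_bin`, the tuple layout and the numbers in the usage lines are invented for illustration; they are not data from the study.

```python
from statistics import mean

def summarize_by_bin(trials, n_bins=3):
    """Bin trials by discriminability and summarize each bin.

    trials: list of (discriminability, reaction_time_ms, correct) tuples.
    Returns one (mean_discriminability, mean_rt_ms, accuracy) tuple per bin,
    ordered from the least to the most discriminable bin.
    """
    if len(trials) < n_bins:
        raise ValueError("need at least one trial per bin")
    ordered = sorted(trials, key=lambda t: t[0])
    size = len(ordered) // n_bins
    summary = []
    for i in range(n_bins):
        # The last bin absorbs any leftover trials when len % n_bins != 0.
        end = (i + 1) * size if i < n_bins - 1 else len(ordered)
        chunk = ordered[i * size:end]
        summary.append((
            mean(t[0] for t in chunk),
            mean(t[1] for t in chunk),
            mean(1.0 if t[2] else 0.0 for t in chunk),
        ))
    return summary

# Invented example trials, for illustration only.
trials = [(0.1, 620, False), (0.2, 600, True), (0.5, 560, True),
          (0.6, 540, True), (1.0, 500, True), (1.2, 480, True)]
for d, rt, acc in summarize_by_bin(trials):
    print(f"d={d:.2f}  RT={rt:.0f} ms  accuracy={acc:.2f}")
```

Equal-count bins are used here so every bin has the same number of trials to average; a real analysis might instead fit a regression of reaction time on discriminability.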

That small experiment confirmed that the computational model could predict human behavioral responses and that discriminability was a significant factor. It also set the table for the more profound question: once discriminability is accounted for, do categorization levels, such as those behind the superordinate advantage, really matter?

The next experiment addressed that question by giving another 24 volunteers tasks in which they had to make both a superordinate categorization (e.g., man-made vs. natural) and a basic categorization (e.g., desert vs. beach). Half of the participants received tasks in which the superordinate level was more discriminable, and half received tasks in which the easier distinctions lay at the basic level. The experiment involved more than 1,000 trials.
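The article does not say exactly how the stimuli were chosen, so the following is only a hypothetical sketch of how a model-derived score could drive such a counterbalanced design: each image carries one discriminability score per task, images go into a “superordinate easier” or “basic easier” pool, and participants alternate between the two conditions. The function names, the `margin` parameter and the example scores are illustrative assumptions.

```python
def split_stimuli(scores, margin=0.0):
    """Partition images by which categorization level the model finds easier.

    scores: dict mapping image id -> (superordinate_d, basic_d), where each
    value is a discriminability score (distance from that task's boundary).
    Returns (superordinate_easier, basic_easier) as sorted lists of ids.
    Images whose two scores differ by no more than `margin` are left out.
    """
    sup_easier, basic_easier = [], []
    for image_id, (d_sup, d_basic) in sorted(scores.items()):
        if d_sup - d_basic > margin:
            sup_easier.append(image_id)
        elif d_basic - d_sup > margin:
            basic_easier.append(image_id)
    return sup_easier, basic_easier

def assign_conditions(participant_ids):
    """Counterbalance: alternate participants between the two conditions."""
    return {
        pid: "superordinate_easier" if i % 2 == 0 else "basic_easier"
        for i, pid in enumerate(participant_ids)
    }

# Hypothetical scores for four images, for illustration only.
scores = {"a": (2.0, 0.5), "b": (0.4, 1.8), "c": (1.0, 1.05), "d": (3.0, 1.0)}
print(split_stimuli(scores, margin=0.2))
print(assign_conditions(range(4)))
```

The `margin` keeps near-ties out of both pools, so each condition contains only images where one level is clearly easier than the other.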

If people had a hardwired priority for the superordinate level, they would always make that distinction more quickly, but that’s not what happened. Instead, participants for whom the basic categorizations were easier made those categorizations more quickly and accurately. By manipulating discriminability, the researchers eliminated the superordinate advantage and replaced it with a “basic advantage.”

These results suggest that the superordinate advantage is not necessarily part of a pre-ordained hierarchy in the brain. The superordinate categorization may simply tend to be the easier one.

“The mere fact that it is possible to reverse [the superordinate advantage] shows that it [is] not a sequential type of process,” Serre said.

It’s certainly still possible that the truth is a hybrid of the two hypotheses, Serre said. There may be some hierarchy or set of priorities, but discriminability is such a powerful factor that it can actually overwhelm them. Further experiments are underway.

As researchers continue to probe the psychology of how we sort out scenes, Serre said, they should at least use discriminability as a control in their experiments.

Funding: A National Science Foundation CAREER award to Serre funded the study. Other support came from the Defense Advanced Research Projects Agency, the Office of Naval Research, and Brown University.

Source: David Orenstein – Brown University

Image Source: The images are credited to Serre lab/Brown University

Original Research: Full open access research for “Explaining the Timing of Natural Scene Understanding with a Computational Model of Perceptual Categorization” by Imri Sofer, Sébastien M. Crouzet, and Thomas Serre in PLOS Computational Biology. Published online September 3, 2015. doi:10.1371/journal.pcbi.1004456

Abstract

Explaining the Timing of Natural Scene Understanding with a Computational Model of Perceptual Categorization

Observers can rapidly perform a variety of visual tasks such as categorizing a scene as open, as outdoor, or as a beach. Although we know that different tasks are typically associated with systematic differences in behavioral responses, to date, little is known about the underlying mechanisms. Here, we implemented a single integrated paradigm that links perceptual processes with categorization processes. Using a large image database of natural scenes, we trained machine-learning classifiers to derive quantitative measures of task-specific perceptual discriminability based on the distance between individual images and different categorization boundaries. We showed that the resulting discriminability measure accurately predicts variations in behavioral responses across categorization tasks and stimulus sets. We further used the model to design an experiment, which challenged previous interpretations of the so-called “superordinate advantage.” Overall, our study suggests that observed differences in behavioral responses across rapid categorization tasks reflect natural variations in perceptual discriminability.
